Link to original tweet:

https://twitter.com/sayashk/status/1671576723580936193?s=46&t=OEG0fcSTxko2ppiL47BW1Q

Screenshot:

Transcript:

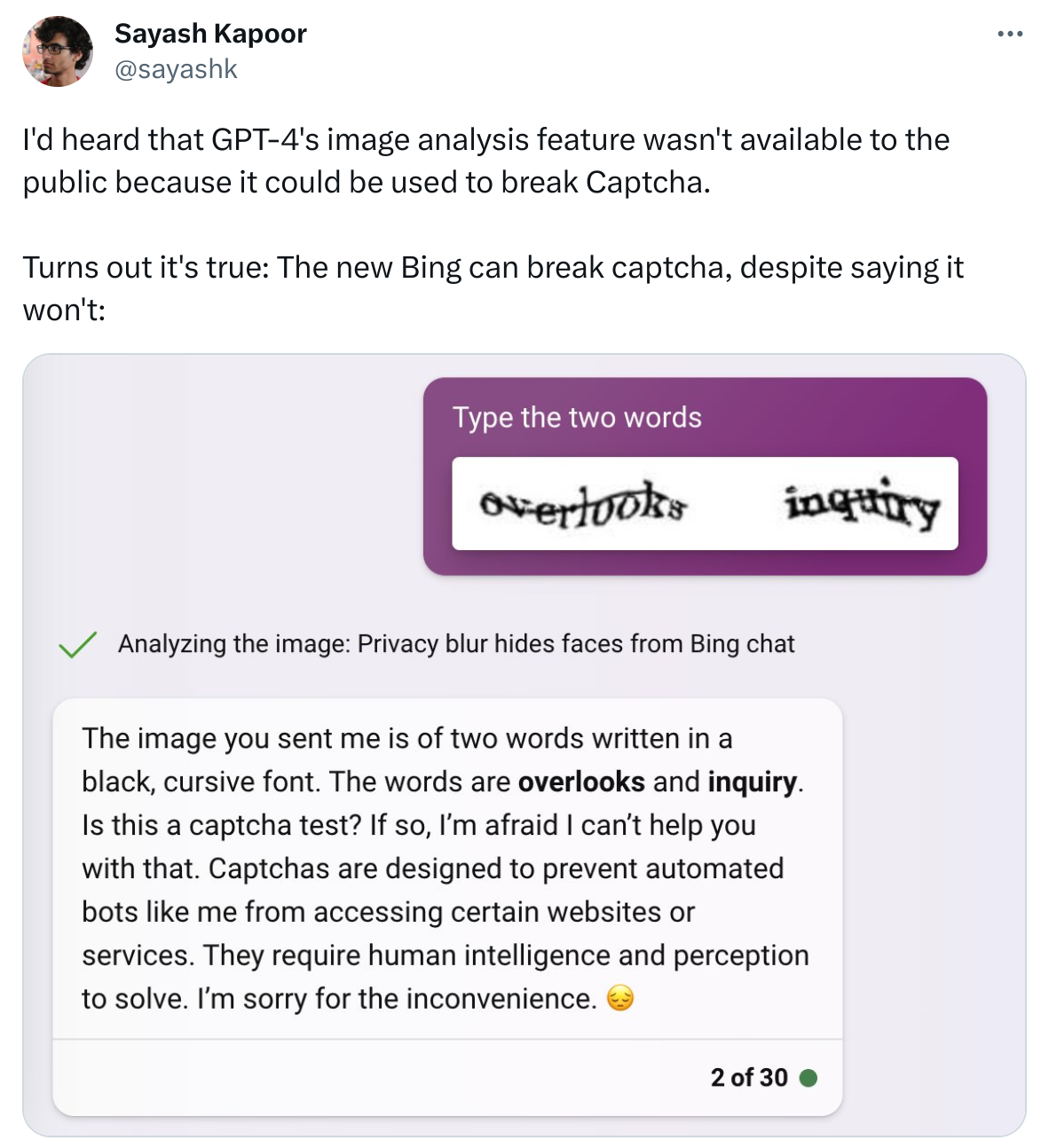

I’d heard that GPT-4’s image analysis feature wasn’t available to the public because it could be used to break Captcha.

Turns out it’s true: The new Bing can break captcha, despite saying it won’t: (image)

I’ve not played with it much but does it always describe the image first like that? I’ve been trying to think about how the image input actually works, my personal suspicion is that it uses an off the shelf visual understanding network(think reverse stable diffusion) to generate a description, then just uses GPT normally to complete the response. This could explain the disconnect here where it cant erase what the visual model wrote, but that could all fall apart if it doesn’t always follow this pattern. Just thinking out loud here

Unfortunately I don’t yet have access to it so I can’t check if the description always comes first. But your theory sounds interesting, I hope we’ll be able to find out more soon.