cross-posted from: https://programming.dev/post/177822

It’s coming along nicely, and I hope to release it in the next few days.

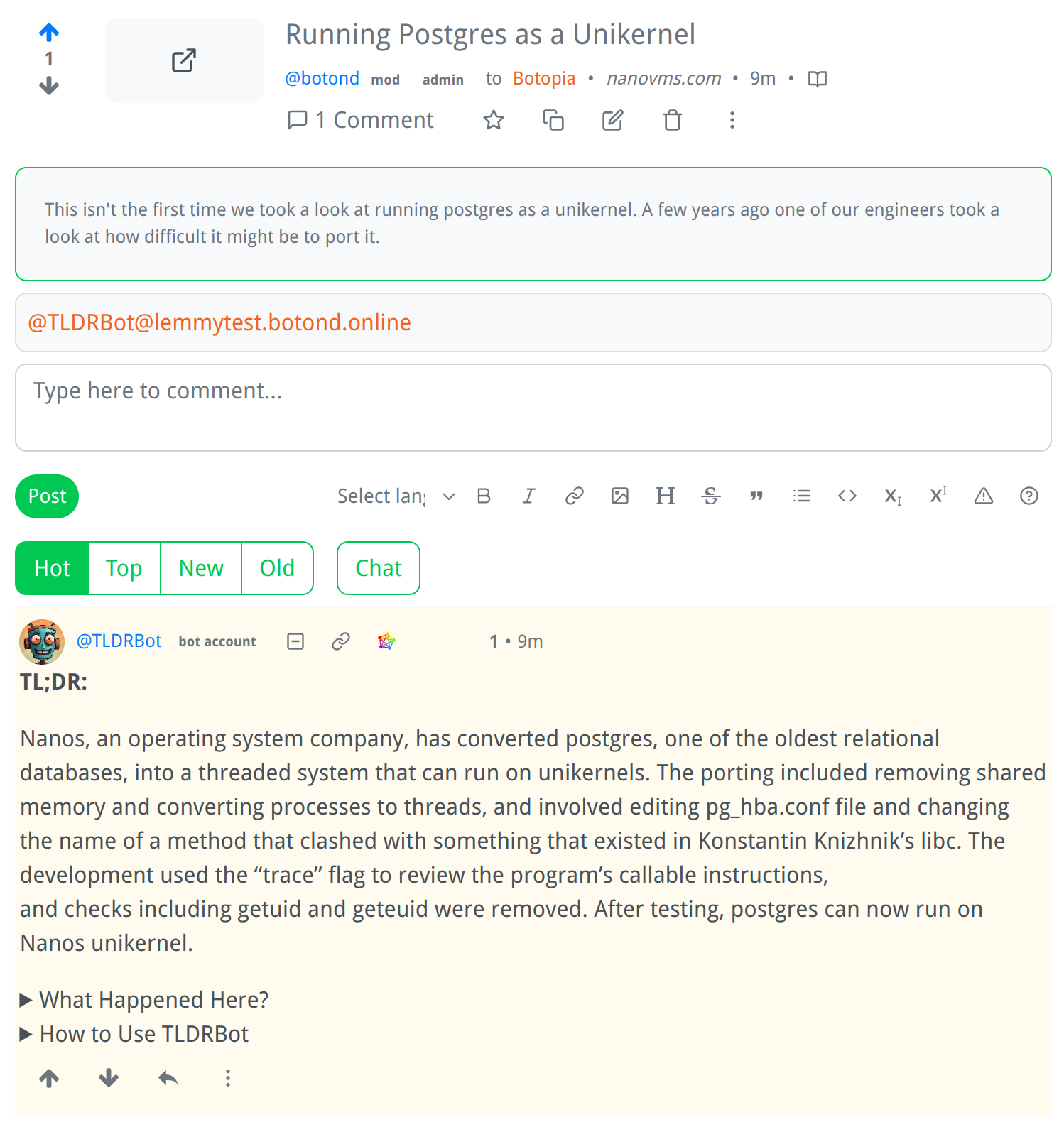

Screenshot:

How It Works:

I am a bot that generates summaries of Lemmy comments and posts.

- Just mention me in a comment or post, and I will generate a summary for you.

- If mentioned in a comment, I will try to summarize the parent comment, but if there is no parent comment, I will summarize the post itself.

- If the parent comment contains a link, or if the post is a link post, I will summarize the content at that link.

- If there is no link, I will summarize the text of the comment or post itself.
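The selection rules above could be sketched roughly like this. This is only an illustration, not the bot's actual code: the `Post` and `Comment` types, the `pick_target` function, and the URL regex are all hypothetical and do not come from the Lemmy API.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# Assumption: a simple pattern is good enough to spot a link in a comment body.
URL_RE = re.compile(r"https?://\S+")


@dataclass
class Post:
    body: str = ""
    url: Optional[str] = None  # set only for link posts (hypothetical field)


@dataclass
class Comment:
    body: str
    parent: Optional["Comment"] = None  # None for a top-level comment


def pick_target(post: Post, mention: Optional[Comment] = None) -> Tuple[str, str]:
    """Return ("link", url) or ("text", text) for the thing to summarize."""
    # Mentioned in a comment that has a parent: summarize the parent comment.
    if mention is not None and mention.parent is not None:
        parent = mention.parent
        match = URL_RE.search(parent.body)
        if match:
            # Drop trailing punctuation that is probably not part of the URL.
            return ("link", match.group(0).rstrip(".,;:!?)"))
        return ("text", parent.body)
    # No parent comment (or mentioned in the post itself): summarize the post.
    if post.url:
        return ("link", post.url)
    return ("text", post.body)
```

For example, mentioning the bot in a top-level comment under a link post would return `("link", post.url)`, while mentioning it in a reply to a plain-text comment would return that comment's text.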

Extra Info in Comments:

Prompt Injection:

Of course it’s really easy (but mostly harmless) to break it using prompt injection:

It will only be available in communities that explicitly allow it. I hope it will be useful; I’m generally very satisfied with the quality of the summaries.

Will it work on all instances?

And how do you manage costs? Querying GPT isn’t that expensive, but when many people use the bot, the costs might add up substantially.

Thanks for your reply!

I am very happy to hear that you will open source it!

I am curious - have you tested how well it handles a direct link to a scientific article in PDF format?

It only handles HTML currently, but I like your idea, thank you! I’ll look into implementing reading PDFs as well. One problem with scientific articles, however, is that they are often quite long and don’t fit into the model’s context. I would need to do recursive summarization, which would use many more tokens and could become pretty expensive. (Of course, the same problem occurs if a web page is too long; I just truncate it currently, which is a rather barbaric solution.)
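To make the trade-off concrete, here is a minimal sketch of the two approaches. It is not the bot's actual implementation: `summarize` stands in for whatever function calls the model, and the limit is counted in characters rather than tokens to keep the example self-contained. Both are assumptions.

```python
from typing import Callable, List


def split_chunks(text: str, max_chars: int) -> List[str]:
    """Cut the text into consecutive pieces of at most max_chars characters."""
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


def summarize_truncated(text: str, summarize: Callable[[str], str], max_chars: int) -> str:
    """Truncation: one model call, everything past the limit is ignored."""
    return summarize(text[:max_chars])


def summarize_recursive(text: str, summarize: Callable[[str], str], max_chars: int) -> str:
    """Recursive summarization: summarize each chunk, then summarize the summaries."""
    if len(text) <= max_chars:
        return summarize(text)
    partials = [summarize(chunk) for chunk in split_chunks(text, max_chars)]
    combined = "\n".join(partials)
    if len(combined) >= len(text):
        # The summaries did not shrink the text; fall back to truncation
        # so the recursion is guaranteed to stop.
        return summarize(combined[:max_chars])
    return summarize_recursive(combined, summarize, max_chars)
```

With a text of 100 characters and a limit of 40, truncation makes one model call, while the recursive version makes at least four (three chunks plus one pass over the combined summaries), which is where the extra token cost comes from.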

Thanks for your response!

I imagined that this would be harder to pull off. There is also the added complexity that the layout contains figures and references… Still, it’s pretty cool. I’ll keep an eye on this project, and might give self-hosting it a try once it’s ready!

LLMs can do a surprisingly good job even if the text extracted from the PDF isn’t in the right reading order.

Another thing I’ve noticed is that figures are usually explained thoroughly in the text, so the model doesn’t need to see them to generate a good summary. Human communication is very redundant, and we don’t realize it.