Personally, I have nothing against the emergence of new programming languages. This is cool:

- the industry does not stand still

- competition allows existing languages to develop and borrow features from new ones

- developers have the opportunity to learn new things while avoiding burnout

- there is a choice for beginners

- there is a choice for specific tasks

But why do most people dislike the C language so much? But it remains the fastest among high-level languages. Who benefits from C being suppressed and attempts being made to replace him? I think there is only one answer - companies. Not developers. Developers are already reproducing the opinion imposed on them by the market. Under the influence of hype and the opinions of others, they form the idea that C is a useless language. And most importantly, oh my god, he’s unsafe. Memory usage. But you as a programmer are (and must be) responsible for the code you write, not a language. And the one way not to do bugs - not doing them.

Personally, I also like the Nim language. Its performance is comparable to C, but its syntax and elegance are more modern.

And in general, I’m not against new languages, it’s a matter of taste. But when you learn a language, write in it for a while, and then realize that you are burning out 10 times faster than before, you realize the cost of memory safety.

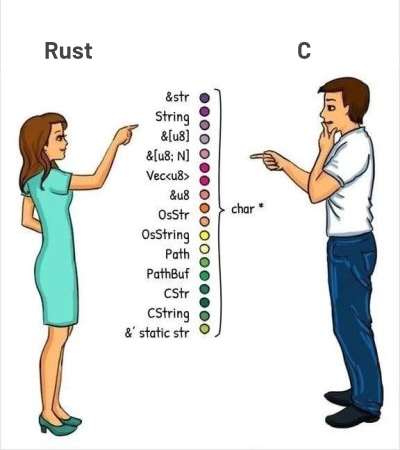

This is that cost:

max width of utf8 is 32 bits from my extensive research (1 minute of googling) so it should work, right?

UTF-8 is a variable encoding so none of the fixed sized type would work better for it.

But it would work

It would no longer be UTF-8; it would be UTF-32. UTF-8 is an encoding scheme, meaning that it is a specification for exactly how text is encoded as bits.

You can certainly use UTF-32 to represent all valid unicode, but you can only do that within the bounds of a single program; once you need to read or write data to or from an external source (say, the file system, or over a network), you’d need to use the same encoding that the other software uses, which is usually UTF-8 (and almost never UTF-32).