- cross-posted to:

- [email protected]

- [email protected]

- [email protected]

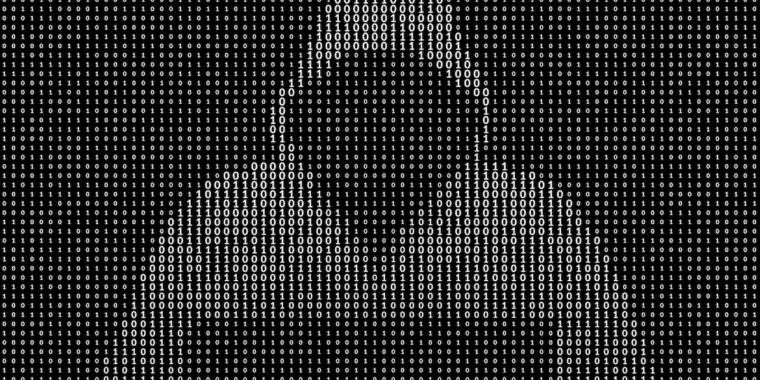

LLMs are trained to block harmful responses. Old-school images can override those rules.
