I don’t speak C, but isn’t this an extreme simplification of the issue? I thought memory could be abused in an almost infinite number of subtle ways outside of allocating it wrong. For example, improperly sanitized string inputs. I feel like if it were this easy, it would have been done decades ago.

Buffer overflows are far from the only way for improperly sanitized inputs to be a problem

Use after free, null pointer dereference, double free.

Solutions to these in C end up looking a lot like Rust.
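To make that concrete, here is a minimal sketch of what an ownership-style convention for heap buffers might look like in plain C. The `owned_buf` type and its functions are made up for illustration; nothing in the compiler enforces them, so it only helps if everyone on the project sticks to the convention.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical owning handle for a heap buffer (not a standard type). */
typedef struct {
    char  *data;   /* NULL once the buffer has been released or moved */
    size_t len;
} owned_buf;

/* Allocate a zeroed buffer; on failure data is NULL and len is 0. */
owned_buf owned_buf_new(size_t len) {
    owned_buf b = { calloc(len, 1), len };
    if (b.data == NULL) b.len = 0;
    return b;
}

/* Release through a pointer to the handle so the handle can be reset.
   A second call is a harmless no-op because free(NULL) does nothing. */
void owned_buf_release(owned_buf *b) {
    assert(b != NULL);
    free(b->data);
    b->data = NULL;
    b->len = 0;
}

/* Transfer ownership: the source is emptied, so only one handle can free. */
owned_buf owned_buf_move(owned_buf *src) {
    assert(src != NULL);
    owned_buf dst = *src;
    src->data = NULL;
    src->len = 0;
    return dst;
}
```

This guards against double free and makes a stale handle show up as NULL, but any raw `char *` copied out of the handle can still dangle. Closing that gap is roughly where you start reinventing a borrow checker.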

Yes. If you disabled unions and pointer casts, basically no C code would compile.

I think this can be explained by underlining the differences between could, would, and should.

The blog states the fact that at least some C compilers already offer the necessary and sufficient tools that characterize “memory-safe” languages, and proceeds to illustrate examples. This isn’t new. However, just like “memory-safe” languages enforce narrow coding styles through a happy path that is expected to prevent the introduction of some classes of vulnerabilities, leveraging these compiler features in C projects also requires the same type of approach.

This isn’t new or unheard of. Some C++ frameworks are also known for supporting their own memory management and object ownership strategies, but you need to voluntarily adhere to them.

In my opinion, the article is flawed in several ways:

Many want to solve this by hard-forking the world’s system code, either by changing C/C++ into something that’s memory-safe, or rewriting everything in Rust.

We are building a convenient strawman here. The foolish unnamed “many” who wish to rewrite everything in rust shall remain unnamed. But rest assured there are many. In any case, a false dichotomy is presented: rewrite all, or enhance C/C++. In fact a reasonable compromise is possible: rust is perfectly capable of interoperating with the C languages. Large C and C++ projects such as the Linux kernel and Firefox have successfully incorporated rust into their codebase. In this way codebases may be slowly refactored, incorporating safety piecewise.

The core principle of computer-science is that we need to live with legacy, not abandon it.

Citation needed. Not abandoning working code is clearly a Good Idea™, but calling it the core principle of all computer science? I would require some further justification.

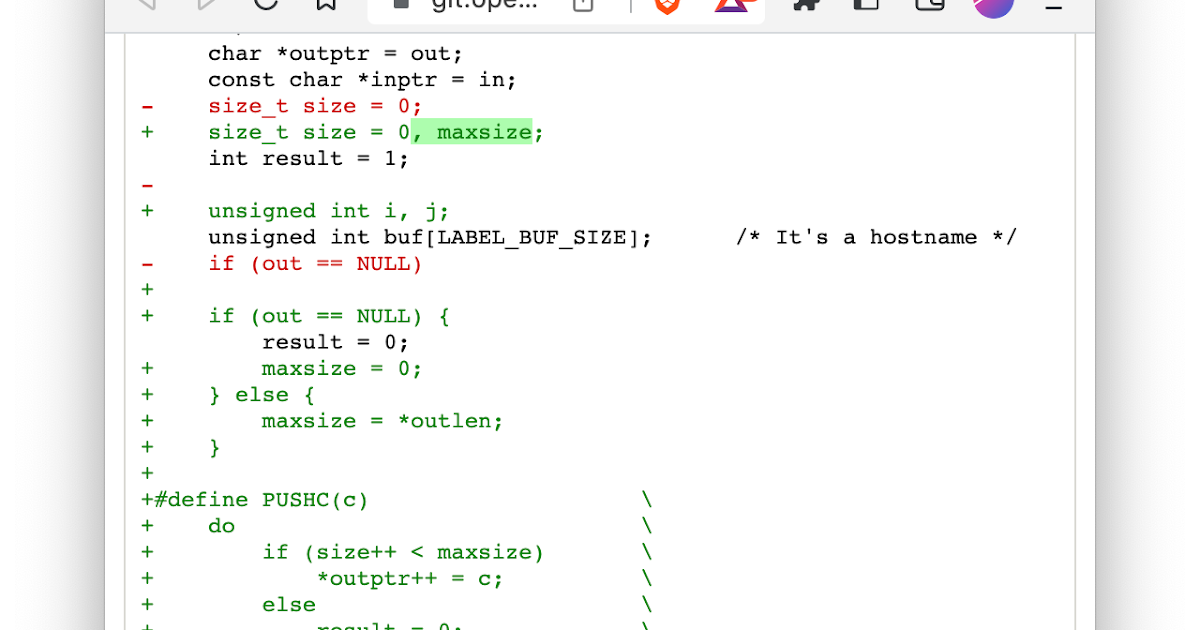

This specific feature isn’t in compilers. But gcc and clang already have other similar features. They’ve only been halfway implemented. This feature would be relatively easy to add. I’m currently studying the code to see how I can add it myself. I could just mostly copy what’s done for the alloc_size attribute. But there’s a considerable learning curve, I’d rather just persuade an existing developer of gcc or clang to add the new attributes for me.
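For context, this is roughly what the existing alloc_size attribute mentioned in the quote looks like in GCC and Clang. It is only a sketch: `my_alloc` and `tracked_size_of_16_bytes` are hypothetical names, and the new attributes the article proposes are not shown because they do not exist yet.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical wrapper: alloc_size(1) tells the compiler that the returned
   pointer refers to n bytes, where n is the first argument. */
__attribute__((alloc_size(1)))
void *my_alloc(size_t n) {
    return malloc(n);
}

/* Ask the compiler what it knows about a 16-byte allocation.
   __builtin_object_size yields the size when it can be tracked (typically
   with optimization enabled) and (size_t)-1 when it cannot. */
size_t tracked_size_of_16_bytes(void) {
    void *p = my_alloc(16);
    size_t known = __builtin_object_size(p, 0);
    free(p);
    assert(known == 16 || known == (size_t)-1);
    return known;
}
```

Note that the answer depends on the compiler and optimization level, which is part of why this kind of tracking is a hardening aid rather than a guarantee.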

“It would be pretty easy to make. In fact I’m already doing it. But it’s actually quite hard, so I’d rather get someone else to do it” is quite the argument.

With such features, the gap is relative small, mostly just changing function parameter lists and data structures to link a pointer with its memory-bounds. The refactoring effort would be small, rather than a major rewrite.

The argument, such as I understand it, goes like this: bounds checking is an aspect of memory safety. We can add automatic bounds checking easily to C. Once it’s there, existing C programs only require minor modifications to compile again. All other memory safety features can be added in a similar way.
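As a rough illustration of "linking a pointer with its memory-bounds", the refactoring might look something like the sketch below. The `int_span` type and its helpers are hypothetical and written by hand; the article's idea is that the compiler would generate the checks, which this sketch does not show.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical "pointer plus bounds" pair; not a standard C type. */
typedef struct {
    int   *ptr;
    size_t count;   /* number of valid elements behind ptr */
} int_span;

/* Before: int get(const int *p, size_t i) -- the bound travels separately.
   After: the bound is part of the argument, so every access can be checked. */
bool span_get(int_span s, size_t i, int *out) {
    assert(out != NULL);
    if (i >= s.count) return false;   /* report the error instead of crashing */
    *out = s.ptr[i];
    return true;
}

bool span_set(int_span s, size_t i, int value) {
    if (i >= s.count) return false;
    s.ptr[i] = value;
    return true;
}

/* Checked sub-span; returns an empty span if the range is empty or does not fit. */
int_span span_slice(int_span s, size_t start, size_t n) {
    int_span empty = { NULL, 0 };
    if (n == 0 || start > s.count || n > s.count - start) return empty;
    int_span r = { s.ptr + start, n };
    return r;
}
```

Even in this small case every call site has to change its signature and decide what to do when a check fails, which is where the "minor modifications" estimate starts to look optimistic.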

It seems to me that the author underestimates the problem.

Firstly, bounds checking is indeed only one aspect. Achieving memory safety as exists in rust requires many such features to be added to C. Secondly, it is not necessarily the case that once the compiler detects unsafe code, the fix is always small. Bounds checking is a convenient case for this argument: simply add bounds checks. Refactoring code to remove e.g. data races may not be so simple. Especially so because “crash when an unsafe access is detected” is often not a desirable solution. One must refactor the code such that the unsafe conditions (and the crash) cannot occur.

Indeed, code written in rust often entirely avoids patterns that are common in C, for the simple fact that they are hard/impossible to write such that they can be proven safe by the compiler. Just because you can add the checks to the compiler doesn’t mean the rest is “easy” or “minor.”

Lastly, I’m greatly in favor of enabling C programmers to write safer code. That’s a good thing! C code is not going away soon, and they need all the help they can get. However, I believe that the idea that one can gain all the benefits rust offers with a few additions to the C compiler and some refactoring is not likely to be true. And as stated before, a language that offers the features you need is already available and can be integrated into your C project! You could consider using it for your refactoring.

In fact a reasonable compromise is possible: rust is perfectly capable of interoperating with the C languages.

I doubt you work on software for a living, because not only are you arguing a problem in a desperate need for a solution but also no one in their right mind would think it is a good idea to double the tech stacks and development environments and pipelines and everything, and with that greatly increase the cognitive load required to develop even the simplest features, just to… For what exactly? What exactly is your value proposition, and what tradeoffs, if any, did you take into account?

You are free to do whatever you feel like doing in your pet projects. Rewrite them in as many languages you feel like using. In professional settings where managers have to hire people and have time and cash budgets and have to show bugs and features being finished, this sort of nonsense doesn’t fly.

You’re joking right? The person you’re replying to mentioned examples that are doing it, e.g. Firefox.

The core principle of computer-science is that we need to live with legacy, not abandon it.

The problem isn’t a principle of computer science, but one of just safety. Also, who said this is a principle of computer science?

The problem isn’t a principle of computer science, but one of just safety.

I think you missed the point entirely.

You can focus all you want on artificial ivory-tower scenarios, such as a hypothetical ability to rewrite everything from scratch with the latest and greatest tech stacks. Back in the real world, that is a practical impossibility in virtually all scenarios, and a renowned project killer.

In addition, the point stressed in the article is that you can add memory safety features even to C programs.

Also, who said this is a principle of computer science?

Anyone who devotes any resources to learning software engineering.

Here’s a somewhat popular essay on the subject:

https://www.joelonsoftware.com/2000/04/06/things-you-should-never-do-part-i/

C compilers can’t tell you if your code has data races. That is one of the major selling points of Rust. How can the author claim that these features already exist in C compilers when they simply don’t?

Forking is a foolish idea. The core principle of computer-science is that we need to live with legacy, not abandon it.

what a crazy thing to say. The core principle of computer-science is to continue moving forward with tech, and to leave behind the stuff that doesn’t work. You don’t see people still using fortran by choice, you see them living with it because they’re completely unable to move off of it. If you’re able to abandon bad tech then the proper decision is to do so. OP keeps linking Joel, but Joel doesn’t say to not rewrite stuff, he says to not rewrite stuff for large scale commercial applications that currently work. C clearly isn’t working for a lot of memory safe applications. The logic doesn’t apply there. It also clearly doesn’t apply when you can write stuff in a memory safe language alongside existing C code without rewriting any C code at all.

And there’s no need. Modern C compilers already have the ability to be memory-safe, we just need to make minor – and compatible – changes to turn it on. Instead of a hard-fork that abandons legacy system, this would be a soft-fork that enables memory-safety for new systems.

this has nothing to do with the compiler, this has to do with writing ‘better’ code, which has proved impossible over and over again. The problem is the programmers and that’s never going to change. Using a language that doesn’t need this knowledge is the better choice 100% of the time.

C devs have been claiming ‘the language can do this, we just need to implement it’ for decades now. At this point it’s literally easier to slowly port to a better language than it is to try and ‘fix’ C/C++.

this has to do with writing ‘better’ code, which has proved impossible over and over again

I can’t speak for C, as I don’t follow it that much, but for C++, this is just not fair. It has been proven repeatedly that it can be done better, and much better. Each iteration has made so many things simpler, more productive, and also safer. Now, there are two problems with what I just said:

- That it has been made safer doesn’t mean that everyone makes good use of it.

- That it has been made safer doesn’t mean that everything is fixable, or that it’s on the same level as other, newer languages.

If that last part is what you mean, fine. But the way that you phrased it (and that I quoted) is just not right.

At this point it’s literally easier to slowly port to a better language than it is to try and ‘fix’ C/C++.

Surely not for everything. Of course I see great value if I can stop depending on OpenSSL, and move to a better library written in a better language. Seriously looking forward to the day when I see dynamic libraries written in Rust in my package manager. But I’d like to see what’s the plan for moving a large stack of C and C++ code, like a Linux distribution, to some “better language”. I work every day on such a stack (e.g. KDE Neon in my case, but applicable to any other typical distro with KDE or GNOME), and deploy to customers on such a stack (on Linux embedded like Yocto). Will the D-Bus daemon be written in Rust? Perhaps. Systemd? Maybe. NetworkManager, Udisks, etc.? Who knows. All the plethora of C and C++ applications that we use every day? Doubtful.

I can’t speak for C, as I don’t follow it that much, but for C++, this is just not fair. It has been proven repeatedly that it can be done better, and much better. Each iteration has made so many things simpler, more productive, and also safer. Now, there are two problems with what I just said:

That comment was not talking about programming languages, it was talking about humans’ inability to write perfect code. Humans are unable to solve problems correctly 100% of the time. So if the language doesn’t do it for them then it will not happen. See Java for a great example of this. Java has Null Pointer Exceptions absolutely everywhere. So a bunch of different groups created annotations that would give you warnings, and even fail to compile if something was mismatched or a null check was missed. But if you miss a single @NotNull annotation anywhere in the code, then suddenly you can get null errors again. It’s not enforced by the type system and as a result humans can forget. Kotlin came along and ‘solved it’ at the type level, where types are nullable or non-nullable. But, hilariously enough, you can still get NPEs in Kotlin because it’s commonly used to interop with Java.

My point is that C/C++ can’t solve this at a fundamental level, the same way Kotlin and Java cannot solve this. Programmers are the problem, so you have to have a system that was built from the ground up to solve the problem. That’s what we are getting in modern day languages. You can’t just tack the system on after the fact, unless it completely removes any need for the programmer to do literally anything, because the programmer is the problem.

Surely not for everything. Of course I see great value if I can stop depending on OpenSSL, and move to a better library written in a better language. Seriously looking forward to the day when I see dynamic libraries written in Rust in my package manager. But I’d like to see what’s the plan for moving a large stack of C and C++ code, like a Linux distribution, to some “better language”. I work every day on such a stack (e.g. KDE Neon in my case, but applicable to any other typical distro with KDE or GNOME), and deploy to customers on such a stack (on Linux embedded like Yocto). Will the D-Bus daemon be written in Rust? Perhaps. Systemd? Maybe. NetworkManager, Udisks, etc.? Who knows. All the plethora of C and C++ applications that we use every day? Doubtful.

I’m not talking about wholesale rewrites. I’m talking about what Linux is already doing with writing new code in Rust, or small portions of performance critical code in a memory safe language. I’m not talking about what Fish Shell did, rewriting the whole codebase in one go, because that’s not realistic. But slowly converting an entire codebase over? That’s incredibly realistic. I’ve done so with several 250k+ line Java codebases, converting them to Kotlin. When languages are built to be easy to move to (Rust, Kotlin, etc), then migrating to them slowly over time where it matters is easily attainable.

The core principle of computer-science is to continue moving forward with tech, and to leave behind the stuff that doesn’t work.

I’m not sure you realize you’re proving OP’s point.

Rewriting projects from scratch by definition represents a big step backwards because you’re wasting resources to deliver barely working projects that have a fraction of the features that the legacy production code already delivered and reached a stable state.

And you are yet to present a single upside, if there is even one.

At this point it’s literally easier to slowly port to a better language than it is to try and ‘fix’ C/C++.

You are just showing the world you failed to read the article.

Also, it’s telling that you opt to frame the problem as "a project is written in C instead of <insert pet language>" instead of actually securing and hardening existing projects.

And you are yet to present a single upside, if there is even one.

This is a flippant statement, honestly, as it disregards the premise of the discussion. It’s memory safety. That’s the upside. The author even linked to memory safety bugs in OpenSSL. They might still exist elsewhere. (I realize there is a narrow class of memory bugs that C compilers understand, but it’s just that, a narrow class). We have scant way of knowing whether or not they exist without significant testing effort that is not likely to happen. And it would be fighting a losing battle anyway, because someone is writing new C code to maintain these legacy systems.

This is a flippant statement, honestly, as it disregards the premise of the discussion. It’s memory safety.

You’re completely ignoring even the argument you’re supposedly commenting on, let alone the point it makes. You’re parroting cliches while purposely turning a blind eye to the point made in the blog that yes, C can be memory safe. Likewise, Rust also has CVEs due to memory safety bugs. So, beyond these cliches, what exactly are you trying to argue?

Rewriting projects from scratch by definition represents a big step backwards because you’re wasting resources to deliver barely working projects that have a fraction of the features that the legacy production code already delivered and reached a stable state.

Joel’s point was about commercial products not programming languages. I’m not the one misunderstanding here. When people talk about using Rust, it’s not talking about rewriting every single thing ever written in C/C++. It’s about leaving C/C++ behind and moving on to something that doesn’t have the issues of the past. This is not about large scale commercial rewrites. It’s about C’s inability to deal with these problems.

You are just showing the world you failed to read the article.

sure thing bud.

Also, it’s telling that you opt to frame the problem as "a project is written in C instead of <insert pet language>" instead of actually securing and hardening existing projects.

I didn’t say that and you know it. Also it’s quite telling (ooh, I can say the same things you can) that you think “better language” means “pet language”. Actually laughable.

The C dev doth protest too much, methinks.

I’m a C dev and I don’t care if C ever goes extinct, but the MCU toolchains are pretty much always C/C++.

If it was for a personal project, I wouldn’t mind setting up a Rust compiler, but in a work setting, the uncertainty of unofficial crates (edit: I don’t sleep enough) is a no go for a product that needs to be maintained for years to come.

And even when an official toolchain is made, it will take a while to spread.

But I really like all the features of newer language over C, so if I have the opportunity to change language, I will take it.

I’m merely commenting on the fact that every time this comes up, it’s just a chant of “skill issue” over and over again. The problem is that it’s hard to do it correctly, and there are so many more ways to do it incorrectly.

I know what you mean. I’ve been doing higher level development for a couple decades and only now really getting into embedded stuff the past year or two. I dislike a lot of what C makes necessary when dealing with memory and controlling interrupts to avoid data races.

I see rust officially supported on newer ARM Cortex processors and that sounds like it would be an awesome environment. But I’m not about to stake these projects with a hobbyist library for the 8-bit AVR processors I’m actually having to use.

Unfortunately I just have to suck it up and understand how the ECU works at the processor/instruction level and it’s fine until there are better tools (or I get to use better processors).

ETA: I’ve thought also that most of the avr headers are just register definitions and simple macros, maybe it wouldn’t be so bad to convert them to rust myself? But then it’s my library that’s probably broken lol

Humans can be immortal

Don’t believe me? I went to my local old folks home and found some who are older than 50!!