This is pretty interesting:

> The results highlight significant differences in browser security: while Google Chrome and Samsung Internet exhibited lower threat indices, Mozilla Firefox demonstrated consistently higher scores, indicating greater exposure to risks. These observations slightly contradict widespread opinion.

I’m nowhere near qualified to comment on their methodology. This is a full-blown whitepaper… but I’m going to run my mouth anyway!

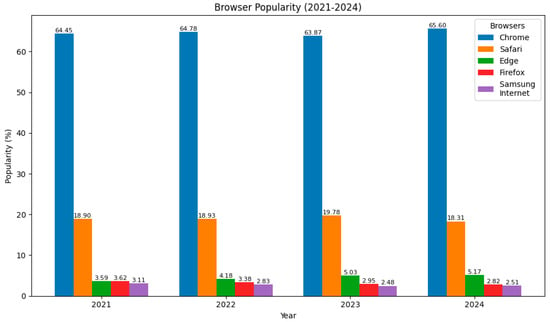

It seems that a significant factor in their assessment of how “bad” the situation is involves weighting by browser popularity. They appear to assume that a larger userbase naturally leads to more CVEs being discovered and disclosed. Their formula is therefore weighted so that each individual Chrome CVE counts for less than each individual Firefox CVE.
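To get a feel for how that kind of weighting can flip a ranking, here’s a toy sketch. It is **not** the paper’s formula. I’m assuming the simplest possible version: divide the raw CVE count by market share. The CVE counts and market shares below are made-up numbers chosen only to show the mechanism.

```python
from dataclasses import dataclass


@dataclass
class Browser:
    name: str
    cves: int            # raw CVE count over some period (hypothetical number)
    market_share: float  # fraction of users, 0..1 (hypothetical number)


def weighted_score(b: Browser) -> float:
    """Hypothetical popularity-weighted score: CVEs per unit of market share.

    Dividing by market share makes each CVE of a popular browser "cost" less
    than each CVE of a niche browser.
    """
    return b.cves / b.market_share


# Invented figures for illustration only; not taken from the paper.
browsers = [
    Browser("Chrome", cves=300, market_share=0.65),
    Browser("Firefox", cves=120, market_share=0.03),
]

for b in browsers:
    print(f"{b.name}: raw CVEs={b.cves}, weighted score={weighted_score(b):.0f}")
```

Even though the “Chrome” entry has more than twice the raw CVEs, its weighted score (about 462) ends up far below the “Firefox” entry’s (4000). The whole outcome hinges on how heavily popularity is allowed to discount each CVE.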

I personally think it’s admirable that they tried to account for this mathematically. But it assumes a hard statistical link between popularity and CVE count where only a “loose” correlation may exist.
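One way to make that concern concrete is a simplified model of my own, not the paper’s actual formula. Suppose the score divides a browser’s CVE count $N_b$ by its user share $u_b$. That only treats browsers evenly if the expected CVE count is strictly proportional to users. If the real link is looser, say sublinear with some hypothetical exponent $\beta < 1$ and constant $k$, the division over-corrects:

$$
S_b = \frac{N_b}{u_b}, \qquad \mathbb{E}[N_b] = k\,u_b^{\beta} \;\Rightarrow\; \mathbb{E}[S_b] = k\,u_b^{\beta - 1}
$$

With $\beta = 1$ (the hard link) the popularity term cancels out. With $\beta < 1$, the factor $u_b^{\beta - 1}$ shrinks as $u_b$ grows. The most popular browser then gets a lower expected score purely because it is popular. Neither $k$ nor $\beta$ comes from the paper. They’re just there to show the shape of the problem.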

By the paper’s own admission, Chrome has more CVEs than Firefox over the same period. But through their complex weighting formula, they argue that each of Chrome’s CVEs means less than each of Firefox’s.