Silicon Valley is bullish on AI agents. OpenAI CEO Sam Altman said agents will “join the workforce” this year. Microsoft CEO Satya Nadella predicted that agents will replace certain knowledge work. Salesforce CEO Marc Benioff said that Salesforce’s goal is to be “the number one provider of digital labor in the world” via the company’s various “agentic” services.

But no one can seem to agree on what an AI agent is, exactly.

In the last few years, the tech industry has boldly proclaimed that AI “agents” — the latest buzzword — are going to change everything. In the same way that AI chatbots like OpenAI’s ChatGPT gave us new ways to surface information, agents will fundamentally change how we approach work, claim CEOs like Altman and Nadella.

That may be true. But it also depends on how one defines “agents,” which is no easy task. Much like other AI-related jargon (e.g. “multimodal,” “AGI,” and “AI” itself), the terms “agent” and “agentic” are becoming diluted to the point of meaninglessness.

AI agents are only software assistants that can monitor their environment, make intelligent decisions, and take actions to achieve pre-defined goals. https://transcriptly.org/youtube-transcript-generator?videoId=QEJpZjg8GuA&language=en

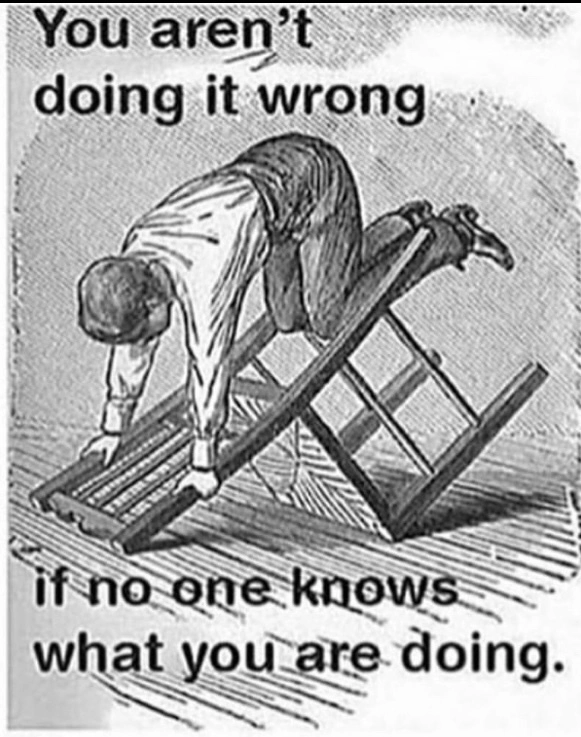

You can’t fail at anything if your goals aren’t clearly stated.

I had a similar thought earlier.

This clearly is an AI agent!

They keep pretending autocorrect bots will replace knowledge work but they still can’t overcome the nature of generative transformers. They’re still just lying.

What we ought to be discussing is what’s to be done with the liars.

Not buying their shit, hopefully.

Etymologically “agent” is just a fancy borrowed synonym for “doer”. So an AI agent is an AI that does. Yup, it’s that vague.

You could instead restrict the definition further and say that an AI agent does things autonomously. Then the concept is mutually exclusive with “assistant”, since an assistant does nothing on its own; it’s only there to assist someone else. And yet look at what Pathak said: that she understood the two terms to be interchangeable.

…so might as well say that “agent” is simply the next buzzword, since people aren’t so excited about the concept of artificial intelligence anymore. They’ve used those dumb text gens and given them either a six-fingered thumbs up or a thumbs down, but they’re generally aware that the tech doesn’t do a fraction of what they believed it would.

> …so might as well say that “agent” is simply the next buzzword, since people aren’t so excited with the concept of artificial intelligence any more

This is exactly the reason for the emphasis on it.

The reality is that LLMs are impressive and nice to play with. But investors want to know where the big money will come from, and LLMs aren’t that useful to companies in their current state. I think one of their biggest uses is extracting information from text-heavy documents.

So “agents” are supposed to be LLMs that execute actions (such as calling APIs) instead of just outputting text. That doesn’t seem like the best idea, considering they’re not great at making decisions at all, despite these companies’ efforts to paint them as capable of it.
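To make the text-versus-action distinction concrete, here is a minimal, hypothetical sketch of an agent loop. Everything in it is invented for illustration: `fake_model`, the tools `set_location` and `search_store`, and the JSON decision format. A real system would call an actual LLM and real APIs. What separates it from a chatbot is that the model's output gets parsed and *executed* as a tool call. The result is then fed back in before the next decision.

```python
import json
from typing import Callable

# Hypothetical tools the "agent" may call. Real agents would hit
# actual web APIs or drive a browser; these just return strings.
def set_location(city: str) -> str:
    return f"location set to {city}"

def search_store(item: str, city: str) -> str:
    return f"found {item} in {city}"

TOOLS: dict[str, Callable[..., str]] = {
    "set_location": set_location,
    "search_store": search_store,
}

def fake_model(goal: str, history: list[str]) -> str:
    """Stand-in for an LLM. A real model would condition on `goal` and
    `history`; this scripted version ignores the goal and just picks the
    next decision based on how many actions have already run."""
    scripted = [
        {"type": "tool_call", "name": "set_location", "args": {"city": "San Francisco"}},
        {"type": "tool_call", "name": "search_store",
         "args": {"item": "milk", "city": "San Francisco"}},
        {"type": "final", "text": "Milk is in your cart."},
    ]
    return json.dumps(scripted[min(len(history), len(scripted) - 1)])

def run_agent(goal: str, max_steps: int = 5) -> tuple[str, list[str]]:
    """Ask the model for a decision, execute it, record the result, repeat."""
    history: list[str] = []
    for _ in range(max_steps):
        decision = json.loads(fake_model(goal, history))
        if decision["type"] == "final":
            return decision["text"], history
        # The "agentic" step: the model's output is run, not just displayed.
        tool = TOOLS[decision["name"]]
        history.append(tool(**decision["args"]))
    return "gave up", history

if __name__ == "__main__":
    print(run_agent("buy milk"))
```

In this sketch the "model" is scripted, so it always picks San Francisco. With a real model, any one of those decisions can come out wrong, for example searching stores in the wrong city. The loop will carry out the wrong choice just as faithfully.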

oh, this one’s pretty easy, actually

a normal AI tells you it’s safe to eat one rock per day

an AI agent waits for you to open your mouth, and then throws a rock at your face. but it’s smart enough to only do that once a day.

Casey Newton reviewed OpenAI’s “agent” back in January

he called it “promising but frustrating”…but this is the type of shit he considers “promising”:

My most frustrating experience with Operator was my first one: trying to order groceries. “Help me buy groceries on Instacart,” I said, expecting it to ask me some basic questions. Where do I live? What store do I usually buy groceries from? What kinds of groceries do I want?

It didn’t ask me any of that. Instead, Operator opened Instacart in the browser tab and began searching for milk in grocery stores located in Des Moines, Iowa.

At that point, I told Operator to buy groceries from my local grocery store in San Francisco. Operator then tried to enter my local grocery store’s address as my delivery address.

After a surreal exchange in which I tried to explain how to use a computer to a computer, Operator asked for help. “It seems the location is still set to Des Moines, and I wasn’t able to access the store,” it told me. “Do you have any specific suggestions or preferences for setting the location to San Francisco to find the store?”

they’re gonna revolutionize the world, it’s gonna evolve into AGI Real Soon Now…but also if you live in San Francisco and tell it to buy you groceries it’ll order them from Iowa.

Look, man, it’s trying its best. And frankly, I think it’s about as ready as it could ever be to replace every single billionaire in the world, considering the sanity of many billionaires’ choices we’ve heard about lately.

So by agent, they mean the same AI but they’re doing all the things you shouldn’t with it? Great…

Secret Agent AI, Secret Agent AI

They’ve given you a name, and taken away your number.

idk, I’m sick of AI right now. Every time I start to like it a bit, I get so many bugs that I end up doing 3x as much mental work as I would have if I’d just learned it and done it myself. At least 3x, sometimes 20x, because in the end I do learn it myself and can see the difference in time lol

I’m just stubborn and really want it to work. It feels like it has the answer and I’m just not asking the right question (because that’s also true sometimes: a little more context and it works). I’m over it. It’s like a game, not a tool.