- cross-posted to:

- [email protected]

- demicrosoft

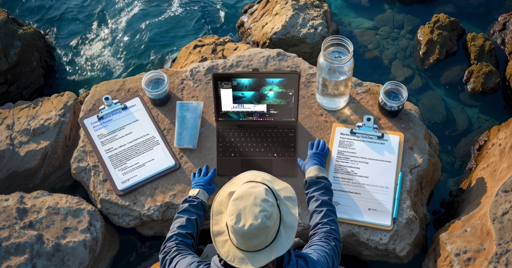

This shot was probably made by AI — the clipboards and Mason jar are good tells. | Image: Microsoft

Microsoft has revealed that it’s created a minute-long advert for its Surface Pro and Surface Laptop hardware using generative AI. But there’s a twist: it released the ad almost three months ago, and no one seemed to notice the AI elements.

The ad, which went live on YouTube on January 30th, isn’t entirely made up of generated content. In a Microsoft Design blog post published Wednesday, senior design communications manager Jay Tan admits that “the occasional AI hallucination would rear its head,” meaning the creators had to correct some of the AI output and integrate it with real footage.

“When deciding on which shots within the ad were to be AI generated, the team determined that any intricate movement such as closeups of hands typing on keyboards had to be shot live,” Tan says. “Shots that were quick cuts or with limited motion, however, were prime for co-creation with generative AI tools.”

Microsoft hasn’t specified exactly which shots were generated using AI, though Tan did detail the process. AI tools were first used to generate “a compelling script, storyboards and a pitch deck.” Microsoft’s team then used a combination of written prompts and sample images to get a chatbot to generate text prompts that could be fed into image generators. Those images were iterated on further, edited to correct hallucinations and other errors, and then fed into video generators like Hailuo or Kling. Those are the only specific AI tools named by Tan, with the chatbots and image generators unspecified.

“We probably went through thousands of different prompts, chiseling away at the output little by little until we got what we wanted. There’s never really a one-and-done prompt,” says creative director Cisco McCarthy. “It comes from being relentless.” That makes the process sound like more work than it might have been otherwise, but visual designer Brian Townsend estimates that the team “probably saved 90% of the time and cost it would typically take.”

The process echoes recent comments from Microsoft’s design chief Jon Friedman to my colleague Tom Warren, that AI is going to become one more tool in creatives’ arsenals, rather than replacing them outright. As Friedman puts it, “suddenly the design job is how do you edit?”

Despite the fact that the video has been online for almost three months, there’s little sign that anyone noticed the AI output until now. The ad has a little over 40,000 views on YouTube at the time of writing, and none of the top comments speculate that the video was produced using AI.

Knowing that AI was involved, it’s easy enough to guess where — shots of meeting notes that clearly weren’t hand-written, a Mason jar that’s suspiciously large, the telling AI sheen to it all — but without knowing to look for it, it’s clear that plenty of viewers couldn’t spot the difference. The ad’s quick cuts help hide the AI output’s flaws, but suggest that in the right hands, AI tools are now powerful enough to go unnoticed.

From The Verge via this RSS feed

Microsoft made an ad with generative AI and nobody noticed.

“The ad has a little over 40,000 views on YouTube”

Why would people look up an ad? I’m amazed it got that many views at all.

Guess if your goal is to provide a bunch of 0.5-second shots, then AI is for you.

They’re like 3 frame long shots, and the only consistent part (meaning the part you look at) is the laptop, which isn’t AI generated. So yeah, if you want visual noise to hint at different places that’s short enough that your brain can’t actually register it, AI slop will do.

Disruptive change always starts at the bottom.

Electric steel mills weren’t good for anything but rebar. Integrated mills liked that: no more time wasted making scrap. Then electric mills got better, and integrated mills were only getting high-quality jobs, with fat profit margins. Integrated mills loved that… until their expensive jobs for dwindling customers couldn’t pay the bills. The new method became good enough.

CGI wasn’t good for anything but plastic. Animators considered it a pre-visualization tool for drawing complex perspectives - like the ballroom scene in Beauty and the Beast. Pixar dodged the problem by making a film about plastic. It quickly absolved 2D animators of tedious fiddly metal parts, so they could focus on animals and people. Then Monsters, Inc. had really good fur. And that same year, Final Fantasy: The Spirits Within didn’t exactly nail photorealistic people, but they fuckin’ tried. Disney eventually gave up on 2D animation. Warner did too, and apparently produced a couple of Looney Tunes movies by accident. CGI is good enough.

AI video isn’t good for much besides stock footage. Well. Not good for much you could televise, besides stock footage. Film nerds and animators alike rightly mock the examples, because nobody using this seems to have an artistic bone in their body. But the tech is getting better. ‘Why is wax-dummy Will Smith eating a table made of ramen’ has become ‘that convincing actor seems poorly motivated.’ That’s going to shift instantly when people stop putting in text alone. This nonsense already performs witchcraft going image-to-image, and video-to-video is getting there. Generating from labels and noise is a stupid tech demo gone feral. Have someone act out what you want, and let the robot change who they are and where they are. It’s not like pointing a camera at people is expensive. AI video will be good enough when no-budget fan films with decent pacing can be “denoised” of the parts that look fake. The robot can make your garage-built Star Trek bridge blink and gleam, or turn your back yard into an alien planet. Don’t just ask it for a new TOS episode and sit on your thumbs. New tools make more things possible. You still have to put in the work.