Funny that they’re calling them AI haters when they’re specifically poisoning AI that ignores the do not enter sign. FAFO.

First Albatross, First Out

Fluffy Animal’s Fecal Orifice.

Fair As Fuck Ok?

Sheesh people, it’s “fuck around and find out”. Probably more appropriate in the leopards eating face context but this works enough.

What are you talking about? FAFO obviously stands for “fill asshole full of”. Like FAFO dicks. Or FAFO pennies.

I’m glad you’re here to tell us these things!

AI is the “most aggressive” example of “technologies that are not done ‘for us’ but ‘to us.’”

Well said.

Deployment of Nepenthes and also Anubis (both described as “the nuclear option”) are not hate. It’s self-defense against pure selfish evil, projects are being sucked dry and some like ScummVM could only freakin’ survive thanks to these tools.

Those AI companies and data scrapers/broker companies shall perish, and whoever wrote this headline at arstechnica shall step on Lego each morning for the next 6 months.

Feels good to be on an instance with Anubis

one of the united Nations websites deployed Anubis

Do you have a link to a story of what happened to ScummVM? I love that project and I’d be really upset if it was lost!

Thank you!

Thanks, interesting and brief read!

Very cool, and the mascot is cute too as a nice bonus.

Wait what? I am uninformed, can you elaborate on the ScummVM thing? Or link an article?

From the Fabulous Systems (ScummVM’s sysadmin) blog post linked by Natanox:

About three weeks ago, I started receiving monitoring notifications indicating an increased load on the MariaDB server.

This went on for a couple of days without seriously impacting our server or accessibility–it was a tad slower than usual.

And then the website went down.

Now, it was time to find out what was going on. Hoping that it was just one single IP trying to annoy us, I opened the access log of the day

there were many IPs–around 35.000, to be precise–from residential networks all over the world. At this scale, it makes no sense to even consider blocking individual IPs, subnets, or entire networks. Due to the open nature of the project, geo-blocking isn’t an option either.

The main problem is time. The URLs accessed in the attack are the most expensive ones the wiki offers since they heavily depend on the database and are highly dynamic, requiring some processing time in PHP. This is the worst-case scenario since it throws the server into a death spiral.

First, the database starts to lag or even refuse new connections. This, combined with the steadily increasing server load, leads to slower PHP execution.

At this point, the website dies. Restarting the stack immediately solves the problem for a couple of minutes at best until the server starves again.

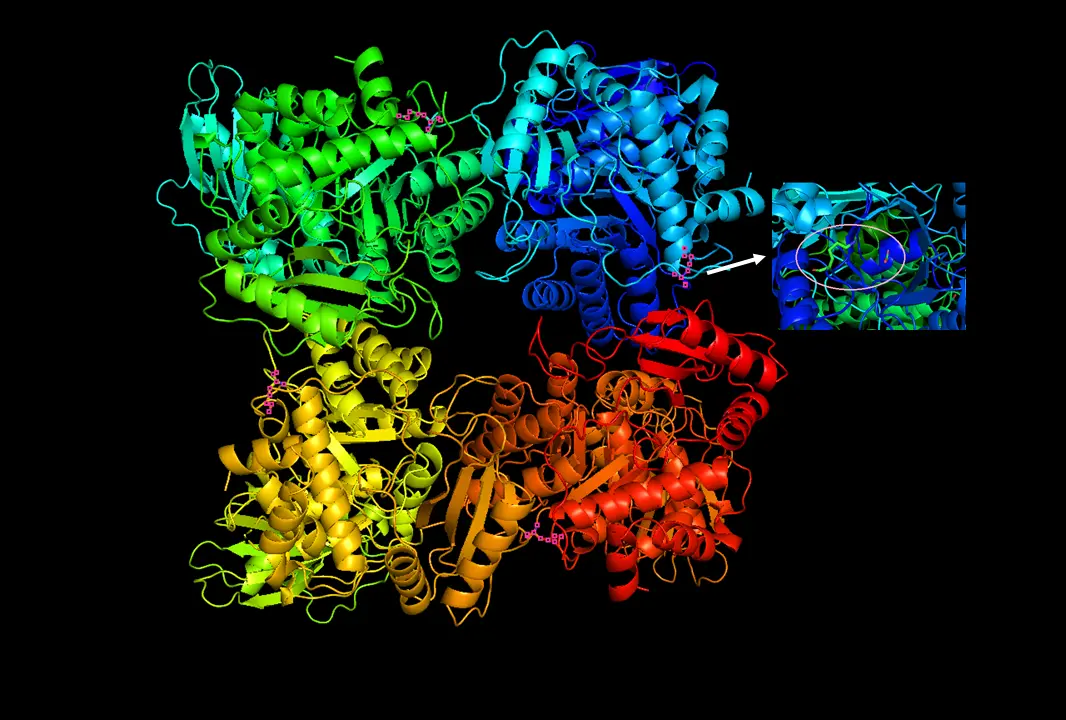

Anubis is a program that checks incoming connections, processes them, and only forwards “good” connections to the web application. To do so, Anubis sits between the server or proxy responsible for accepting HTTP/HTTPS and the server that provides the application.

Many bots disguise themselves as standard browsers to circumvent filtering based on the user agent. So, if something claims to be a browser, it should behave like one, right? To verify this, Anubis presents a proof-of-work challenge that the browser needs to solve. If the challenge passes, it forwards the incoming request to the web application protected by Anubis; otherwise, the request is denied.

As a regular user, all you’ll notice is a loading screen when accessing the website. As an attacker with stupid bots, you’ll never get through. As an attacker with clever bots, you’ll end up exhausting your own resources. As an AI company trying to scrape the website, you’ll quickly notice that CPU time can be expensive if used on a large scale.

I didn’t get a single notification afterward. The server load has never been lower. The attack itself is still ongoing at the time of writing this article. To me, Anubis is not only a blocker for AI scrapers. Anubis is a DDoS protection.

I love that one is named Nepenthes.

It’s so sad we’re burning coal and oil to generate heat and electricity for dumb shit like this.

Wait till you realize this project’s purpose IS to force AI to waste even more resources.

deleted by creator

Here’s a thing - it’s not useless.

What use does an AI get out of scraping pages designed to confuse and mislead it?

Punishment for being stupid & greedy.

That’s war. That has been the nature of war and deterrence policy ever since industrial manufacture has escalated both the scale of deployments and the cost and destructive power of weaponry. Make it too expensive for the other side to continue fighting (or, in the case of deterrence, to even attack in the first place). If the payoff for scraping no longer justifies the investment of power and processing time, maybe the smaller ones will give up and leave you in peace.

Always say please and thank you to your friendly neighbourhood LLM!

deleted by creator

Governments are full of two types: (1) the stupid, and (2) the self-interested. The former doesn’t understand technology, and the latter doesn’t fucking care.

Of course “governments” dropped the ball on regulating AI.

Of all the things governments should regulate, this is probably the least important and ineffective one.

Why?

-

super hard to tell where electricity for certain computing task is coming from. What if I use 100% renewable for ai training offsetting it by using super cheap dirty electricity for other tasks

-

who will audit what electricity is used for anyway? Any computer will have an government sealed rootkit?

-

offshore

-

a million problems that require more attention, from migration, to Healthcare, to economy

-

deleted by creator

I kinda feel like we’re 75% of the way there already, and we gotta be hitting with everything we’ve got if we’re to stand a chance against it…

That’s already happening, how do you want the government to legislate against Russian, Chinese or American actors?

This gives me a little hope.

I mean, we contemplate communism, fascism, this, that, and another. When really, it’s just collective trauma and reactionary behavior, because of the lack of self-awareness and in the world around us. So this could just be synthesized as human stupidity. We’re killing ourselves because we’re too stupid to live.

Dumbest sentiment I read in a while. People, even kids, are pretty much aware of what’s happening (remember Fridays for Future?), but the rich have coopted the power apparatus and they are not letting anyone get in their way of destroying the planet to become a little richer.

Unclear how AI companies destroying the planet’s resources and habitability has any relation to a political philosophy seated in trauma and ignorance except maybe the greed of a capitalist CEO’s whimsy.

The fact that the powerful are willing to destroy the planet for momentary gain bears no reflection on the intelligence or awareness of the meek.

Fucking nihilists

You are, and not the rest of us

what the fuck does this even mean

This might explain why newer AI models are going nuts. Good jorb 👍

It absolutely doesn’t. The only model that has “gone nuts” is Grok, and that’s because of malicious code pushed specifically for the purpose of spreading propaganda.

what models are going nuts?

Not sure if OP can provide sources, but it makes sense kinda? Like AI has been trained on just about every human creation to get it this far, what happens when the only new training data is AI slop?

AI being trained by AI is how you train most models. Man, people here are ridiculously ignorant…

They specifically said “slop”. Maybe you breezed straight past that word in your fury.

Fury? I mean the only slop here are lemmings.

Nice try.

Claude version 4, the openAi mini models, not sure what else

The ars technica article: AI haters build tarpits to trap and trick AI scrapers that ignore robots.txt

AI tarpit 1: Nepenthes

AI tarpit 2: Iocaine

Thank you!!

thanks for the links. the more I read of this the more based it is

Nice … I look forward to the next generation of AI counter counter measures that will make the internet an even more unbearable mess in order to funnel as much money and control to a small set of idiots that think they can become masters of the universe and own every single penny on the planet.

All the while as we roast to death because all of this will take more resources than the entire energy output of a medium sized country.

deleted by creator

It is already!

I will cite the scientific article later when I find it, but essentially you’re wrong.

water != energy, but i’m actually here for the science if you happen to find it.

It can in the sense that many forms of generating power are just some form of water or steam turbine, but that’s neither here nor there.

IMO, the graph is misleading anyway because the criticism of AI from that perspective was the data centers and companies using water for cooling and energy, not individuals using water on an individual prompt. I mean, Microsoft has entered a deal with a power company to restart one of the nuclear reactors on Three Mile Island in order to compensate for the expected cost in energy of their AI. Using their service is bad because it incentivizes their use of so much energy/resources.

It’s like how during COVID the world massively reduced the individual usage of cars for a year and emissions barely budged. Because a single one of the largest freight ships puts out more emissions than every personal car combined annually.

This particular graph is because a lot of people freaked out over “AI draining oceans” that’s why the original paper (I’ll look for it when I have time, I have a exam tomorrow. Fucking higher ed man) made this graph

Asking ChatGPT a question doesn’t take 1 hour like most of these… this is a very misleading graph

This is actually misleading in the other direction: ChatGPT is a particularly intensive model. You can run a GPT-4o class model on a consumer mid to high end GPU which would then use something in the ballpark of gaming in terms of environmental impact.

You can also run a cluster of 3090s or 4090s to train the model, which is what people do actually, in which case it’s still in the same range as gaming. (And more productive than 8 hours of WoW grind while chugging a warmed up Nutella glass as a drink).

Models like Google’s Gemma (NOT Gemini these are two completely different things) are insanely power efficient.

I didn’t even say which direction it was misleading, it’s just not really a valid comparison to compare a single invocation of an LLM with an unrelated continuous task.

You’re comparing Volume of Water with Flow Rate. Or if this was power, you’d be comparing Energy (Joules or kWh) with Power (Watts)

Maybe comparing asking ChatGPT a question to doing a Google search (before their AI results) would actually make sense. I’d also dispute those “downloading a file” and other bandwidth related numbers. Network transfers are insanely optimized at this point.

I can’t really provide any further insight without finding the damn paper again (academia is cooked) but Inference is famously low-cost, this is basically “average user damage to the environment” comparison, so for example if a user chats with ChatGPT they gobble less water comparatively than downloading 4K porn (at least according to this particular paper)

As with any science, statistics are varied and to actually analyze this with rigor we’d need to sit down and really go down deep and hard on the data. Which is more than I intended when I made a passing comment lol

What about training an AI?

According to https://arxiv.org/abs/2405.21015

The absolute most monstrous, energy guzzling model tested needed 10 MW of power to train.

Most models need less than that, and non-frontier models can even be trained on gaming hardware with comparatively little energy consumption.

That paper by the way says there is a 2.4x increase YoY for model training compute, BUT that paper doesn’t mention DeepSeek, which rocked the western AI world with comparatively little training cost (2.7 M GPU Hours in total)

Some companies offset their model training environmental damage with renewable and whatever bullshit, so the actual daily usage cost is more important than the huge cost at the start (Drop by drop is an ocean formed - Persian proverb)

we’re rolling out renewables at like 100x the rate of ai electricity use, so no need to worry there

Yeah, at this rate we’ll be just fine. (As long as this is still the Reagan administration.)

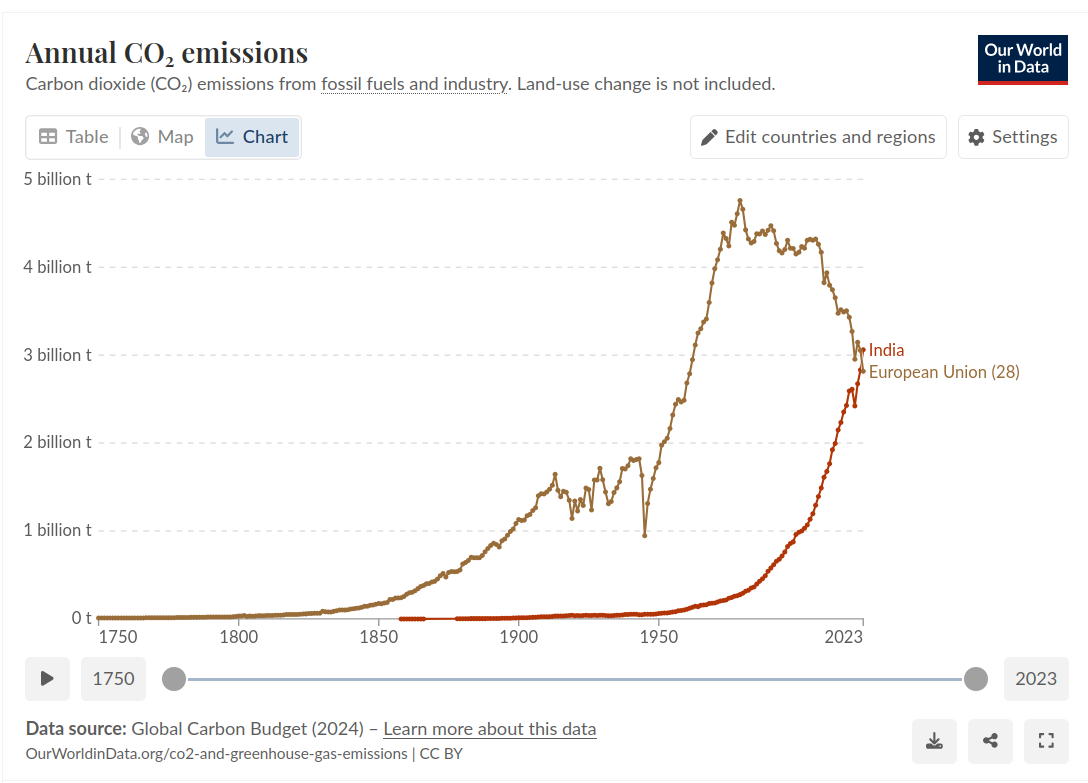

yep the biggest worry isn’t AI, it’s India

https://www.worldometers.info/co2-emissions/india-co2-emissions/

The west is lowering its co2 output while India is slurping up all the co2 we’re saving:

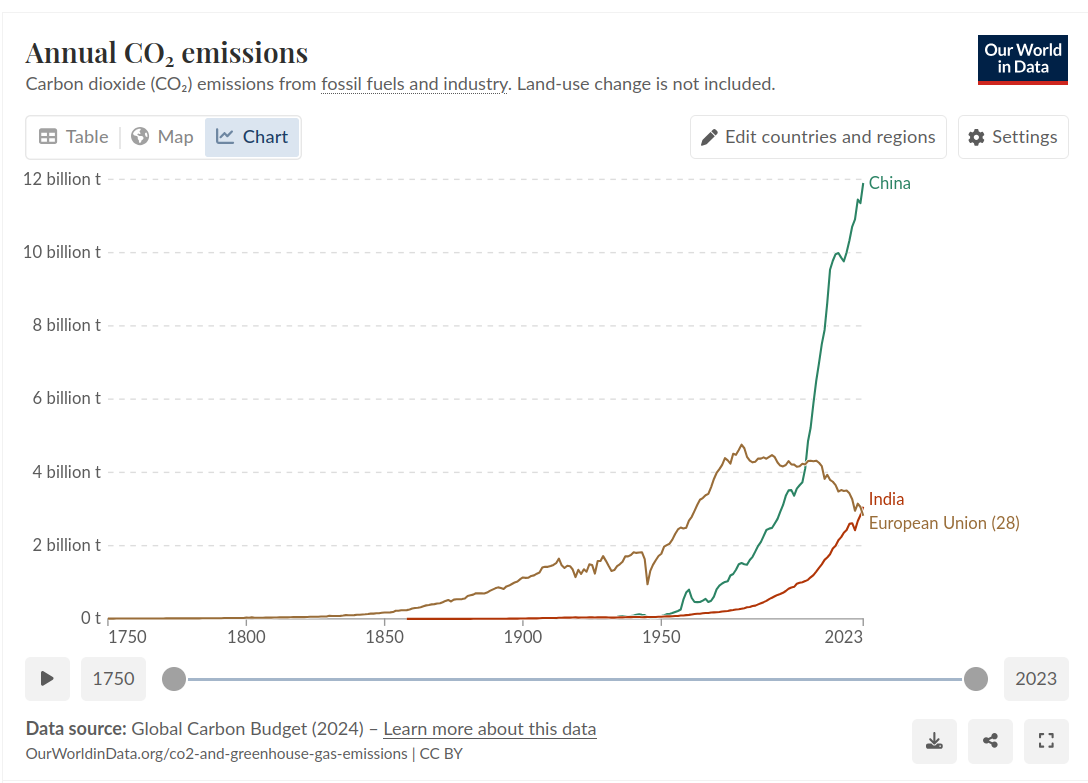

This doesn’t include China of course, the most egregious of the co2 emitters

AI is not even a tiny blip on that radar, especially as AI is in data centres and devices which runs on electricity so the more your country goes to renewables the less co2 impacting it is over time

Could you add the US to the graphs, as EU and West are hardly synonymous - even as it descends into Trumpgardia.

China has that massive rate because it manufactures for the US, the US itself is a huge polluter for military and luxury NOT manufacturing

Still the second largest CO2 emitter, so it’d make sense to put it on for the comparison.

Now break that shit down per capita, and also try and account for the fact that China is a huge manufacturing hub for the entire world’s consumption, you jackass.

India has extremely low historical co2 output, crakkker

I’ve been thinking about this for a while. Consider how quick LLM’s are.

If the amount of energy spent powering your device (without an LLM), is more than using an LLM, then it’s probably saving energy.

In all honesty, I’ve probably saved over 50 hours or more since I started using it about 2 months ago.

Coding has become incredibly efficient, and I’m not suffering through search-engine hell any more.

Edit:

Lemmy when someone uses AI to get a cheap, fast answer: “Noooo, it’s killing the planet!”

Lemmy when someone uses a nuclear reactor to run Doom: Dark Ages on a $20,000 RGB space heater: “Based”

Are you using your PC less hours per day?

Yep, more time for doing home renovations.

Just writing code uses almost no energy. Your PC should be clocking down when you’re not doing anything. 1GHz is plenty for text editing.

Does ChatGPT (or whatever LLM you use) reduce the number of times you hit build? Because that’s where all the electricity goes.

Except that half the time I dont know what the fuck I’m doing. It’s normal for me to spend hours trying to figure out why a small config file isnt working.

That’s not just text editing, that’s browsing the internet, referring to YouTube videos, or wallowing in self-pity.

That was before I started using gpt.

It sounds like it does save you a lot of time then. I haven’t had the same experience, but I did all my learning to program before LLMs.

Personally I think the amount of power saved here is negligible, but it would actually be an interesting study to see just how much it is. It may or may not offset the power usage of the LLM, depending on how many questions you end up asking and such.

It doesn’t always get the answers right, and I have to re-feed its broken instructions back into itself to get the right scripts, but for someone with no official coding training, this saves me so much damn time.

Consider I’m juggling learning Linux starting from 4 years ago, along with python, rust, nixos, bash scripts, yaml scripts, etc.

It’s a LOT.

For what it’s worth, I dont just take the scripts and paste them in, I’m always trying to understand what the code does, so I can be less reliant as time goes on.

What kind of code are you writing that your CPU goes to sleep? If you follow any good practices like TDD, atomic commits, etc, and your code base is larger than hello world, your PC will be running at its peak quite a lot.

Example: linting on every commit + TDD. You’ll be making loads of commits every day, linting a decent code base will definitely push your CPU to 100% for a few seconds. Running tests, even with caches, will push CPU to 100% for a few minutes. Plus compilation for running the app, some apps take hours to compile.

In general, text editing is a small part of the developer workflow. Only junior devs spend a lot of time typing stuff.

Anything that’s per-commit is part of the “build” in my opinion.

But if you’re running a language server and have stuff like format-on-save enabled, it’s going to use a lot more power as you’re coding.

But like you said, text editing is a small part of the workflow, and looking up docs and browsing code should barely require any CPU, a phone can do it with fractions of a Watt, and a PC should be underclocking when the CPU is underused.

What do you mean “build”? It’s part of the development process.

We’re racing towards the Blackwall from Cyberpunk 2077…

Already there. The blackwall is AI-powered and Markov chains are most definitely an AI technique.

I’m so happy to see that ai poison is a thing

Don’t be too happy. For every such attempt there are countless highly technical papers on how to filter out the poisoning, and they are very effective. As the other commenter said, this is an arms race.

So we should just give up? Surely you don’t mean that.

I don’t think they meant that. Probably more like

“Don’t upload all your precious data carelessly thinking it’s un-stealable just because of this one countermeasure.”

Which of course, really sucks for artists.

I suppose this will become an arms race, just like with ad-blockers and ad-blocker detection/circumvention measures.

There will be solutions for scraper-blockers/traps. Then those become more sophisticated. Then the scrapers become better again and so on.I don’t really see an end to this madness. Such a huge waste of resources.

there is an end: you legislate it out of existence. unfortunately the US politicians instead are trying to outlaw any regulations regarding AI instead. I’m sure it’s not about the money.

the rise of LLM companies scraping internet is also, I noticed, the moment YouTube is going harsher against adblockers or 3rd party viewer.

Piped or Invidious instances that I used to use are no longer works, did so may other instances. NewPipe have been broken more frequently. youtube-dl or yt-dlp sometimes cannot fetch higher resolution video. and so sometimes the main youtube side is broken on Firefox with ublock origin.

Not just youtube but also z-library, and especially sci-hub & libgen also have been harder to use sometimes.

Well, the adblockers are still wining, even on twitch where the ads como from the same pipeline as the stream, people made solutions that still block them since ublock origin couldn’t by itself.

What do you use to block twitch ads? With UBO I still get the occasional ad marathon

https://github.com/pixeltris/TwitchAdSolutions

I use the video swap one.

Madness is right. If only we didn’t have to create these things to generate dollar.

I feel like the down-vote squad misunderstood you here.

I think I agree: If people made software they actually wanted , for human people , and less for the incentive of “easiest way to automate generation of dollarinos.” I think we’d see a lot less sophistication and effort being put into such stupid things.

These things are made by the greedy, or by employees of the greedy. Not everyone working on this stuff is an exploited wagie, but also this nonsense-ware is where “market demand” currently is.

Ever since the Internet put on a suit and tie and everything became abou real-life money-sploitz, even malware is boring anymore.

New dangerous exploit? 99% chance it’s just another twist on a crypto-miner or ransomware.

Could you imagine a world where word of mouth became the norm again? Your friends would tell you about websites, and those sites would never show on search results because crawlers get stuck.

No they wouldn’t. I’m guessing you’re not old enough to remember a time before search engines. The public web dies without crawling. Corporations will own it all you’ll never hear about anything other than amazon or Walmart dot com again.

Nope. That isn’t how it worked. You joined message boards that had lists of web links. There were still search engines, but they were pretty localized. Google was also amazing when their slogan was “don’t be evil” and they meant it.

I was there. People carried physical notepads with URLs, shared them on BBS’, or other forums. It was wild.

There was also “circle banners” of websites that would link to each others… And then off course “stumble upon”…

Yes! Web rings!

I forgot web rings! Also the crazy all centered Geocities websites people made. The internet was an amazing place before the major corporations figured it out.

No. Only very selective people joined message boards. The rest were on AOL, compact or not at all. You’re taking a very select group of.people and expecting the Facebook and iPad generations to be able to do that. Not going to happen. I also noticed some people below talking about things like geocities and other minor free hosting and publishing site that are all gone now. They’re not coming back.

Yep, those things were so rarely used … sure. You are forgetting that 99% of people knew nothing about computers when this stuff came out, but people made themselves learn. It’s like comparing Reddit and Twitter to a federated alternative.

Also, something like geocities could easily make a comeback if the damn corporations would stop throwing dozens of pop-ups, banners, and sidescrolls on everything.

And 99% of people today STILL don’t know anything about computers. Go ask those same people simply “what is a file” they won’t know. Lmao. Geocities could come back if corporations stop advertising. Do you even hear yourself.

That would be terrible, I have friends but they mostly send uninteresting stuff.

Fine then, more cat pictures for me.

Better yet. Share links to tarpits with your non-friends and enemies

It’d be fucking awful - I’m a grown ass adult and I don’t have time to sit in IRC/fuck around on BBS again just to figure out where to download something.

There used to be 3 or 4 brands of, say, lawnmowers. Word of mouth told us what quality order them fell in. Everyone knew these things and there were only a few Ford Vs. Chevy sort of debates.

Bought a corded leaf blower at the thrift today. 3 brands I recognized, same price, had no idea what to get. And if I had had the opportunity to ask friends or even research online, I’d probably have walked away more confused. For example; One was a Craftsman. “Before, after or in-between them going to shit?”

Got off topic into real-world goods. Anyway, here’s my word-of-mouth for today: Free, online Photoshop. If I had money to blow, I’d drop the $5/mo. for the “premium” service just to encourage them. (No, you’re not missing a thing using it free.)

Removed by mod

How do you know that’s a bot please? Is it specifically a hot advertising that online photos hop equivalent? Is it a real software or scam? The whole approach is intriguing to me

Edit: I Will assume honesty in this instance. It’s because they’re advertising something in a very particular tone, to match what some Amerikaanse consider common language.

Normal people don’t do that.

I guess this is marketing. But … Why would you use anything besides GIMP?

After a Decade of Waiting, GIMP 3.0.0 is Finally Here!

https://news.itsfoss.com/gimp-3-release/

This is surely trivial to detect. If the number of pages on the site is greater than some insanely high number then just drop all data from that site from the training data.

It’s not like I can afford to compete with OpenAI on bandwidth, and they’re burning through money with no cares already.

Yeah sure, but when do you stop gathering regularly constructed data, when your goal is to grab as much as possible?

Markov chains are an amazingly simple way to generate data like this, and a little bit of stacked logic it’s going to be indistinguishable from real large data sets.

Imagine the staff meeting:

You: we didn’t gather any data because it was poisoned

Corposhill: we collected 120TB only from harry-potter-fantasy-club.il !!

Boss: hmm who am I going to keep…

The boss fires both, “replaces” them for AI, and tries to sell the corposhill’s dataset to companies that make AIs that write generic fantasy novels

AI won’t see Markov chains - that trap site will be dropped at the crawling stage.

You can compress multiple TB of nothing with the occasional meme down to a few MB.

When I deliver it as a response to a request I have to deliver the gzipped version if nothing else. To get to a point where I’m poisoning an AI I’m assuming it’s going to require gigabytes of data transfer that I pay for.

At best I’m adding to the power consumption of AI.

I wonder, can I serve it ads and get paid?

I wonder, can I serve it ads and get paid?

…and it’s just bouncing around and around and around in circles before its handler figures out what’s up…

Heehee I like where your head’s at!

“Markov Babble” would make a great band name

Their best album was Infinite Maze.

Some details. One of the major players doing the tar pit strategy is Cloudflare. They’re a giant in networking and infrastructure, and they use AI (more traditional, nit LLMs) ubiquitously to detect bots. So it is an arms race, but one where both sides have massive incentives.

Making nonsense is indeed detectable, but that misunderstands the purpose: economics. Scraping bots are used because they’re a cheap way to get training data. If you make a non zero portion of training data poisonous you’d have to spend increasingly many resources to filter it out. The better the nonsense, the harder to detect. Cloudflare is known it use small LLMs to generate the nonsense, hence requiring systems at least that complex to differentiate it.

So in short the tar pit with garbage data actually decreases the average value of scraped data for bots that ignore do not scrape instructions.

The fact the internet runs on lava lamps makes me so happy.

Nice one, but Cloudflare do it too.

The Arstechnica article in the OP is about 2 months newer than Cloudflare’s tool

Such a stupid title, great software!