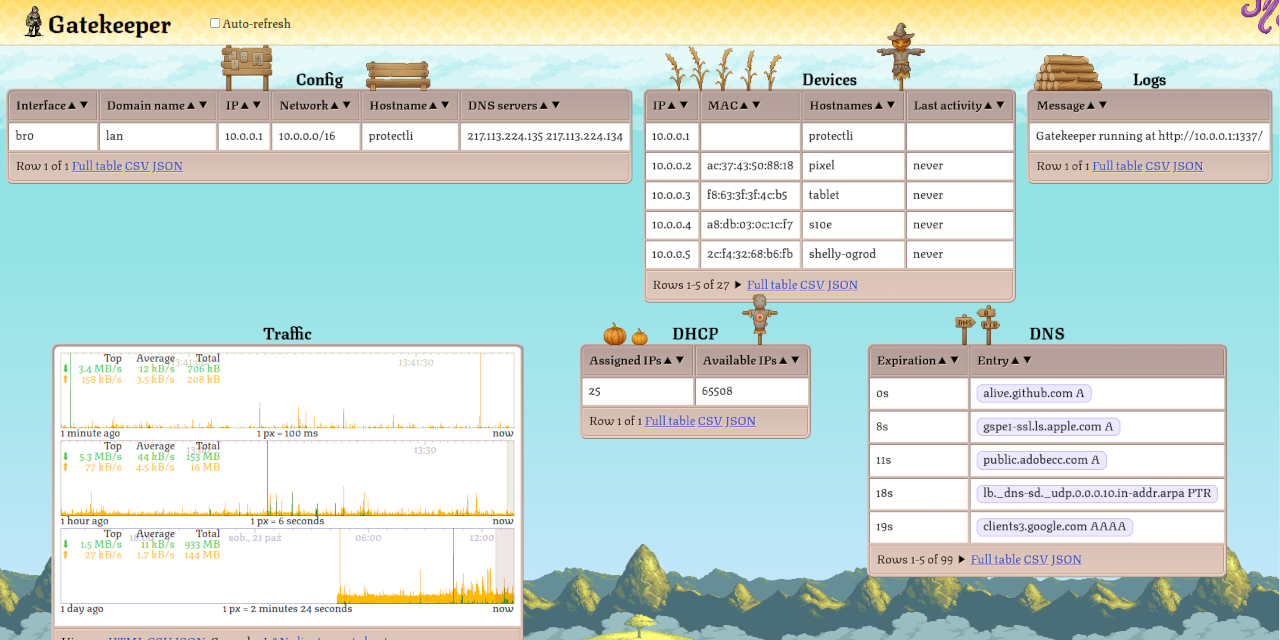

Hi all home network administrators :) Haven’t posted anything here since June, when I told you about Gatekeeper 1.1.0. Back then it was a pretty bare-bones (and maybe slightly buggy) DNS + DHCP server with a web UI with a list of LAN clients. Back at 1.1.0 Gatekeeper didn’t even configure your LAN interface or set up NAT rules. It used to be something like dnsmasq - but with a web UI.

I’ve been improving it for the past couple of months - simplified it a lot, fixed bugs, optimized internals, improved the looks & added a bunch of quality-of-life features. Now it’s a program that turns any Linux machine into a home internet gateway. It’s closer to OpenWRT in a single executable file.

One big thing that happened was that I attempted to replace the ubiquitous nft-based NAT (where the kernel processes the packets according to a rule-based script) with nfqueue (where the kernel sends each packet to userspace so that it can be altered arbitrarily). I expected this to be buggy & slow. Well, it was hell to implement but it turns out that it’s not slow at all. On the debug build my router can push 60GiB+ / second over TCP (over virtualized ethernet of course). And I’m not even using any io_uring magic yet. Quite honestly I don’t even know how to explain it, since it’s above the peak DDR4 transfer rate (I’m running dual channel DDR4-3200). Maybe the pages are not flushed to RAM & are only exchanged through CPU caches? Anyway I’m pretty excited because userspace access to all traffic opens a lot of new possibilities…
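For anyone who wants to check the DDR4 comparison, here is the back-of-the-envelope arithmetic. It only assumes the standard figures for DDR4-3200 (3200 mega-transfers per second, a 64-bit bus per channel) and says nothing about how the measurement itself was taken:

```python
# Theoretical peak bandwidth of dual-channel DDR4-3200.
TRANSFERS_PER_SECOND = 3200 * 10**6  # DDR4-3200: 3200 mega-transfers per second
BYTES_PER_TRANSFER = 8               # each channel has a 64-bit data bus
CHANNELS = 2                         # dual channel

peak_bytes_per_second = TRANSFERS_PER_SECOND * BYTES_PER_TRANSFER * CHANNELS
peak_gib_per_second = peak_bytes_per_second / 2**30

print(f"peak: {peak_bytes_per_second / 1e9:.1f} GB/s = {peak_gib_per_second:.1f} GiB/s")
```

So the theoretical ceiling is roughly 47.7 GiB/s, and a measured 60 GiB/s would indeed be above it.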

The first thing is NAT. By default Linux only supports symmetric NAT, which is pretty secure but is also fairly hard for peer-to-peer protocols to pierce. There are some patches that make Linux full-cone, but they are not part of the mainline kernel and are not expected to become one (at least according to the OpenWRT forums). Now, since we have access to every packet, we can take care of this ourselves. We can create a couple of hash tables to track connections and alter the source & destination IPs, recomputing the checksums where necessary. Suddenly we can have full-cone NAT, on any Linux machine, without patching the kernel! At runtime it’s not as configurable as netfilter + conntrack but it’s a whole lot simpler - since now we can use a general purpose programming language rather than netfilter rules.
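To make the idea concrete, here is a minimal sketch of the "couple of hash tables" approach in Python. This is not Gatekeeper's actual code: the class name `FullConeNat`, the starting port 40000 and the tuple layout are all made up for illustration, and there is no expiry of old mappings. The checksum helper follows the incremental update formula from RFC 1624.

```python
from dataclasses import dataclass, field


@dataclass
class FullConeNat:
    """Hypothetical full-cone NAT table: one WAN port per LAN (ip, port)."""
    wan_ip: str
    next_port: int = 40000                       # arbitrary starting port (assumption)
    out_map: dict = field(default_factory=dict)  # (lan_ip, lan_port) -> wan_port
    in_map: dict = field(default_factory=dict)   # wan_port -> (lan_ip, lan_port)

    def outbound(self, src_ip, src_port, dst_ip, dst_port):
        """Rewrite the source of a LAN -> WAN packet."""
        key = (src_ip, src_port)
        wan_port = self.out_map.get(key)
        if wan_port is None:
            # The mapping does not depend on the destination: that is
            # what makes the NAT "full-cone".
            wan_port = self.next_port
            self.next_port += 1
            self.out_map[key] = wan_port
            self.in_map[wan_port] = key
        return (self.wan_ip, wan_port, dst_ip, dst_port)

    def inbound(self, src_ip, src_port, dst_ip, dst_port):
        """Rewrite the destination of a WAN -> LAN packet, or return None to drop."""
        target = self.in_map.get(dst_port)
        if target is None:
            return None
        # Any remote host may use an existing mapping (no per-peer filtering).
        return (src_ip, src_port, *target)


def ones_complement_add(a, b):
    """16-bit one's complement addition with end-around carry."""
    s = a + b
    return (s & 0xFFFF) + (s >> 16)


def checksum(words):
    """Full Internet checksum over a list of 16-bit words."""
    s = 0
    for w in words:
        s = ones_complement_add(s, w)
    return ~s & 0xFFFF


def incremental_checksum(old_csum, old_word, new_word):
    """RFC 1624 eqn. 3: HC' = ~(~HC + ~m + m') for one changed 16-bit word."""
    s = ones_complement_add(~old_csum & 0xFFFF, ~old_word & 0xFFFF)
    s = ones_complement_add(s, new_word)
    return ~s & 0xFFFF
```

With this, two connections from the same LAN socket to different servers share one WAN port, and an unrelated remote host can reach the LAN socket through that port. The incremental update means only the rewritten address and port words have to be touched, rather than re-summing the whole packet.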

Another cool feature that we can now have is truly realtime traffic graphs. A summary of each packet traversing the network boundary is immediately streamed to the connected web UIs over WebSocket. This is way faster than the alternatives based on reading some /proc/ or /sys/ files every couple of seconds. Gatekeeper also aggregates the traffic from the last 24 hours between each pair of hosts into a histogram with 100ms resolution and allows clients to view it, scroll through it, compute stats, and download it as JSON or CSV. You can retroactively check which device talked with which IP and at what time, with unprecedented resolution.
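A rough sketch of what such a per-pair histogram could look like is below. It is only an illustration of the bucketing idea, not how Gatekeeper stores things internally: the `TrafficHistogram` class, the sparse dict-of-dicts layout and the CSV column names are my own assumptions.

```python
from collections import defaultdict

BUCKET_MS = 100                              # histogram resolution
WINDOW_MS = 24 * 60 * 60 * 1000              # keep the last 24 hours
BUCKETS_PER_WINDOW = WINDOW_MS // BUCKET_MS  # 864,000 buckets per host pair


class TrafficHistogram:
    """Hypothetical sparse histogram: (src, dst) -> {bucket index -> bytes}."""

    def __init__(self):
        self.buckets = defaultdict(lambda: defaultdict(int))

    def record(self, src, dst, timestamp_ms, num_bytes):
        """Add one packet's size to the 100 ms bucket it falls into."""
        self.buckets[(src, dst)][timestamp_ms // BUCKET_MS] += num_bytes

    def expire(self, now_ms):
        """Drop buckets that are older than the 24 hour window."""
        oldest = (now_ms - WINDOW_MS) // BUCKET_MS
        for pair in list(self.buckets):
            series = self.buckets[pair]
            for bucket in [b for b in series if b < oldest]:
                del series[bucket]
            if not series:
                del self.buckets[pair]

    def to_csv(self, src, dst):
        """Export one host pair as CSV, one row per non-empty bucket."""
        series = self.buckets.get((src, dst), {})
        lines = ["bucket_start_ms,bytes"]
        for bucket in sorted(series):
            lines.append(f"{bucket * BUCKET_MS},{series[bucket]}")
        return "\n".join(lines)
```

Storing only non-empty buckets keeps idle host pairs cheap, even though a full 24 hour window at 100ms resolution has 864,000 slots per pair.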

My next step is going to be capturing the traffic that goes through into a 5MB circular buffer (a separate buffer for each LAN client) & downloading it as Wireshark-compatible pcap files. Computationally it’s almost free. IoT devices usually don’t transmit a lot of data. 5MB may actually cover months of traffic for the simpler ones. If any device did anything weird, it will finally be possible to investigate it - even after it already happened.
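Since this feature is not implemented yet, the following is just a sketch of how a byte-bounded ring buffer with pcap export might work. The `PacketRing` class is hypothetical. The header layouts are the classic libpcap file format (little-endian, microsecond timestamps, link type 1 = Ethernet).

```python
import struct
from collections import deque

# Classic pcap global header: magic, version 2.4, timezone offset,
# timestamp accuracy, snapshot length, link type (1 = Ethernet).
PCAP_GLOBAL_HEADER = struct.pack("<IHHiIII", 0xA1B2C3D4, 2, 4, 0, 0, 65535, 1)


class PacketRing:
    """Hypothetical per-client capture buffer bounded by total size in bytes."""

    def __init__(self, capacity_bytes=5 * 1024 * 1024):
        self.capacity = capacity_bytes
        self.used = 0
        self.records = deque()

    def add(self, timestamp, frame):
        """Store one Ethernet frame, evicting the oldest ones when full."""
        sec = int(timestamp)
        usec = int((timestamp - sec) * 1_000_000)
        # Per-packet header: seconds, microseconds, captured length, original length.
        record = struct.pack("<IIII", sec, usec, len(frame), len(frame)) + frame
        self.records.append(record)
        self.used += len(record)
        while self.used > self.capacity and self.records:
            self.used -= len(self.records.popleft())

    def to_pcap(self):
        """Return the buffered packets as the bytes of a .pcap file."""
        return PCAP_GLOBAL_HEADER + b"".join(self.records)
```

Writing the result of `to_pcap()` to a file should give something Wireshark can open directly. Evicting whole records (rather than raw bytes) keeps the file well-formed no matter where the buffer wraps.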

Long-term Gatekeeper could do even more. For example, it could offer assistance in setting up TLS MITM, or perform some online grouping / analysis on the live traffic.

I still have some groundwork to do - like automatically setting up Wireless LAN and bridging multiple interfaces into a single one - and I think there may be a bug that causes crashes when checking GitHub for updates. But I wanted to share it sooner rather than later. I hope that despite its imperfections some of you will find it useful!

(I’ve had some issues with cross-instance posting. This is attempt #6)

In terms of types of users I agree with what you’re saying but I also think that there are some shades of gray in between. There are people who love to tinker and would manually configure every service on their router, compiling everything from scratch, reading manuals, understanding how things work (they’ll probably choose `dnsmasq`, `systemd-networkd`, `grafana` over Gatekeeper). In my experience this approach is pretty exciting for the first couple of years & then gradually becomes more and more troublesome. I think Gatekeeper’s target audience is the people who would like to take ownership of their network (and have some theoretical understanding) but don’t want to fully dive into the rabbit hole and configure everything manually.

In terms of problem solved: I agree that Gatekeeper solves a similar problem. I think it’s different from those projects because it tightly integrates all of the home gateway functions. While this goes against the Unix philosophy, I think it creates some advantages:

Functions of home routers are conventionally spread out over many components (kernel & a bunch of independently developed userspace tools) which talk to each other. Whenever we want to create a cross-cutting feature (for example live traffic graphs) we must coordinate work between many components. We need to create kernel APIs to notify userspace apps about new traffic, create userspace apps to maintain a record of this traffic & a web interface to display it. It’s difficult organizationally. In a monolith, where all code is in one place, such cross-cutting features can be developed with less friction.

From the performance point of view the conventional approach is also less efficient. The tools must talk to each other, quite often through files (logs & databases). This wears down SSDs & causes CPU load that could otherwise be avoided. A tightly integrated monolith needs to write files only periodically (if ever) - because all data can be exchanged through RAM.

From the complexity standpoint the conventional approach is also not great because each of the tools needs to know how to talk with the others. This is usually done by the administrator, who configures every service according to its manual. When everything is built together as a monolith, things can “just work” and no configuration is necessary.

Edit: Please don’t be offended by my verbosity. From your question I see that you know this stuff already but I’m also writing this for the fresh “selfhosted” audience :)

Well explained. Thanks for spelling it out.

Thanks for the explanation, that really helped! I will follow this project!