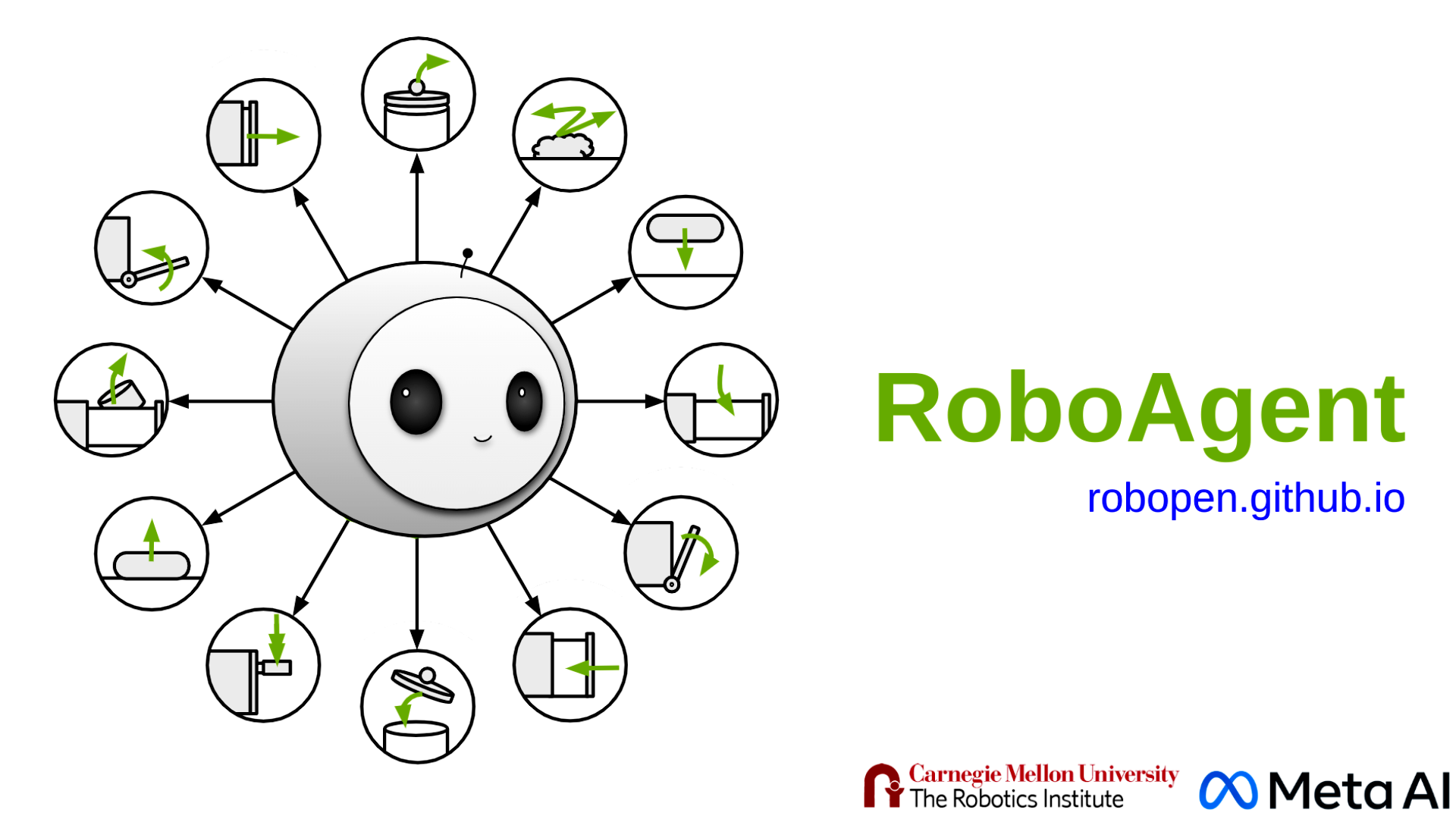

I’m actually working on this problem right now for my master’s capstone project, and I’m almost done with it. Given a simple objective like “I’m thirsty”, I can have it generate a series of steps to fetch me something, and then, in simulation, fetch me a drink or search rooms that might have one, contextually knowing, for example, that the kitchen is a great spot to check.
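For anyone curious what “contextually knowing the kitchen is a great spot to check” can boil down to in its very simplest form, here is a toy sketch. It is not my capstone’s actual implementation. It assumes a hand-written table of room priors (`ROOM_PRIORS`) and a need-to-item table (`SATISFIES`), both hypothetical; an LLM-based planner would infer that kind of context itself rather than read it from a table.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical, hand-written priors: how likely each room is to hold
# something that satisfies a need. Illustrative values only.
ROOM_PRIORS: dict[str, dict[str, float]] = {
    "thirsty": {"kitchen": 0.70, "dining room": 0.20, "living room": 0.08, "bedroom": 0.02},
}

# Hypothetical mapping from a need to the items that would satisfy it.
SATISFIES: dict[str, set[str]] = {
    "thirsty": {"water", "juice", "soda"},
}


@dataclass
class Step:
    action: str  # "navigate", "search", or "fetch"
    target: str  # a room name or an item name


def plan_search(need: str) -> list[Step]:
    """Decompose a need into navigate/search steps, most likely room first."""
    scores = ROOM_PRIORS.get(need, {})
    steps: list[Step] = []
    for room in sorted(scores, key=scores.get, reverse=True):
        steps.append(Step("navigate", room))
        steps.append(Step("search", room))
    return steps


def execute(need: str, world: dict[str, list[str]]) -> tuple[Optional[str], list[Step]]:
    """Run the plan in a toy world (room -> items). Stop at the first matching
    item and return it (or None) along with the steps actually taken."""
    wanted = SATISFIES.get(need, set())
    taken: list[Step] = []
    for step in plan_search(need):
        taken.append(step)
        if step.action == "search":
            for item in world.get(step.target, []):
                if item in wanted:
                    taken.append(Step("fetch", item))
                    return item, taken
    return None, taken


world = {"kitchen": ["pan"], "dining room": ["juice"], "bedroom": ["water"]}
item, steps = execute("thirsty", world)
print(item)  # juice
```

With that toy world, the robot checks the kitchen first, finds nothing drinkable, moves on to the dining room and fetches the juice, never visiting the bedroom. The hard part in practice is everything this sketch hand-waves: producing the priors from context and turning “navigate” and “fetch” into real motion.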

There’s also a lot of research into using the latest advances in LLM reasoning and contextual awareness to build better, more capable embodied AI. I wrote a blog post covering many of the big advancements here.

Outside of this, I’ve also worked at various robotics startups for the past five years, though primarily writing data pipelines and control systems for robot fleets. With that experience in mind, I’d say we are many years away from this being a viable product, but maybe not ten years away. Maybe.

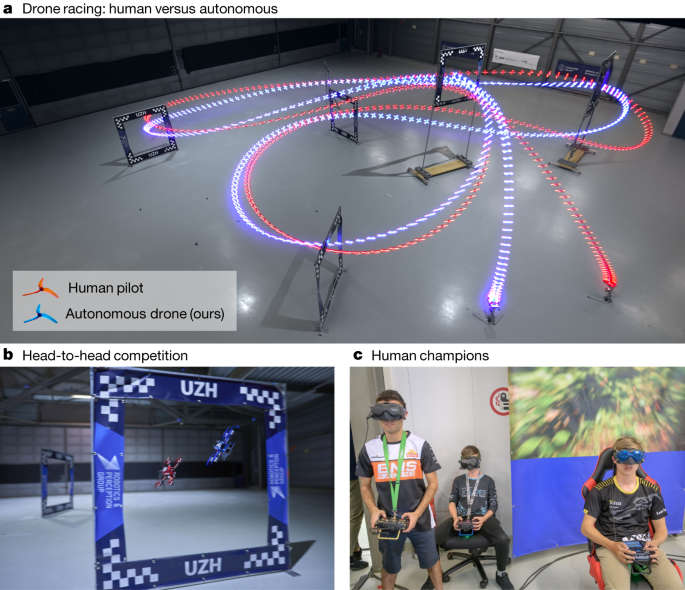

Boston Dynamics’ robots are works of art, the pinnacle of engineering, but it’s all designed movement. By this I mean that the control systems and movement plans are built and designed by experts in their field. It’s not quite as simple as “go from A to B and do some parkour on the way”. There’s a very large gap between “what is mechanically possible to do” and “just let the robot figure out how to do that”.

Mechanically, the hardware is ahead of the software when it comes to manipulation and kinodynamic planning.