If they were just talking about Reddit, I’d assume something dodgy was going on connected with the IPO. But Quora is supposedly back from the dead too… Am I missing something glaringly obvious here?

Election is coming up, all Russian bots

It’s all kinds of bots: Russian, Chinese, Liberal, Conservative, but most of all it’s Reddit’s own bots, meant to inflate traffic stats ahead of the IPO.

So what you’re saying is we should short the stock to make it big

Could even open a lawsuit and force reddit to investigate how much of their traffic is bots, immediately after the IPO

This is not financial advice… typically these things balloon fast, and maybe stay up a week or two before anyone buying is done buying and the price collapses to the point informed investors want in. You can also have people doing price support early (“a squid on the bid”) to avoid a depressing price collapse in the opening phase, but that’s something you have to feel from experience.

Judging by the trending posts, it’s mostly us liberal bots :).

And Israeli bots (probably IDF and Pro-Israelis too)

Seriously I just discovered this. Go search any random Gaza/Palestine/Israel post you will see -50 for someone saying “Israel has killed children and should stop killing children” and like +300 for “this article is so biased it’s taking about Israeli attacks but no mention of how Hamas is the real cause of this death”.

I’m not even going to bother linking, because I want people to see for themselves rather than have it look like I’m selecting the worst of it.

yeah but that’s sadly how a lot of real people think on Reddit anyway.

It probably has a lot to do with their IPO as well. They want to look like they are doing well ahead of that.

That tired but always working deflection. Let me get a little creative. I’m pretty sure it’s underground blind mole rats this time.

theys r comin 4r us, I’m telling ya, mark my wurds. ya’ll bein minefukd while yous wer sleepin

How’s my russian? did I do it right?

Removed by mod

Mfers still believe in Russiagate despite everything… It’s time to accept they’re not Russian trolls, your country is just full of fascists.

Did something come out that disproves the existence of russian bots in social media?

- Washington Post: Russian trolls on Twitter had little influence on 2016 voters

- Jacobin: It Turns Out Hillary Clinton, Not Russian Bots, Lost the 2016 Election

- Matt Taibbi: Move Over, Jayson Blair: Meet Hamilton 68, the New King of Media Fraud. The Twitter Files reveal that one of the most common news sources of the Trump era was a scam, making ordinary American political conversations look like Russian spywork

- Jacobin: Why the Twitter Files Are in Fact a Big Deal

- MSNBC Repeats Hamilton 68 Lies 279 Times in 11 Minutes

- Chris Hedges: Why Russiagate Won’t Go Away

From the Washington Post piece:

But the study doesn’t go so far as to say that Russia had no influence on people who voted for President Donald Trump.

- It doesn’t examine other social media, like the much-larger Facebook.

- Nor does it address Russian hack-and-leak operations. Another major study in 2018 by University of Pennsylvania communications professor Kathleen Hall Jamieson suggested those probably played a significant role in the 2016 race’s outcome.

- Lastly, it doesn’t suggest that foreign influence operations aren’t a threat at all.

And

“Despite these consistent findings, it would be a mistake to conclude that simply because the Russian foreign influence campaign on Twitter was not meaningfully related to individual-level attitudes that other aspects of the campaign did not have any impact on the election, or on faith in American electoral integrity,” the report states.

The overwhelmingly more significant threat is that US corporate social media are integrated with the propaganda machinery of the US government and the capitalist class. The purpose of things like Alliance for Securing Democracy’s Hamilton 68 and the Disinformation Governance Board is not to protect us from foreign influence but for the “intelligence community” to inject its own influence.

- Facebook Partners With Hawkish Atlantic Council, a NATO Lobby Group, to “Protect Democracy”

- Jessica Ashooh: The taming of Reddit and the National Security State Plant tabbed to do it

- A Reddit AMA Claiming To Be A Uyghur Quickly Exposes A CIA Asset Slandering China

- r/neoliberal was created by a neoliberal think tank » BPR Interviews: The Neoliberal Project


They have their eye on the fediverse now as well: Atlantic Council » Collective Security in a Federated World

This is all an extension of how traditional corporate media works, which has been understood for decades:

- Michael Parenti, 1986: Inventing Reality: The Politics Of The Mass Media

- Edward Herman & Noam Chomsky, 1988: Manufacturing Consent: The Political Economy of the Mass Media.

A five minute primer: Noam Chomsky - The 5 Filters of the Mass Media Machine

Liberals will cry for impossibly indisputable evidence then go silent when you actually manage to provide it.

This. Everything wrong in the US is some bot army peddling propaganda at labor camps. They might even add the Atlanteans to the list of foreign influencers before admitting the US has a significant number of fascists, racists, and xenophobic assholes who already elected a criminal as president, along with other war criminals, without a shred of repercussions.

but whatever makes you sleep better at night.

Uh, actually yeah it did. Turns out they were just normal pro-russia conservatives. I thought the Cucker interview would have made that connection pretty obvious. They weren’t paid to post that kind of propaganda, they were doing it willingly.

These things are not mutually exclusive. The fact that Russian propaganda bots swayed a large percentage of American republican fascists in no way debunks the bots. It just means that it was an effective propaganda campaign.

I’m sorry I was hoping what I was asking would be more clear:

Is there something specific you can link to that disproves the existence of Russian bots on social media?

Sure but first you have to get me a link to an instance of evidence ever swaying someone’s opinion on the internet and not just turning into a debate about the validity of the evidence. EDIT: And the admin literally did that and you went quiet, you’re so fucking full of shit.

If I remember correctly, the Senate Select Committee on Intelligence quietly released a bipartisan report some years back confirming the existence of Russian trolls in the 2016 election. I haven’t seen any evidence or reports released since then showing that they have ceased their attempts at manipulating social media.

I do agree, though: a lot of the posts are just from real people who know that hate gets more engagement, and from crazy people.

They did, but they determined the huge Russian disinformation campaign was the work of something like 5 accounts and 200k in ad spend on Facebook. In other words, a wet fart.

Why can’t it be both? Russian social media engineers wouldn’t have to try that hard. America’s full of fascist capitalist warmongers, with a majority of liberal sheep for citizens, ready to believe whatever truth they want to be more true than reality.

Perhaps Russia isn’t as involved as of late, though? Perhaps Putin killed off all his social media engineers by sending them to fight in Ukraine? I’m waiting for the reports to come in from field reporters finding Russian boy scout corpses on the battlefield. That’s when you know he doesn’t have the resources to spend trying to influence American elections. He’s got bigger problems, like mandating Russian breeding schedules.

They are probably paying clickfarms.

Reddit probably isn’t, as that would be cooking their metrics and Huffman would get fucked by the long arm of the SEC. They might still be, Huffman loves Elon and Elon got away with tons of shit.

Advertisers are probably paying more content farms to astroturf it though.

Plus without the API, do you really think people just stopped scraping Reddit? They just run a headless Chrome instance now and I bet Reddit doesn’t look the gift horse of traffic in the mouth.

Advertisers are probably paying more content farms to astroturf it though.

Yup, in fact we just banned ~13 accounts tonight from a subreddit I’m still involved with. That’s just the ones we identified, and it’s only a medium-sized subreddit.

A user noticed that the responses to a post sounded a little off and reported it. Turns out there was a network of bots using generative AI to mix real academic advice (e.g., “Go talk to the advising office”) with occasional subtle advertisements (e.g., “I recommend using Grammarly and [advertised service]”).

Once we caught on, we looked through the history of those accounts and gathered as many as we could identify and banned them all.

I don’t think this is Reddit’s doing, and they’re usually good about banning spam bots site-wide once a mod report is made. Still, they benefit from increased activity, so they have an incentive to do less of that. It was also much harder to notice the problem because of the AI generation. If a user hadn’t explicitly reported it, I probably wouldn’t have noticed.

I highly suggest you ban what they were advertising and not just the accounts.

If advertisers realize the shady bot farms they deal with are causing any comment that mentions their product to be automatically deleted, they will stop.

This is going to be the Idiocratizing of the internet. AI is going to be training on itself with these unidentified posts and get dumber and dumber.

Let’s hope no one lets it have access to anything important…

It feels a little like how steel made before above-ground nuclear testing, called low-background (or pre-war) steel, is prized for building some sensors because it isn’t contaminated.

Pre-AI information needs to be preserved; otherwise we might not really know whether the info we’re seeking is fact-based in any way.

This is going to be the Idiocratizing of the internet.

93/94 was when I first got AOL on a 14.4k modem. I’m one of those shitty users!

We used to use gopher and college FTP sites to download warez as a freshman in HS, and then moved on to Hotline trackers.

I know, because I was there!

I was too. The internet was never for normies or businesses and between the two of them they’ve managed to turn it into a complete dumpster fire.

Except I can totally see them committing securities fraud in order to pump up the numbers. It seems very much like something they would do.

I think that’s what this part of the comment was about:

They might still be, Huffman loves Elon and Elon got away with tons of shit.

The SEC got its funding slashed by Trump - are they like the IRS now where they don’t have the resources to truly do the job anymore?

The API is not gone, and is still free for both “for non-commercial researchers and academics under our published usage threshold” and “for moderator tools and bots”

https://www.redditinc.com/blog/apifacts

There are several ways to add your personal API key to (modified) final versions of Sync, Relay, Infinity, and even Apollo on iOS to be able to continue to use those clients, however Reddit has changed how Reddit links work, so those methods are becoming more and more broken.

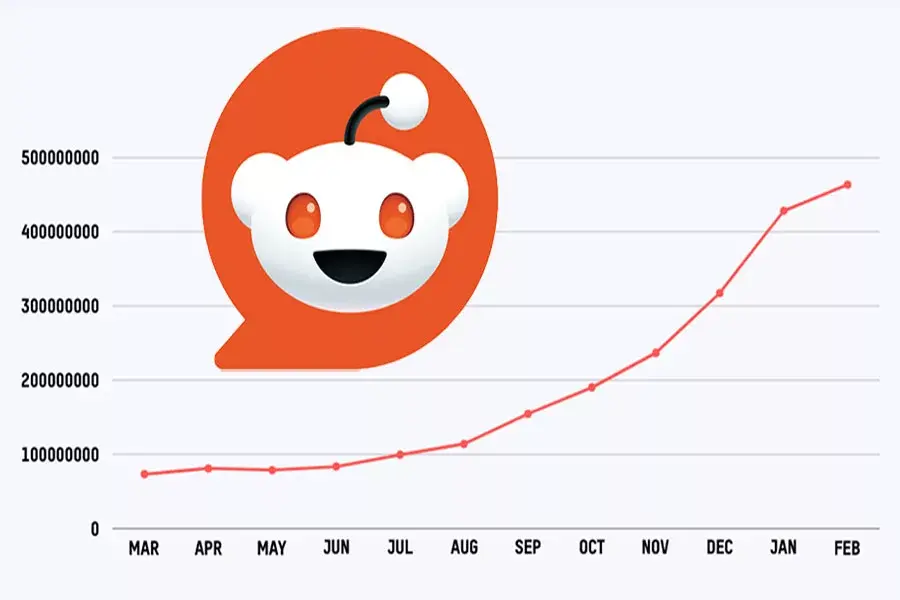

Did you guys read the article? It’s all about how since google and Reddit penned a deal to use Reddit to train google AI models, google is now massively pushing Reddit links in search results.

And their answer, ironically, is to avoid “Gen-AI garbage.”

But you should really read the article. It pissed me the fuck off. Because that sounds…massively illegal.

I wonder if Reddit user activity has noticeably increased. Probably not.

Like, this will help Reddit in the short term, and honestly is a good idea from a search perspective (how many queries have I manually appended “Reddit” to?), but it doesn’t necessarily help with the fundamentals of the platform.

You scratch my back, I scratch yours

I think I’ve commented on this before, but over the pandemic years I did a little experiment. Every day I bookmarked the obvious content-reposting bot accounts on the first few pages of r/all. After a while I checked back on the accounts. The majority of them became cryptocurrency spam bots; a very small percentage spammed random things. My success rate at picking out the bot accounts was extremely high: pretty much all of them were bots, except for maybe a handful.

spez is basically exit scamming with reddit. Whoever is buying the dataset is getting robbed blind. That’s if reddit inc isn’t being upfront behind closed doors. Maybe they are. After all reddit does have well over a decade of mostly organic activity. The recent data has to be absolute trash though.

It isn’t like you can’t otherwise get the older data if you really want though, pretty sure it’s on torrents. The newer stuff is all they have to sell.

Google wants the data to be exclusively licensed, so they can pursue any competing LLMs and sue them to death - I mean, develop a ‘moat’

It’s not really about the actual data access

I don’t buy that, given

- All the effort Reddit has put into locking down data access

- Google itself was behind the lawsuit establishing fair use for scraped datasets, and it’s looking likely that will be upheld

Would be happy to hear it if there are reasons I’m not aware of that this is the intention, though.

As someone who still semi-frequents Reddit, it’s mostly bots, more and more of which are clearly using some form of ChatGPT or another LLM. It’s actually kinda absurd; I’ve seen many a comment chain where it’s just different bots replying to each other, both pretending to be real people.

If bots actually do start frequenting Reddit, and they get hard to detect, the AI content generation will start poisoning itself. Isn’t that cool?

For me, the cool part is that the vast majority of people can’t tell anything has changed.

Also, we can be rather poisonous ourselves.

deleted by creator

I must say I’ve seen an increase in conversations on Reddit where it seems like everyone involved has severe lead poisoning.

Nah, it’s always been like that.

Only if you count bots lol

Yeah this isn’t Reddit but more than 80% (>4/5) of Twitter is bots. It’s to the point where you can find any blue checkmark account, reply to them with a prompt, and more likely than not they’ll have a wacky and clearly autogenerated response. Sometimes they just reply things like “sorry, I can’t generate content that depicts violence” to random posts too.

Dead internet theory is almost a reality and I hate it. It’s already happened to Google search results / blogs.

Almost? It’s been a thing for a while. Shit, Reddit got started by using bots to feign engagement. It’s just that it’s gotten so much easier and faster.

I had my Reddit very heavily curated, my subs were mostly smaller subreddits. I was incredibly active and had my settings so that anything I voted on would not appear on my homepage. I got to see a ton of posts because of that.

Around 2021, I started noticing that reposts weren’t just people coming in and posting things we’ve seen a dozen times because they had no way to know it was a repost. It was bot networks that would take top posts and then other bot accounts would recreate the original post’s comment section. The accounts followed patterns and became really obvious to spot after a while.

The original tells were the bots taking really specific posts that only made sense in that context. Popular post from last Christmas? The bot doesn’t know what Christmas is; it sees a popular post from a few months ago and reposts someone happy about their gifts in August. “Look at this beautiful picture I took of the summer Alaskan wilderness this morning,” but it’s February. The photography subreddits were obvious because the bots would rotate the picture a few degrees, which would sometimes ruin the picture’s aesthetic.

I’m not sure if it was just me spotting them easier or if they were really ramping up into 2022 but by the time they killed API access and I stopped using it, I think over 80% of posts were bots. Made leaving the site way easier.

Yeah, repost bots were out of control and places like freekarma4u helped them propagate for years with no interference from reddit. Would’ve been simple to shut that down if they were really worried about stopping bots but instead they ignored numerous reports, allowing the bots to run rampant.

Like Lemmy. Full of cross-posting bots.

Cross-posting bots are a lot less problematic to me than bots designed to mimic human engagement with said cross-posts.

Lemmy is not even within an order of magnitude of Reddit and Western social media. Lemmy has 40-50k real active users.

I’m doing my part!

No, you were not supposed to be the bot.

Bots are a fact of life, unfortunately.

Almost 50% of all internet traffic is bots.

It’s sort of become a bit of a meme to end every google question with ‘reddit’ to trick it into showing you an actual human response. I’m sure that’s been good for traffic

I’ve had to start limiting the date to pre-2023 to keep ai bs out of the results

Just add “before:2023” to your search query BTW.

Thank you!

Add what? Didn’t show up on mobile

add “before:2023” to your search query

That’s an excellent tip! I draw occasionally and finding references for animals is so much worse than it used to be.

I’ve heard some AI experts on Hard Fork suggest 80% of the Internet will be AI bot trash in 2 years.

Honestly wouldn’t surprise me. There’s already so much garbage when you google pretty much anything

Bots produce traffic as well, though. So it’s absolutely possible that traffic increased significantly. Just not in a meaningful way.

I wonder if there was some kind of technological revolution that made it exponentially easier to generate text that happened recently.

Good Post Theory

Subs picked to be “mainstream” get botted to death and every other sub is half dead, so not really. Quality fell off a cliff.

Simplest explanation is that the general public doesn’t give a shit and while Facebook is on the downturn (not sure if numbers can back that up) people need to go somewhere else. Maybe that is reddit right now, they got the marketing and content to get people on it.

I’d like to hear that crapbook is going downhill, but its share price says otherwise

Its share price doesn’t matter in this context, since Meta also owns Instagram, which is absolutely not going downhill at the moment.

Facebook however is losing active users, especially in the younger age ranges and even more pronounced in Europe and the US.

They also own WhatsApp which has a massive user base outside of the US.

But it’s not really a cash cow. At least I don’t know of any widespread attempts at monetization (yet).

WhatsApp is monetized by selling your private data, same as FB.

Enron’s share price was very high right up until the end, too. Share price is not necessarily a good indicator of underlying fundamentals

Stock price isn’t a representation of the current value of a company, it’s the projected value of a company down the line.

I cannot wait for reddit going public, it’s going to generate so much drama, that’s going to be soooo good.

Lemmy instances brace yourself

I’m just sad my bank won’t let me buy puts without using my house or business as collateral.

The article seems to suggest a change on the Google search algo and how Reddit pumps the SEO is to blame as it’s showing up in search rankings above other more relevant results.

I’m assuming “traffic” here is individual page visits, which would shoot up if people are just pulling up one page from a “how do I do X” type of search. I doubt this boost is coming from people sticking around, but I’m sure that’s not how Reddit will spin it.

This would be my guess- Reddit is more reliable for random queries than much of the internet, as AI propagates.

I see more and more suggested “my search Reddit” on Google, even as I visit Reddit way less now.

So Reddit is turning into Quora.

Painfully accurate.

In that it will soon go to shit? Yes.

I do believe reddit pops up in my search results more frequently these days than it did a year ago, without any explicit prompting with ‘reddit’ keyword… (just based on my impression, though)

I honestly can’t search a damn thing and not have Reddit be the first result. I’ve basically been using AI over search to fix things… probably as intended.

That, or Google bought a lot of shares ahead of the IPO.

There are search addons able to filter out specific sites, if you’d like it.

A lot of AI LLMs have been trained on reddit…

Not saying it’s bad info sometimes. There’s a reason most of us used Reddit. It just seems like it’s the new SEO-optimized background noise now. It’s not what I’m looking for, and I’d rather avoid the site now.

Yes and no. No, it’s not Reddit doing it. Yes, Google is strongly favoring reddit results. They are combating AI content in this manner.

Google searches are becoming more and more worthless. This may be the beginning of the end for them unless users quickly adopt their generative search approach when they release it.

Yes. Google is strongly favoring both in search results in their feeble, and failing, attempt to combat AI.

Google has massive swing; there’s a whole industry around getting Google to prefer your low quality crap nobody wants to see over others’ low quality crap nobody wants to see.

If Google has finally figured out a metric to measure “helpfulness” of a website and punishes unhelpful websites, a bunch of dogshit that would have otherwise gotten top spots may have been banished to page 2.

Reddit results would naturally creep up because of that (and therefore get a lot more clicks), even if they didn’t change at all.

Didn’t Reddit signpost that they’d signed a deal with Google over AI? Is Google driving visitors to Reddit in exchange and to their benefit?

So honest. So unbiased.

Google directly said a few months ago that they’re changing their algorithm to expose more Reddit content.

Source?

Google

The whole reason that Google exists today is that their PageRank algorithm was a great way to identify good content. At its most basic, it worked by counting the number of pages that linked to a certain page. More incoming links meant the page was more useful. It didn’t matter how many relevant search terms you stuffed into your page. What mattered was votes from other people, expressed in the form of linking to your page.
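For anyone curious how “links as votes” turns into a ranking, here is a minimal sketch of the textbook PageRank power iteration. It is not Google’s actual (and long since changed) implementation. The damping factor of 0.85, the iteration count, and the four-page link graph are all illustrative assumptions. It also refines the simple counting described above: each page splits its own score among the pages it links to, so a link from a highly ranked page counts for more.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank via power iteration (illustrative sketch).

    links maps each page to the list of pages it links to.
    Every page must appear as a key (possibly with an empty list).
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal scores
    for _ in range(iterations):
        # Every page first gets the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page splits its current score evenly among its outgoing links.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # Dangling page (no outgoing links): spread its score over all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical link graph: most pages link to "guide", nobody links to "spam".
web = {
    "guide": ["blog"],
    "blog": ["guide"],
    "forum": ["guide", "blog"],
    "spam": ["guide"],
}

scores = pagerank(web)
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

The sketch also shows the weakness described in the next paragraph: a page that nobody links to (here “spam”) can still push up another page’s score just by linking to it, which is exactly what cheap link farms exploit.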

But, that algorithm failed for 2 reasons. One is that it became cheaper and easier to put up sites that linked to sites you wanted to promote. The other was that people stopped blogging on their own blogs, and stopped creating their own websites, and instead used walled gardens like Facebook, Twitter, Reddit, etc. That meant it was hard to measure links back to a site, and that it was easier to create fake links.

So, now it’s a constant war of SEO people vs. Google Search Quality people, and the Google people are losing. Sometimes there are brief victories for Google which result in good Reddit results appearing higher up. Then the SEO people catch up and either pollute Reddit and/or push Reddit links off the first page.

It would all be really depressing even if it weren’t for generative AI being used to pollute everything. With LLMs coming in and vomiting their content all over everything, we might be forced back to the bad old days of Yahoo where some individual human curated lists of good things and 99% of content was invisible.

I actually have noticed a lot more Reddit in my search results recently.

Not even close if we’re talking about current users or active contributors. After they shut down third-party apps and sided with advertisers over mods, there was a huge migration off the platform to several other platforms. Many smaller subreddits are ghost towns, and the biggest ones that are still active have a smaller participating community, fewer total votes, and changing norms.

It’s not just Eternal September; it’s the reverse of what happened when Digg died, when communities grew and changed because people were joining. Users are adding site:reddit.com or whatever to Google searches because, thanks to SEO, general searches are an advertising dumpster fire, but those search results are going to degrade over time along with the site’s quality if they continue to make such shitty decisions for communities and users, or if people move to other AI-based search tools.

Where did everyone go? I thought Lemmy was the new hangout but it still seems so small, even popular posts are only getting a handful of comments?

Well, federation kinda spreads users out. For example, I can’t log in to kbin on my Lemmy apps, but I can see kbin posts, and the vast majority of my time is on Lemmy. In other words, it’s harder to participate across instances, so fewer people do.

There are other platforms that are probably suffering some form of the same fate, they got an influx of ex-redditors, but not a high enough volume to really take off and get high participation rates.

I dunno, I prefer Lemmy/fediverse. The churn isn’t there so you can actually interact with people instead of competing with inane reddit quips and top comment retreads.

I think it’s that many people didn’t really leave Reddit; some migrated to Lemmy, some to Discord, some to other small sites, and some just quit that style of website.

Lemmy definitely is still pretty small, but I think it’s growing pretty well (I remember checking it out years ago and it being a super tiny niche site). It takes time for things to get set up and for users to get comfortable and grow communities they care about. Organic growth is slow.

For me, Lemmy content is better in every way, EXCEPT for local subs / communities. I really miss my well populated, engaged local subs.

It feels much more filled out than after the initial exodus. Smaller/niche communities are pretty bare, though.

tiktok? ffffff

Taking a cursory look, I feel like posts still aren’t being engaged with like they used to be. I remember seeing posts with 100,000 upvotes very regularly on the front page, but you really don’t see that anymore. Yeah, maybe they tweaked their calculations, but why make your site look less engaging than before right before a major IPO?