Oh ez, that’s only 17 orders of magnitude!

If we managed an optimistic pace of doubling every year, that’d only take… about 56 years. The last few survivors on the desert world can ask it if it was worth it.
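Here is a quick check of the doubling arithmetic as a minimal Python sketch. It assumes “17 orders of magnitude” means a growth factor of exactly 10^17 and that capacity doubles cleanly once per year. Both are simplifications.

```python
import math

# Growth factor implied by "17 orders of magnitude" (assumed to be exactly 10^17)
target_factor = 10 ** 17

# With one doubling per year, the number of years needed is log2 of the factor
years_needed = math.log2(target_factor)
print(f"{years_needed:.1f} years")

# For comparison: 40 yearly doublings only give a factor of about 1.1e12,
# i.e. roughly 12 orders of magnitude
print(f"{2 ** 40:.2e}")
```

Each doubling adds about 0.3 orders of magnitude (log10 2 ≈ 0.301). That is why 17 orders of magnitude need roughly 56 to 57 doublings rather than 40.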

Rather amusing prediction that despite the obscene amount of resources being spent on AI compute already, it’s apparently reasonable to expect to spend 1,000,000x that in the “near future”.

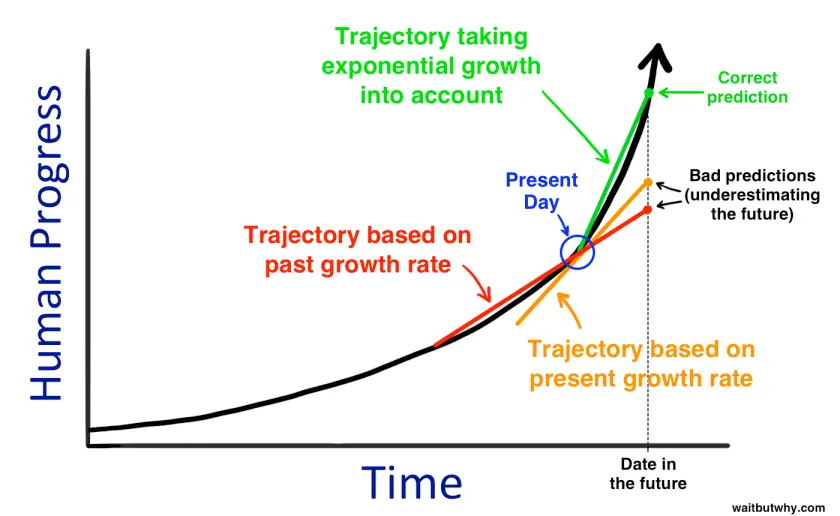

Doubling every year is a conservative estimate, though. Almost all technological progress has been exponential rather than linear. It would be bizarre for AI not to do the same.

Edit: I think it’s going to be hyperbolic growth rather than exponential, but that’s a personal prediction.

I don’t think you’ve understood what doubling every year means…

You’re right, I mixed up exponential growth with hyperbolic growth, where the rate of growth itself increases exponentially.

Truly sorry for that

I still think we may see this kind of growth with AI, but my comment was in error and I’ve since changed it.

What these people don’t realize is you’re never gonna get AGI by just feeding a machine an infinite amount of raw data.

You’re right. We should move on to feeding it orphans.

Check out my new startup at modestproposal.ai

Oh, that’s why the orphan crushing machine exists. Completely realistic, actually.

That reminds me, I wonder if all the mods have already been updated for the new version of RimWorld.

You sound very confident of that. Have you tried it?

There might actually be nothing bad about the Torment Nexus, and the classic sci-fi novel “Don’t Create The Torment Nexus” was nonsense. We shouldn’t be making policy decisions based off of that.

wild

Yes, we know (there are papers about it) that for LLMs, every increase in capabilities requires exponentially more training data. But don’t worry, we’ve only consumed half the world’s data to train LLMs, so there’s still a lot of room to go ;).

Interesting. I recall a phenomenon by which inorganic matter was given a series of criteria and adapted to changes in its environment, eventually forming data which it then learned from over a period of millions of years.

It then used that information to build the world wide web in the lifetime of a single organism and cast doubt on others trying to emulate it.

But I see your point.

humans are just like linear algebra when you think about it

Not me dawg, I am highly non linear (pls donate to my gofundme for spinal correction)

there really is no limit on how bad an argument you types will leap to defend lol

Which at no point involved raw data. Layman’s hubris.

deleted by creator

My dog does linear algebra every time he pees on a fire hydrant so that he only pees for the exact amount of time needed. Similarly, when I drain my bathtub, it acts as a linear algebra machine that calculates how long the water takes to drain through a small hole.

Is this a fun way to look at the world that allows us to more readily build computational devices from our environment? Definitely. Is it useful for determining what is intelligence? Not at all.

Could you not make these kinds of stupid arguments just to score debate points?

deleted by creator

Where the fuck was the insult? Wild

You’re the one making incoherent, illogical drive-by comments, clown.

deleted by creator

Yes, and that was a stupid argument unrelated to the point made that evolution used this raw data to do things, thus raw data in LLMs will lead to AGI. You just wanted debate points for ‘see somewhere there is data in the process of things being alive’. Which is dumb gotcha logic which drags all of us down and makes it harder to have normal conversations about things. My reply was an attempt to make you see this and hope you would do better.

I didn’t call you stupid, I called the argument stupid, but if the shoe fits.

E: the argument from the person before you (‘evolution created us with a lot of data and then we created the internet’) is also silly, of course. If you just go ‘well, raw data created evolution’, then no matter how we get AGI (say it is built out of post-quantum computers in 2376), this line of reasoning would say it comes from raw data, so the whole conversation devolves into saying nothing.

No no see, since everything is information this argument totally holds up. That one would need to categorize and order it for it to be data is such a silly notion, utterly ridiculous and unnecessary! Just throw some information in the pool and stir, it’ll evolve soon enough!

it was straight up “not even wrong”

deleted by creator

The sensory inputs are a continuous stream of environmental data.

cool graph what’s the x axis

Bitcoin.

Lol

Or: Let’s not do that at a time where our energy consumption is literally killing the planet we live on.

You don’t understand, after we invent ~~god~~ AGI all our problems are solved. Now step into the computroniuminator, we need your atoms for more compute.

Yeah, I don’t see why people are so blind to this. Computation is energy-intensive, and we have yet to optimize it for energy. Yet, all the hopes…

We do optimize, it’s just that when you decrease the energy for computations by half, you just do twice the computations to iterate faster instead of using half the energy.

that looks like someone used Win9x MS Paint to make a flag, fucked it up, and then fucked it up even more on the saving throw

Any vexillologist around to confirm this?

vexologist here. This certainly is vexing.

Surprised this isn’t a bluecheck.

But maybe it’s not visible.

deleted by creator

Don’t sell me potential if you’re not a battery manufacturer.

I implore the tech nerds to learn a modicum of biology before making very confident statements.

doesn’t seem like it has great potential

did you even experience a single conscious thought while writing that? what fucking potential are you referring to? generating reams of scam messages and Internet spam? automating the only jobs that people actually enjoy doing? seriously, where is the thought?