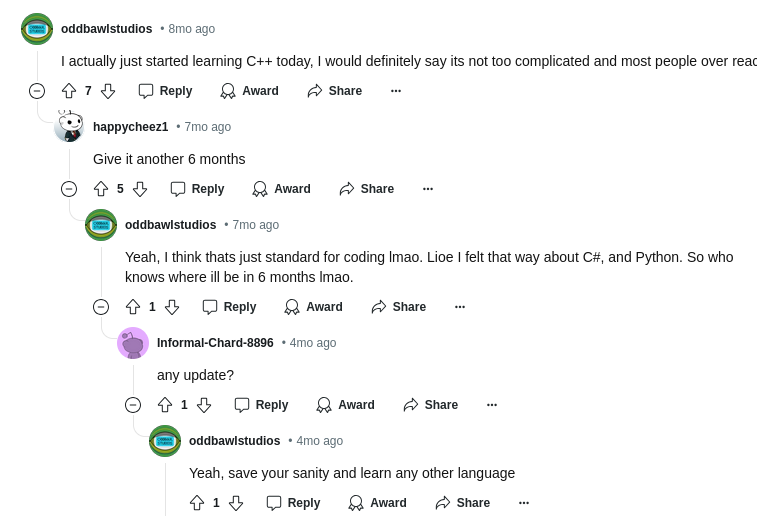

In case it doesn’t show up:

“Give it six months”

It only needed 3!

Particularly unexpected, because 3! = 6.

That’s because C++ is such a high performance language, it gets things done faster

7m - 4m = 3m

My bad. I was going off OP’s first comment at 8 months and not the reply at 7 months.

I came back to be like actually no, but then I was like oh I’m the dumb dumb.

Rust’s cargo is great, I’d say it would be best to make the switch sooner rather than later once your code base is established. The build system and tooling alone are a great reason to switch.

I’m a gameplay programmer who has worked with Unity and Unreal, and I’ve experimented with Rust for gamedev (though only for hobby projects) and for regular code. My conclusion so far is that Rust sucks for gameplay code; for most other things it’s kinda nice.

The biggest reason is that it’s much harder to write prototype code to test out an idea to see if it’s feasible and feels/looks good enough. I don’t want to be forced to fully plan out my code and deal with borrowing issues before I even have an idea of if this is a good path or not.

I would say though that because you are using ECS stuff it is at least plausible to do in Rust but at least for my coding/development style it still isn’t a good fit.

> The biggest reason is that it’s much harder to write prototype code to test out an idea to see if it’s feasible and feels/looks good enough. I don’t want to be forced to fully plan out my code and deal with borrowing issues before I even have an idea of if this is a good path or not.

There are options for this with Rust. If you wanted to use pure Rust you could always use unsafe to do prototyping and then come back and refactor if you like it. Alternatively you could write bindings for C/C++ and do prototyping that way.

Though, I will say that this process gets easier as you gain more experience with Rust memory management.

Not really. Unsafe doesn’t allow you to sidestep the borrow checker in a decent way. And even if you do it the Rust compiler assumes non aliasing and breaking that will give you loads of unexpected problems that you wouldn’t get in a language that assumes aliasing…

Testing something that only has side effects to the local scope is probably not too hard but that isn’t the most common case for gameplay code in my experience…

Going through another language basically has the same issues as unsafe except it’s worse in most ways as you’d need to keep up to date bindings all the time plus just the general hassle of doing it for something that could have been a 10 min prototype with most other setups…

Now sure, it’s possible that I would have better results after doing even more Rust, especially with some feedback from someone who really knows it, but that doesn’t really change anything in just general advice to people who are already working on something in C++, as they likely won’t have that kind of support either.

Those are fair points. I haven’t used it for a little while and forgot the exact usage of unsafe code. I love Rust, but I totally agree that it’s a rough language for game dev. Especially if you’re trying to migrate an existing project to it since it requires a complete redesign of most systems rather than a straight translation.

Unsafe doesn’t let you just ignore the borrow checker, which is what generally tripped me up when learning to write rust.

That’s fair, I honestly haven’t used it in a while and forgot the real usage of unsafe code. As I said to another comment, it is a really rough language for game dev as it necessitates very different patterns from other languages. Definitely better to learn game dev itself pretty well first in something like C++, then to learn Rust separately before trying game dev in Rust.

Story by a game dev who gave up on Rust after 3 years https://loglog.games/blog/leaving-rust-gamedev/

I’m seriously considering dropping everything and jumping to Rust because of Cargo.

Well if you’re into game dev, ECS and Rust, there’s like a 99% chance you know of it, but just in case you don’t: We have bevy, now with an extra full-time dev (Alice, who’d been working hard at it for years, I think she’s a bigger contributor than the author himself at this point lol)

Brackeys started a series on Godot recently. If you are writing a smaller game, GDScript looks attractive and far simpler.

They’re mucking about with it a little though, like in that post: when checking that types don’t match, ‘! value is type’ can now be written ‘value is not type’, which is more readable but not as logical in terms of the language.

Being a Python simp, I find GDScript just different enough to nag. There’s a lot of QoL stuff they don’t have and aren’t (currently) looking to add in order to keep the language simple. Honestly has me looking to use C# instead.

So things like abstract classes are mostly absent from my codebase.

I believe the consensus nowadays is that abstract classes should be avoided like the plague even in languages like Java and C#.

I have not heard this consensus. Definitely inheritance where the base class holds data or multiple inheritance, but I thought abstract was still ok. Why is it bad?

In 99% of the cases, inheritance can easily be replaced with composition and/or interfaces. Abstract classes tend to cause hard dependencies that are tough to work with.

I’m not sure why you would use abstract classes without data. Just use interfaces.

How do you implement an interface in C++ without an abstract class?

Ask Bjarne to add interfaces enough times that he gives in.

On a more serious note, I’m not exactly sure what the best C++ practice is. I guess you just have to live with abstract classes if you really want interfaces.

An abstract class with no member variables serves the same purpose in C++.

The only problem is to ensure the entire team agrees to only use it like an interface and nothing else. But I guess that’s the only proper way to do it in C++, for now.
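For anyone following along, here is a minimal sketch of the “abstract class with no member variables” approach. The names `Greeter`, `EnglishGreeter` and `welcome` are made up for illustration; the only real convention shown is pure virtual functions plus a virtual destructor.

```cpp
#include <cassert>
#include <string>

// Interface by convention: only pure virtual functions and a virtual
// destructor, no data members. (Hypothetical names.)
class Greeter {
public:
    virtual ~Greeter() = default;
    virtual std::string greet(const std::string& name) const = 0;
};

// One implementation; `final` and `override` let the compiler catch
// signature mistakes.
class EnglishGreeter final : public Greeter {
public:
    std::string greet(const std::string& name) const override {
        return "Hello, " + name;
    }
};

// Callers depend on the interface only, never on a concrete class.
std::string welcome(const Greeter& greeter) {
    return greeter.greet("world");
}
```

Nothing in the language stops a teammate from adding a data member to `Greeter` later, which is exactly the team-discipline problem described above.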

I know at least three ways, one of them involves variadic macros.

You don’t even need to look that far, take any sufficiently aged library, like OpenGL.

It was rhetorical.

Yet I still had an urge to explain an obvious thing. Because it’s C++, so everything goes. There are even tools to auto-generate C++ interfaces, because of course someone decided that C++ is inadequate and must be improved using some kind of poorly-documented ad-hoc extension language on top of C++.

Say List is an interface.

You have implementations like ArrayList and LinkedList.

Many of those method implementations will differ. But some will be identical. The identical ones go in the abstract base class, so you can share method implementation inheritance without duplicating code.

That’s why.

If the lists have shared components then that can be solved with composition. It’s semantically the same as using abstract classes, but with the difference that this code dependency doesn’t need to be exposed to the outside. This makes the dependency more loosely coupled.

In my example, how is the code dependency exposed to the outside? The caller only knows about the List interface in my example.

In your example, the declaration of ArrayList looks like:

`public class ArrayList extends AbstractList implements List { }`

The dependence on AbstractList is public. Any public method in AbstractList is also accessible from the outside. It opens up for tricky dependencies that can be difficult to unravel.

Compare it with my solution:

`public class ArrayList implements List { private AbstractList shared = new AbstractList(); }`

Nothing about the internals of ArrayList is exposed. You’re free to change the internals however you want. There’s no chance any outside code will depend on this implementation detail.
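Since the thread is mostly about C++, here is a rough sketch of the same composition idea in that language. `List`, `SizeTracker` and `ArrayList` are hypothetical names, and the shared logic is deliberately trivial; the point is only that the helper is a private member, so it never shows up in the public type.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// The only thing callers ever see (hypothetical interface).
class List {
public:
    virtual ~List() = default;
    virtual void add(int value) = 0;
    virtual std::size_t size() const = 0;
    virtual bool isEmpty() const = 0;
};

// Logic that several list implementations could share.
// It is a plain helper class, not a base class.
class SizeTracker {
public:
    void grew() { ++count_; }
    std::size_t size() const { return count_; }
    bool isEmpty() const { return count_ == 0; }
private:
    std::size_t count_ = 0;
};

// Composition: the helper is a private member, so outside code
// cannot come to depend on it.
class ArrayList final : public List {
public:
    void add(int value) override {
        items_.push_back(value);
        shared_.grew();
    }
    std::size_t size() const override { return shared_.size(); }
    bool isEmpty() const override { return shared_.isEmpty(); }
private:
    std::vector<int> items_;
    SizeTracker shared_;
};
```

Swapping `SizeTracker` for something else later changes no public signature, which is the loose coupling argued for above.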

Perhaps we have a terminology mismatch, I tend to use abstract class and interface interchangeably. I’m not sure it’s possible to define a class interface in c++ without using inheritance, what kind of interface are you referring to that doesn’t use inheritance?

I agree, my terms aren’t perfect, but as you stated there isn’t really such a thing as an interface in c++, traditionally this is achieved via an abstract base class which is what I meant by using them interchangeably.

I know there are many things you can do in c++ to enforce an interface, but tying this back to the original comment that inheritance is objectively bad, I don’t think there’s any consensus that this is true. Abstract base classes (with no data members) and CRTP are both common use cases of inheritance in modern C++ codebases and are generally considered good design patterns.

I don’t think it’s what the person you’re replying to meant, but template metaprogramming in modern c++ allows the use of “duck typing” aka “static polymorphism” where you can code against an interface without requiring inheritance.

Typically this is done with CRTP which does require inheritance. But I agree, you can do some meta programming or use concepts which can enforce interfaces in a different way. But back to the original comment that interfaces via inheritance are objectively bad, I don’t think there’s any consensus that this is true. And pure virtual interfaces and CRTP are both common use cases of inheritance in modern C++ codebases and are generally considered good design patterns.
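To make the two flavours concrete, here is a small sketch: `Square` uses CRTP (inheritance, but resolved at compile time), while `Rect` uses no inheritance at all and is accepted by the same template purely because it has a matching member. All names are invented for illustration, and the code sticks to plain templates rather than C++20 concepts.

```cpp
#include <cassert>

// CRTP: the base class template knows the derived type at compile time,
// so the call below is resolved statically (no vtable involved).
template <typename Derived>
class Shape {
public:
    double area() const {
        return static_cast<const Derived&>(*this).area_impl();
    }
};

class Square : public Shape<Square> {
public:
    explicit Square(double side) : side_(side) {}
    double area_impl() const { return side_ * side_; }
private:
    double side_;
};

// No inheritance at all: it simply happens to have an area() member.
struct Rect {
    double width;
    double height;
    double area() const { return width * height; }
};

// "Duck typing": compiles for any T that has a const area() member.
template <typename T>
double doubled_area(const T& shape) {
    return 2.0 * shape.area();
}
```

Passing a type without `area()` to `doubled_area` fails at compile time, which is where the long template error messages mentioned elsewhere in this thread tend to come from.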

The way I was taught was that you usually start off with only an interface and then implementing classes, and then once you have multiple similar implementations it could then make sense to move the common logic into an abstract class that doesn’t get exposed outside of the package

I usually break it out using composition if that’s ever needed. Either by wrapping around all the implementations, or as a separate component that is injected into each implementation.

After you’ve done some languages, they all look the same. Yes, some have interesting features like the indent-based blocking of Python, and I’ll have to look up if the new language has “else if”, “elsif”, “elif”, or whatever, but as long as it is coming from the family of ALGOL-like languages, it does not matter much. You’ll learn the basic functions needed to get around, and off you go.

Just a few weeks ago, I started learning Python. Yes, this indenting takes some time to get used to. My son has been doing Python for about a year now - he started with it at university. Maybe ten days after I started learning, I invited him to have a look at my first Python program. I have no idea what he expected. A “Hello, World” with a few extra features, maybe? Definitely not the 2.5k lines app I had written in my spare time, with GUI, databases, harvesting data from a web site with caching, and creating PDF files with optimized layout for the data I processed. In the end, it was just another programming language.

I guess you’ve never seen some of the 10-page template errors C++ compilers will generate. I don’t think anything prepares you for that.

I’ve seen way worse. Imagine a project that uses C preprocessor structures to make a C-compiler provide a kind-of C++. Macros that are pages long, and if you forget a single bracket anywhere, your ten pages look like a romance novel.

Or VHDL synthesis messages. You’ve got no real control over them, 99.9% of the warnings are completely irrelevant, but one line in a 50k lines output could hint at a problem - if you only found it.

So far, the output of C or C++ compilers (except for the above-mentioned project) has not been a problem for me, but I’ve been doing this for about 40 years now, so I’ve got a bit of experience.

Yep, sadly I’ve been exposed to a few such codebases before. I certainly learned a lot about how NOT to design a project.

You’ve been at it longer than I have, but I’ve already had coworkers look at me like I’m a wizard for decoding their error message. You do get a feel for where the important parts of the error actually are over time. So much scrolling though…

> You do get a feel for where the important parts of the error actually are

Yes, after decades of scanning large pages of text - code, errors, logs, search results, etc - a programmer’s ability to apply pattern recognition to screens of letters can be truly remarkable.

All I see now is blonde, brunette, redhead.

- Cypher, The Matrix

Yes, I have my share of coworkers asking me when they run into problems, too. They even ask me when they have Windows problems. And I don’t do Windows - I do Linux and embedded systems.

I had to do a module programming in VHDL for my EE degree.

Every time I see it mentioned anywhere I have a compulsion to scream: FUCK VHDL AND ITS FUCKING ERRORS! NO YOUR ANALYSIS & SYNTHESIS IS UNSUCCESSFUL!

I did not pursue a career in electronics…

One of the key problems of learning VHDL at universities is that most teachers there are amazingly clueless about the language. Not only do you need a bit of a different mindset (you do not program, you define), but their knowledge of language and systems is stuck in the last century.

When I was a regular in a VHDL group on the site we don’t mention here, we regularly had students who got taught techniques that are obsolete or at least deprecated since 1989.

I’ve not had those while working with concurrent programs with c++ for over a year. Pointers, QT programming, non-qt backend programming, coding an engine to work with computer vision runners (openvino mostly), image management (more pointers)… Idk, this is gonna sound rude but just code better? Most of my errors were segfaults, I have had to plug the debugger and/or tons of prints and I made it work.

If you want to see giant error logs, check pyspark errors. But even those have the relevant line of info and then all the rest of the garbage info that no one really needs, like any other language.

It really depends what you’re doing. The last big project I did with C++ templates was using them to make a lot of compile-time guarantees about concurrency locks so they don’t need to be checked at runtime (thus trading my development time for faster performance). I was able to hide the majority of the templates from users of the library, and spent extra time writing custom static_assert messages.
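As a rough idea of what a compile-time lock guarantee with a custom `static_assert` message can look like, here is a toy sketch. It is not the library described above; `LeveledMutex`, `Held` and the “increasing level” rule are assumptions invented for this example.

```cpp
#include <cassert>
#include <mutex>

// A mutex tagged with a compile-time level (hypothetical scheme).
template <int Level>
struct LeveledMutex {
    std::mutex raw;
};

// Proof that a lock of the given level is currently held.
template <int Level>
class Held {
public:
    explicit Held(LeveledMutex<Level>& m) : lock_(m.raw) {}

    // Take a further lock. Only compiles when Next > Level, so a wrong
    // lock order is rejected by the compiler instead of checked at runtime.
    template <int Next>
    Held<Next> then(LeveledMutex<Next>& m) const {
        static_assert(Next > Level,
                      "lock order violation: take locks in increasing level order");
        return Held<Next>(m);
    }

    bool owns() const { return lock_.owns_lock(); }

private:
    std::unique_lock<std::mutex> lock_;
};
```

With a `Held<2>` in hand, calling `.then()` on a `LeveledMutex<1>` stops the build with the custom message instead of a page of template expansion.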

C++ templates are in fact a compile-time Turing-complete language, as crazy as that sounds.
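The classic small demonstration is recursion through template specialisation, where the compiler does all the arithmetic. A sketch (the `Factorial` name is just the usual textbook choice):

```cpp
#include <cassert>

// Recursive case: Factorial<N> is defined in terms of Factorial<N - 1>.
template <unsigned N>
struct Factorial {
    static constexpr unsigned long long value = N * Factorial<N - 1>::value;
};

// Base case: an explicit specialisation stops the recursion.
template <>
struct Factorial<0> {
    static constexpr unsigned long long value = 1;
};

// Evaluated entirely by the compiler; nothing happens at runtime.
static_assert(Factorial<3>::value == 6, "3! should be 6");
```

These days a `constexpr` function does the same job more readably, but the template version shows why “the type system is a programming language” is not an exaggeration.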

Yeah, this only really applies to Algol-style imperative languages. Dependently typed languages like Idris and, say, array languages like APL are dramatically different in their underlying axioms.

Indeed. I have done languages like Prolog and Forth, too, and have actually written a bit in APL ages ago. Yes, they are different, but in the end, it just adds a little bit of complexity. The underlying algorithms are universal, just the methods and structures to achieve them differ. Actually, the first programming language I wrote was a simplified Forth derivative - in 6510 Assembler.

I didn’t even know about the Python indentation thing until I was practically done learning it! I’m just used to copying whatever indentation scheme my coworkers are using, for consistency.

I’m interested in specifically what your first program did?

Like what data is it harvesting and how is it showing that in PDFs or should I say why?

The software gets data from a website named bricklink.com, where one can buy and sell LEGO bricks and sets.

The main view holds a list of bricks I’ve selected from the large range available. In a requester to add parts, I can select a certain brick from the list of existing bricks by first choosing a category (e.g. “Bricks”) in the leftmost column, then choosing its shape (e.g. “Brick 2x4”) in the middle one, and then selecting the color (of the known existing colors for this brick, e.g. “Black”) in the right column. On all three selections I can multi-select and sort, which allows me to select e.g. a number of different Bricks, then sort the last view by color, and multiselect those bricks in the color I need. OK’ing the requester adds the part(s) to my list.

The list shows all the properties (including when this part was in production, how much a single brick of it weighs, as well as mold codes and article numbers). From there, I can choose some bricks (usually 15 in a go) to print, which produces a PDF with 15 labels on a double-sided A4-paper with cut-marks on one side. I cut them along the cut marks and put them into the bag with the corresponding part. This is quite helpful, if you consider a box with bags all containing e.g. black parts and bad lighting conditions in the storage room. Alternatively, I can print a double-sided paper with four larger cards to cut, which I laminate and use for marking boxes when I have larger amounts of one brick shape and color.

I can (and do) export those bricks to an export folder as CSV once I’ve printed the labels. In a future version of the software, I will be able to take a bag or box of parts from my collection, select it in my software via its article number, and derive an approximate count by weighing them (hence the part’s weight) to get an approximate inventory.

Hey, thanks for explaining the project for me. That sounds fascinating, is it public? Not that I want to steal it, I never got into Lego, just would like to see exactly how it works.

It also begs the question of how much Lego you actually have lol.

I’ve been thinking of building Lego when I’m sad but it seems so expensive.

No, the project is still in its early stages, far from what I would publish.

Regarding the amount of LEGO, well, if I write a resource management and inventory system, you can imagine that it is a bit more than a handful. My current estimates are around one million bricks, give or take a few hundred k. One of the reasons to inventory it…

Thanks again.

Do you make money with Lego or is it purely a hobby? Apologies for the questions, I’m inherently curious and this is very interesting.

I don’t make money with it, on the contrary - my son is a bit more direct here and claims I’m wasting money ;-) It is just a hobby. OK, a big one. I build my own models for fun and exhibit them at shows and events.

And: Curiosity is good. It kept the human race advancing.

Thanks again.

I would say that it isn’t wasted money if it’s something you enjoy and brings you pleasure or satisfaction.

> Definitely not the 2.5k lines app

MVC can be a great experience, especially with python dictionaries.

Learning how to get models and views together took some time, but after the second refactoring that week I managed to have neat objects for each MVC with clean interfaces. My biggest source in the app defines a requester with three columns of lists: a global category, then parts from that category, and finally the available colors for that part. Each of those views is an object, their interacting logic is an object, and finally the actual requester is an object, and this makes things easy to handle.

Great!

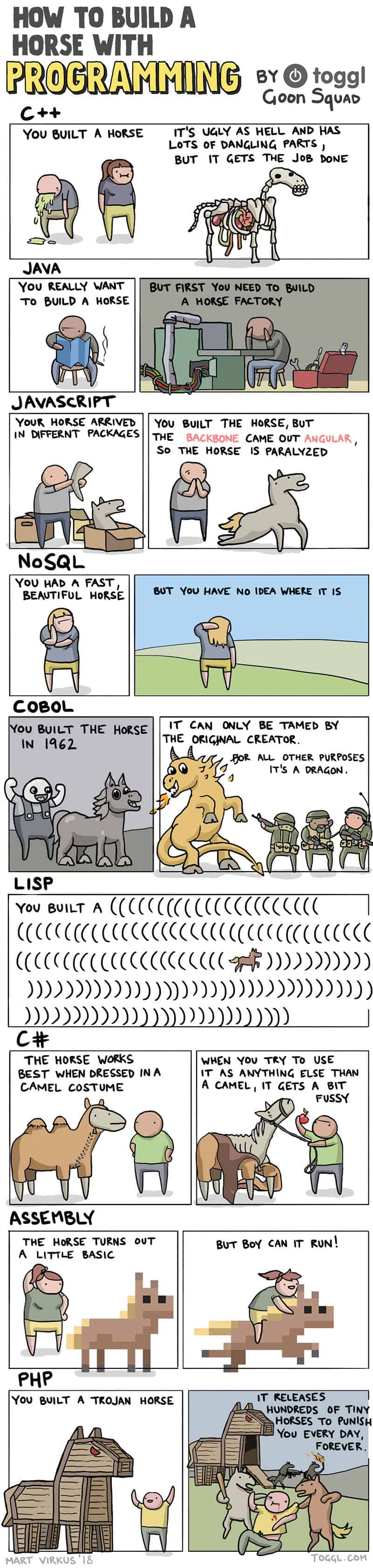

Jokes aside, I struggle more with abominations like JavaScript and even Python.

Do you have a minute for our lord and savior TypeScript?

As long as it can distinguish between int and uint - yesss!

TypeScript is still built on JavaScript, all numbers are IEEE-754 doubles 🙃

Edit: Actually I lied, there are BigInts which are arbitrarily precise integers but I don’t think there’s a way to make them unsigned. There also might be a byte-array object that stores uint8 values but I’m not completely sure if I’m remembering that correctly.

Not only is there a Uint8Array, there’s also a bunch of others: https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/TypedArray#typedarray_objects

Python has its quirks, but it’s much much cleaner than js or c++, not fair to drag it down with them imo

I think the thing with C++ is they have tried to maintain backward compatibility from Day 1. You can take a C++ program from the 80s (or heck, even a straight up C program), and there’s a good chance it will compile as-is, which is rather astonishing considering modern C++ feels like a different language.

But I think this is what leads to a lot of the complexity as it stands? By contrast, I started Python in the Python 2 era, and when they switched to 3, I was like “Wow, did they just break hello world?” It’s a different philosophy and has its trade-offs. By reinventing itself, it can get rid of the legacy cruft that never worked well or required hacky workarounds, but old code will not simply run under the new interpreter. You have to hope your migration tools are up to the task.

> even a straight up C program

There were breaking changes between C and C++ (and some divergent evolution since the initial split) as well as breaking changes between different releases of C++ itself. I am not saying these never happened, but the powers that be controlling the standard have worked hard to minimize these for better or worse.

If I took one of my earliest ANSI C programs from the 80s and ran it through a C++23 compiler, I would probably need to remove a bunch of `register` statements and maybe check if an assumption of 16-bit `int` is going to land me in some trouble, but otherwise, I think it would build as long as it’s not linking in any 3rd party libraries.
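On the 16-bit `int` point, here is a small sketch of how such an assumption can be made explicit when porting. `next_tick` is a made-up example of old code that relied on a counter wrapping at 65535; with a 32-bit `unsigned int` it would silently stop wrapping, whereas a fixed-width type keeps the old behaviour. (As for `register`, it was removed as a storage class specifier in C++17, so those do have to go.)

```cpp
#include <cassert>
#include <climits>
#include <cstdint>

// The standard only promises that int has at least 16 bits.
static_assert(sizeof(int) * CHAR_BIT >= 16, "int must be at least 16 bits wide");

// Hypothetical legacy helper: the counter is expected to wrap at 65535.
// Spelling out the width keeps that behaviour on any platform.
std::uint16_t next_tick(std::uint16_t tick) {
    return static_cast<std::uint16_t>(tick + 1u);
}
```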

The terse indexing and index manipulation gets a bit Perl-ish and write-only to me. But other than that I agree.

> but I don’t like the indentation crap

Do you not use indentation in other languages?

@UndercoverUlrikHD @unionagainstdhmo

It’s a big difference if indentation is a purely cosmetic thing or an integral, functional requirement of a language.

It just forces what should be standard practice anyway though. Poorly formatted code in languages relying on curly braces is far more annoying to parse in my opinion.

> Python is just glorified shell scripting

Absolutely not, python is an actual programming language with sane error handling and arbitrarily nestable data structures.

> I don’t like the indentation crap

Don’t be so superficial. When learning something, go with the flow and try to work with the design choices, not against them.

Python simply writes a bit differently: you do e.g. more function definitions and list comprehensions.

C is dangerous like your uncle who drinks and smokes. Y’wanna make a weedwhacker-powered skateboard? Bitchin’! Nail that fucker on there good, she’ll be right. Get a bunch of C folks together and they’ll avoid all the stupid easy ways to kill somebody, in service to building something properly dangerous. They’ll raise the stakes from “accident” to “disaster.” Whether or not it works, it’s gonna blow people away.

C++ is dangerous like a quiet librarian who knows exactly which forbidden tomes you’re looking for. He and his… associates… will gladly share all the dark magic you know how to ask about. They’ll assure you, oh no no no, the power cosmic would never turn someone inside-out, without sufficient warning. They don’t question why a loving god would allow the powers you crave. They will show you which runes to carve, and then, they will hand you the knife.

This is so believable. You copy a few examples out of a textbook using cout and cin and it seems reasonably in line with other languages.

A friend of mine whose research group works on high throughput X-ray Crystallography had to learn C++ for his work, and he says that it was like “wrangling an unhappy horse”.

I’m not sure how I feel about someone controlling an X-ray machine with C++ when they haven’t used the language before… At least it’s not for use on humans.

Yep, I learned about this exact case when I got my engineering degree.

He doesn’t directly control anything with C++ — it’s just the data processing. The gist of X-ray Crystallography is that we can shoot some X-rays at a crystallised protein, that will scatter the X-rays due to diffraction, then we can take the diffraction pattern formed and do some mathemagic to figure out the electron density of the crystallised protein and from there, work out the protein’s structure

C++ helps with the mathemagic part of that, especially because by “high throughput”, I mean that the research facility has a particle accelerator that’s over 1km long, which cost multiple billions because it can shoot super bright X-rays at a rate of up to 27,000 per second. It’s the kind of place that’s used by many research groups, and you have to apply for “beam time”. The sample is piped in front of the beam and the result is thousands of diffraction patterns that need to be matched to particular crystals. That’s where the challenge comes in.

I am probably explaining this badly because it’s pretty cutting edge stuff that’s adjacent to what I know, but I know some of the software used is called CrystFEL. My understanding is that learning C++ was necessary for extending or modifying existing software tools, and for troubleshooting anomalous results.

Neat, thanks for sharing. Reminds me of old mainframe computers where students and researchers had to apply for processing time. Large data analysis definitely makes sense for C++, and it’s pretty low risk. Presumably you’d be able to go back and reprocess stuff if something went wrong? Or is more of a live-feed that’s not practical to store?

The data are stored, so it’s not a live-feed problem. It is an inordinate amount of data that’s stored though. I don’t actually understand this well enough to explain it well, so I’m going to quote from a book [1]. Apologies for wall of text.

“Serial femtosecond crystallography [(SFX)] experiments produce mountains of data that require [Free Electron Laser (FEL)] facilities to provide many petabytes of storage space and large compute clusters for timely processing of user data. The route to reach the summit of the data mountain requires peak finding, indexing, integration, refinement, and phasing.” […]

"The main reason for [steep increase in data volumes] is simple statistics. Systematic rotation of a single crystal allows all the Bragg peaks, required for structure determination, to be swept through and recorded. Serial collection is a rather inefficient way of measuring all these Bragg peak intensities because each snapshot is from a randomly oriented crystal, and there are no systematic relationships between successive crystal orientations. […]

Consider a game of picking a card from a deck of all 52 cards until all the cards in the deck have been seen. The rotation method could be considered as analogous to picking a card from the top of the deck, looking at it and then throwing it away before picking the next, i.e., sampling without replacement. In this analogy, the faces of the cards represent crystal orientations or Bragg reflections. Only 52 turns are required to see all the cards in this case. Serial collection is akin to randomly picking a card and then putting the card back in the deck before choosing the next card, i.e., sampling with replacement (Fig. 7.1 bottom). How many cards are needed to be drawn before all 52 have been seen? Intuitively, we can see that there is no guarantee that all cards will ever be observed. However, statistically speaking, the expected number of turns to complete the task, c, is given by:

$$c = n \sum_{k=1}^{n} \frac{1}{k}$$

where n is the total number of cards. For large n, c converges to n*log(n). That is, for n = 52, it can reasonably be expected that all 52 cards will be observed only after about 236 turns! The problem is further exacerbated because a fraction of the images obtained in an SFX experiment will be blank because the X-ray pulse did not hit a crystal. This fraction varies depending on the sample preparation and delivery methods (see Chaps. 3–5), but is often higher than 60%. The random orientation of crystals and the random picking of this orientation on every measurement represent the primary reasons why SFX data volumes are inherently larger than rotation series data.

The second reason why SFX data volumes are so high is the high variability of many experimental parameters. [There is some randomness in the X-ray pulses themselves]. There may also be a wide variability in the crystals: their size, shape, crystalline order, and even their crystal structure. In effect, each frame in an SFX experiment is from a completely separate experiment to the others."

“The Realities of Experimental Data” "The aim of hit finding in SFX is to determine whether the snapshot contains Bragg spots or not. All the later processing stages are based on Bragg spots, and so frames which do not contain any of them are useless, at least as far as crystallographic data processing is concerned. Conceptually, hit finding seems trivial. However, in practice it can be challenging.

“In an ideal case shown in Fig. 7.5a, the peaks are intense and there is no background noise. In this case, even a simple thresholding algorithm can locate the peaks. Unfortunately, real life is not so simple”

It’s very cool, I wish I knew more about this. A figure I found for approximate data rate is 5GB/s per instrument. I think that’s for the European XFEL.

Citation: [1]: Yoon, C.H., White, T.A. (2018). Climbing the Data Mountain: Processing of SFX Data. In: Boutet, S., Fromme, P., Hunter, M. (eds) X-ray Free Electron Lasers. Springer, Cham. https://doi.org/10.1007/978-3-030-00551-1_7
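The card-deck number in the quoted passage is the standard “coupon collector” expectation, n times the n-th harmonic number, and it is easy to check. A small sketch follows; `expected_draws` and `expected_frames` are names made up here, and the second function adds my own simplifying assumption that blank frames occur independently with a fixed probability, which the book does not state in this form.

```cpp
#include <cassert>
#include <cmath>

// Expected number of draws (with replacement) until all n distinct
// cards have been seen at least once: n * (1 + 1/2 + ... + 1/n).
double expected_draws(int n) {
    double harmonic = 0.0;
    for (int k = 1; k <= n; ++k) {
        harmonic += 1.0 / k;
    }
    return n * harmonic;
}

// Assumption: every frame is blank independently with probability
// blank_fraction, so on average only (1 - blank_fraction) of the
// frames count as a draw.
double expected_frames(int n, double blank_fraction) {
    return expected_draws(n) / (1.0 - blank_fraction);
}
```

For n = 52 this gives roughly 236 draws, matching the quote, and with 60% blank frames the same deck would need roughly 590 frames under that assumption.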

That’s definitely a non-trivial amount of data. Storage fast enough to read/write that isn’t cheap either, so it makes perfect sense you’d want to process it and narrow it down to a smaller subset of data ASAP. The physics of it is way over my head, but I at least understand the challenge of dealing with that much data.

Thanks for the read!

Probably makes 7 figures working for big pharma though

Unfortunately no. I don’t know any research scientists who even make 6 figures. You’re lucky to break even 50k if you’re in academia. Working in industry gets you better pay, but not by too much. This is true even in big pharma, at least on the biochemical/biomedical research front. Perhaps non-research roles are where the big bucks are.

Last time I did anything on the job with C++ was about 8 years ago. Here’s what I learned. It may still be relevant.

- C++14 was alright, but still wasn’t everything you need. The language has improved a lot since, so take this with a grain of salt. We had to use Boost to really make the most of things and avoid stupid memory management problems through use of smart (ref-counted) pointers. The overhead was worth it.

- C++ relies heavily on idioms for good code quality that can only be learned from a book and/or the community. “RAII” is a good example here. The language itself is simply too flexible and low-level to force that kind of behavior on you. To make matters worse, idiomatic practices wind up adding substantial weight to manual code review, since there’s no other way to enforce them or check for their absence.

- I wound up writing a post-processor to make sense of template errors since it had a habit of completely exploding any template use to the fullest possible expression expansion; it was like typedefs didn’t exist. My tool replaced common patterns with expressions that more closely resembled our source code. This helped a lot with understanding what was actually going wrong. At the same time, it was ridiculous that was even necessary.

- A team style guide is a hard must with C++. The language spec is so mindbogglingly huge that no two “C++ programmers” possess the same experience with the language. Yes, their skillsets will overlap, but the non-overlapping areas can be quite large and have profound ramifications on coding preferences. This is why my team got into serious disagreements with style and approach without one: there was no tie-breaker to end disagreement. We eventually adopted one after a lot of lost effort and hurt feelings.

- Coding C++ is less like having a conversation with the target CPU and more like a conversation with the compiler. Templates, `const`, `constexpr`, `inline`, and `volatile` are all about steering the compiler to generate the code you want. As a consequence, you spend a lot more of your time troubleshooting code generation and compilation errors than with other languages.

- At some point you will need `valgrind` or at least a really good IDE that’s dialed in for your process and target platform. Letting the rest of the team get away without these tools will negatively impact the team’s ability to fix serious problems.

- C++ assumes that CPU performance and memory management are your biggest problems. You absolutely have to be aware of stack allocation, heap allocation, copies, copy-free, references, pointers, and v-tables, which are needed to navigate the nuances of code generation and how it impacts run-time and memory.

- Multithreading in C++14 was made approachable through Boost and some primitives built on top of pthreads. Deadlocks and races were a programmer problem; the language has nothing to help you here. My recommendation: take a page from Go’s book. Use a really good threadsafe mutable queue, copy (no references/pointers) everything into it, and use it for moving mutable state between threads until performance benchmarks tell you to do otherwise.

- Test-driven design and DevOps best practices are needed to make any C++ project of scale manageable. I cannot stress this enough. Use every automated quality gate you can to catch errors before live/integration testing, as using valgrind and other in-situ tools can be painful (if not impossible).
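For anyone who hasn’t met the RAII idiom mentioned above, here is a minimal sketch of what it buys you. It uses `std::shared_ptr` from the standard library instead of the Boost smart pointers described in the post, and `Tracked` and `live_after_exception` are made-up names for illustration only. The point is that cleanup is tied to scope, so it still happens when an exception is thrown.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>

// Hypothetical resource: counts live instances so cleanup is observable.
struct Tracked {
    static int live;
    Tracked() { ++live; }
    ~Tracked() { --live; }
    Tracked(const Tracked&) = delete;
    Tracked& operator=(const Tracked&) = delete;
};
int Tracked::live = 0;

// Returns the number of live Tracked objects after an exception
// has unwound the scope that owned one.
int live_after_exception() {
    try {
        auto owner = std::make_shared<Tracked>();   // ref-counted owner
        std::shared_ptr<Tracked> second = owner;    // second reference, same object
        throw std::runtime_error("something went wrong");
    } catch (const std::runtime_error&) {
        // Both shared_ptr destructors already ran during stack unwinding,
        // so the Tracked object has been destroyed by the time we get here.
    }
    return Tracked::live;
}
```

There is no explicit `delete` anywhere, which is the whole idea: the compiler won’t force you to write code this way, so a reviewer has to notice when someone didn’t.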

1 - I borrowed this idea from working on J2EE apps, of all places, where stack traces get so huge/deep that there are plugins designed to filter out method calls (sometimes, entire libraries) that are just noise. The idea of post-processing errors just kind of stuck after that - it’s just more data, after all.
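The queue recommendation in the multithreading point could look roughly like the sketch below. This is an assumption-laden illustration, not the code from the post: it uses `std::thread`, `std::mutex`, and `std::condition_variable` from the standard library rather than Boost, and `BlockingQueue` and `sum_via_queue` are hypothetical names. Values are passed by value, so each thread works on its own copy and only the queue itself is shared.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical helper: a minimal blocking queue that owns copies of its items.
template <typename T>
class BlockingQueue {
public:
    // Takes the item by value, so the queue never holds a caller's reference.
    void push(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            items_.push(std::move(value));
        }
        ready_.notify_one();
    }

    // Blocks until an item arrives; returns false once closed and drained.
    bool pop(T& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return !items_.empty() || closed_; });
        if (items_.empty()) return false;
        out = std::move(items_.front());
        items_.pop();
        return true;
    }

    // Signals consumers that no more items will be pushed.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<T> items_;
    bool closed_ = false;
};

// Hypothetical demo: a producer thread sends 1..n, the caller sums them.
long sum_via_queue(int n) {
    BlockingQueue<int> queue;
    std::thread producer([&queue, n] {
        for (int i = 1; i <= n; ++i) queue.push(i);
        queue.close();
    });
    long total = 0;
    int item = 0;
    while (queue.pop(item)) total += item;
    producer.join();
    return total;
}
```

Copying everything is slower than sharing pointers, but it rules out a whole class of races, which fits the advice to keep it this way until benchmarks say otherwise.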

Reminds me of the joke about the guy falling from the top of the Empire State Building who, half way down, was heard saying: “Well, so far, so good”

Reminds me of the start of La Haine.

https://youtu.be/4rD05HsmtIU

I suspect both variants indirectly come from the same source, or maybe La Haine is even indirectly the source for my variant (though I learned this joke a long time ago, possibly before 1995).

By the way, that’s an excellent film intro.

I started to learn C++ once, had a semester of it, and couldn’t wrap my head around the object-oriented part. At some point I looked at learning Objective-C on my own, though I didn’t really use it. I had a 1000x better understanding after an hour.

I learned it while at the same time learning (or really enhancing my previous knowledge of) JavaScript, thanks to an insane mostly-Finnish app development platform known as Qt Creator, which for no rational reason uses C++ for the under-the-hood stuff and JavaScript for the UI front end. Just an absolutely horrible mismatch of mental states. For bonus points, the company I worked for that used this monstrosity for its suite of apps got purchased by a huge west coast company and the apps were shut down and everybody was fired, after two years of my working on this shit.

Something like Ruby is a pretty quick way to get up and running with something easy and object-oriented. Groovy if you already have a JVM running (though Ruby might be easier depending upon your background).

Removed by mod

I would assume so. Grails basically died to SpringBoot (which I thought was sad from years ago as I thought grails did some things better), but I mainly have worked in Go for the last 5 years and a lot of PHP and Java in the 5 before that (then Grails, J2EE, Perl, ASP (pre-dot-net), etc. before all that).

In my country C++ is taught as a base language along with Scratch (not a language, but yk what I mean). I recently started learning Kotlin with Jetpack Compose (the only sane way to learn Kotlin) and I realized I wasted two years of my life learning C++, with 5 more to come as it is mandatory in ICT classes… :((

Which programming language would you like to teach if you were a teacher? P.S. I also learned C++ as my first language.

Idk about other people but just learning c is so logical. You do stupid shit, you get stupid results. Of course there are a lot of bad things with c but at least when you sit down to understand how it works, it works while most oop languages are so detached from the hardware its hard to understand anything. It might be just me but oop breaks my brain. Also ive never coded in c++ but i automatically avoided it. I heard rust has very minimal oop and its just to make things smoother so i may try that.

C++ was my second programming language after BASIC, if that still qualifies as a programming language these days.

I’m not a teacher, and I don’t want to become one tbh.

That said, something like Python is standard, and for good reason IMO. For OOP they usually teach Java here, though I’m not a huge fan. I think Kotlin would be better to teach nowadays. There are other OO languages of course, but I’m of the opinion that after messing around with Python, students should probably use something strongly typed, so that’s JavaScript out - I suppose TypeScript could be used, but IMO it’d be best to keep JS/TS in a web dev specific course.

In Hungary it’s Python and C++ in the curriculum, but on the tests you can usually choose between a few languages.

I learned c from a book from the 80s and then skipped to rust.

The only time I touched c++ was modding games in the early aughts and to try it for a couple coding challenges. I’ve heard templates are a thing of note when it comes to complications but not sure.

As for c# … We don’t talk about that (jk. I had to do it for one or two projects and played with unity a bit ages ago)

deleted by creator

Probably. I think I still have the book in storage back in the US. At some point, I also got “learn c in 24 hours” or something as well.

Honestly C# has grown on me quite a bit. Shakes off some of the bloat of Java and LINQ is pretty handy. God knows I can’t tell you what the distinction is between C# and .NET Core and whatever the hell ASP is.

- C# = Java (the language itself)

- .NET (Core) = Oracle JDK (a runtime and std lib implementation for the language)

- ASP.NET = Spring Boot (the default web framework)

Thank you!!

Instructions unclear, attempted to learn JS

I actually just started learning C++ today.

If Lovecraft were alive today one of his stories would start with this line.

We used C++ based software. Who needs sanity? Clearly overrated.