- cross-posted to:

- [email protected]

- [email protected]

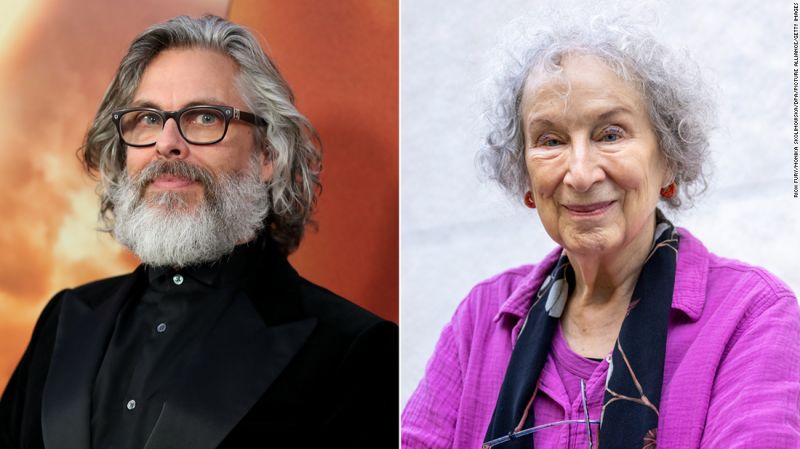

Thousands of authors demand payment from AI companies for use of copyrighted works

Thousands of published authors are requesting payment from tech companies for the use of their copyrighted works in training artificial intelligence tools, marking the latest intellectual property critique to target AI development.

It’s just a really big autocomplete system. It has no thought, no reason, no sense of self or anything, really.

I guess I agree with some of that. It’s mostly a matter of definition, though. Yes, if you define those terms in such a way that AI cannot fulfill them, then AI will not have them (according to your definition).

But yes, we know the AI is not “thinking” or “scheming”, because it just sits there doing nothing when it’s not answering a question; we can see that no computation is happening. So, no thought. Sense of self? Probably not, depending on the definition. Reason? That depends on your definition. Yes, we know they are not like humans; they are computers. But they are capable of many things that, six months ago, we thought only humans could do.

Since we can’t agree on definitions, I will simply avoid all those words and say this: state-of-the-art LLMs can receive text and draw free-form, logical, and correct conclusions from it at a level roughly equal to human ability. They can combine ideas that humans have never combined, yet the results are satisfying to humans. They can invent things that never appeared in their training data, yet still make sense to humans. They can also adapt quickly to new data within their context: you can give them information about a programming language they’ve never encountered before (one not in their training data), and they can make correct suggestions about that language.

I know you can find lots of anecdotes about LLMs/GPT doing dumb things, but most of those involved GPT-3, which is no longer state of the art.