Not a fan of disinformation so I’ll post this here as well:

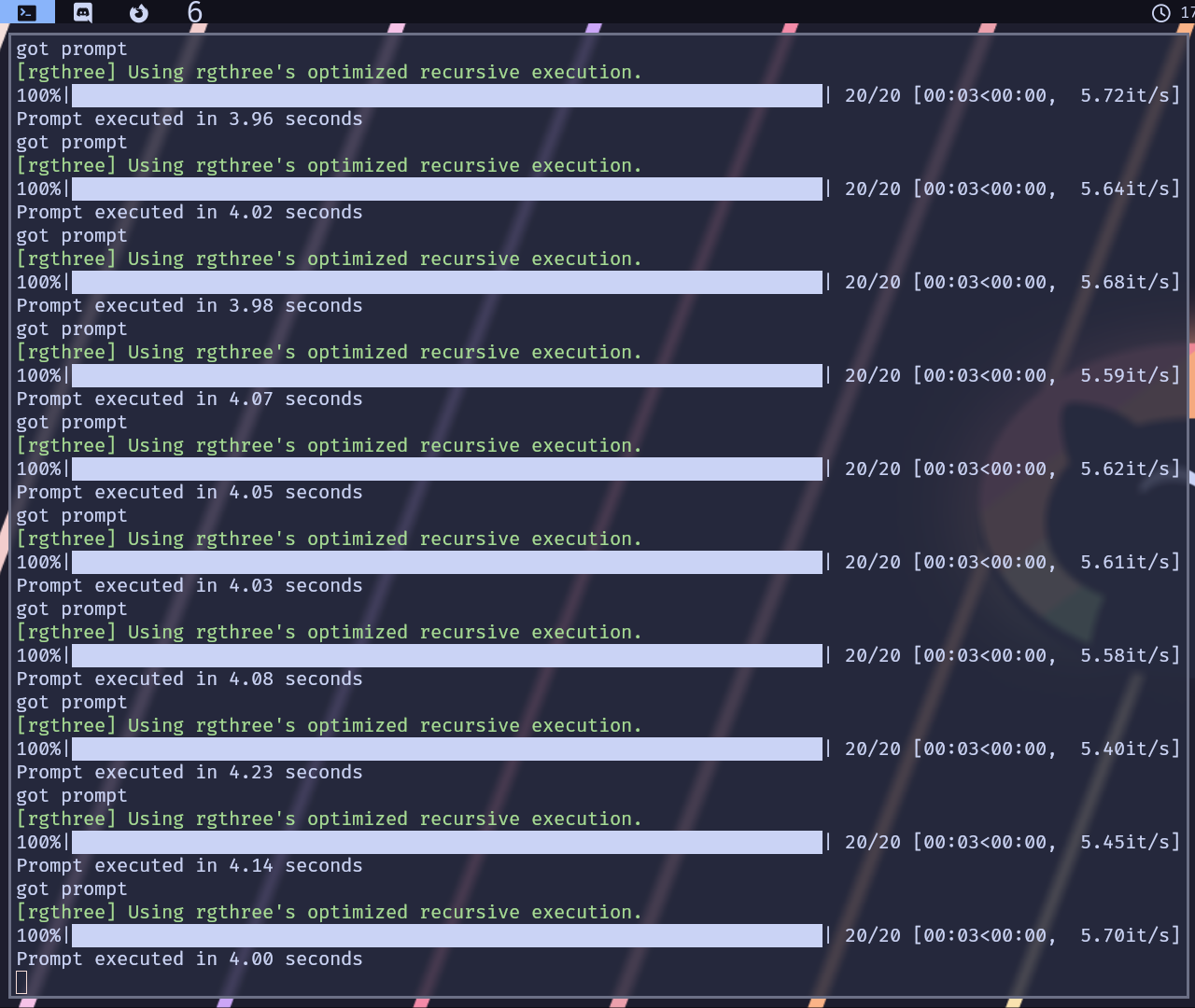

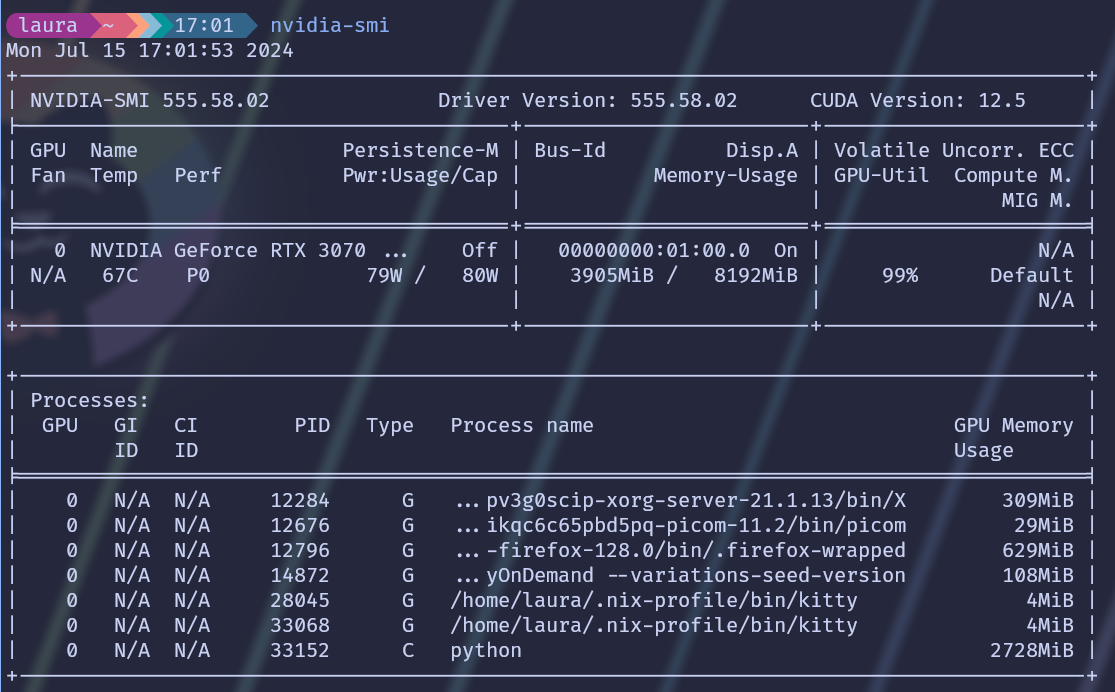

I run ComfyUI locally on my own laptop and generating an image takes 4 seconds, during which my 3070 Laptop GPU uses 80 watts (the maximum amount of power it can use).

It also fully uses one of the 16 threads of my i7-11800H (TDP of 45 W). Let’s overestimate a bit and say it uses 100% of the CPU (even though in reality it’s only 6.25%), which adds 45 watts, for a total of 125 watts (or about 83 watts if you account for the fact that it only uses one thread, since 45 W / 16 ≈ 2.8 W).

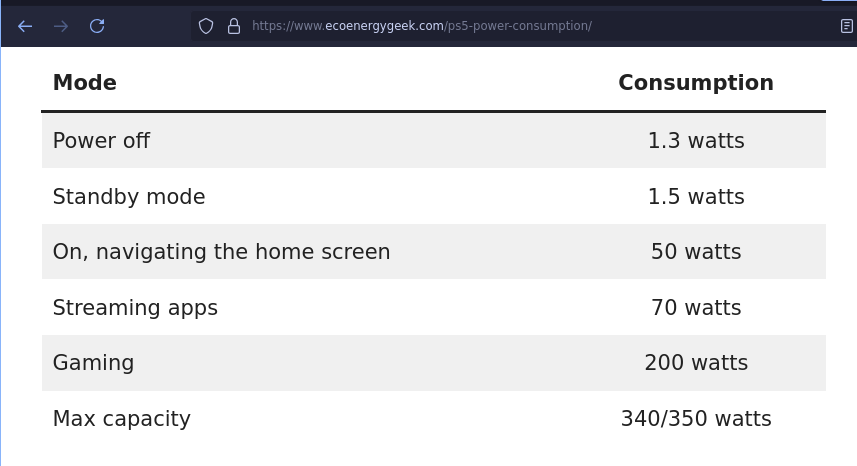

That’s 125 watts for 4 seconds for one image, or about 0.139 Wh (0.000139 kWh). That would be 7200 images per kWh. Playing one hour of Cyberpunk on a PS5 (assuming 200 watts) would be equivalent to me generating 1440 images on my laptop.
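If you want to check the arithmetic or plug in your own hardware, here is a rough Python sketch of the same estimate. The constants are just the figures quoted above (the 200 W PS5 draw is an assumption, as noted), and the function names are made up for illustration.

```python
# Figures quoted in the post; PS5_WATTS is an assumed average draw.
GPU_WATTS = 80.0          # 3070 Laptop GPU at its power limit
CPU_TDP_WATTS = 45.0      # i7-11800H TDP
CPU_THREADS = 16
SECONDS_PER_IMAGE = 4.0
PS5_WATTS = 200.0


def wh_per_image(watts: float, seconds: float) -> float:
    """Energy for one image in watt-hours (watts * seconds / 3600)."""
    return watts * seconds / 3600.0


def images_per_kwh(watts: float, seconds: float) -> float:
    """How many images fit into one kilowatt-hour (1000 Wh)."""
    return 1000.0 / wh_per_image(watts, seconds)


# Overestimate: GPU at max plus the whole CPU at TDP.
worst_case_watts = GPU_WATTS + CPU_TDP_WATTS
# Closer estimate: GPU at max plus one of the 16 CPU threads.
one_thread_watts = GPU_WATTS + CPU_TDP_WATTS / CPU_THREADS

for label, watts in [("worst case", worst_case_watts),
                     ("one thread", one_thread_watts)]:
    per_image = wh_per_image(watts, SECONDS_PER_IMAGE)
    per_kwh = images_per_kwh(watts, SECONDS_PER_IMAGE)
    # One hour of PS5 play uses PS5_WATTS watt-hours.
    per_ps5_hour = PS5_WATTS / per_image
    print(f"{label}: {watts:.1f} W, {per_image:.3f} Wh/image, "
          f"{per_kwh:.0f} images/kWh, {per_ps5_hour:.0f} images per PS5 hour")
```

With the 125 W overestimate this gives the same 0.139 Wh, 7200 images per kWh and 1440 images per PS5 hour as above; the one-thread figure comes out even more favorable.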

“Sources”:

https://www.ecoenergygeek.com/ps5-power-consumption/
