Someone asked how LLMs can be so good at math operations. My response comment kind of turned into a five-paragraph essay, as they tend to do sometimes. Thought I would offer it here and add some references. Maybe spark some discussion?

What do language models do?

LLMs are trained to recognize, process, and construct patterns of language data as high-dimensional manifolds.

Meaning, the model’s job is to structure and compartmentalize the patterns of language into a map where each word and its particular meaning live as pairs of points on a geometric surface. Each point is placed near closely related points in space, connected by related concepts or properties of the word.

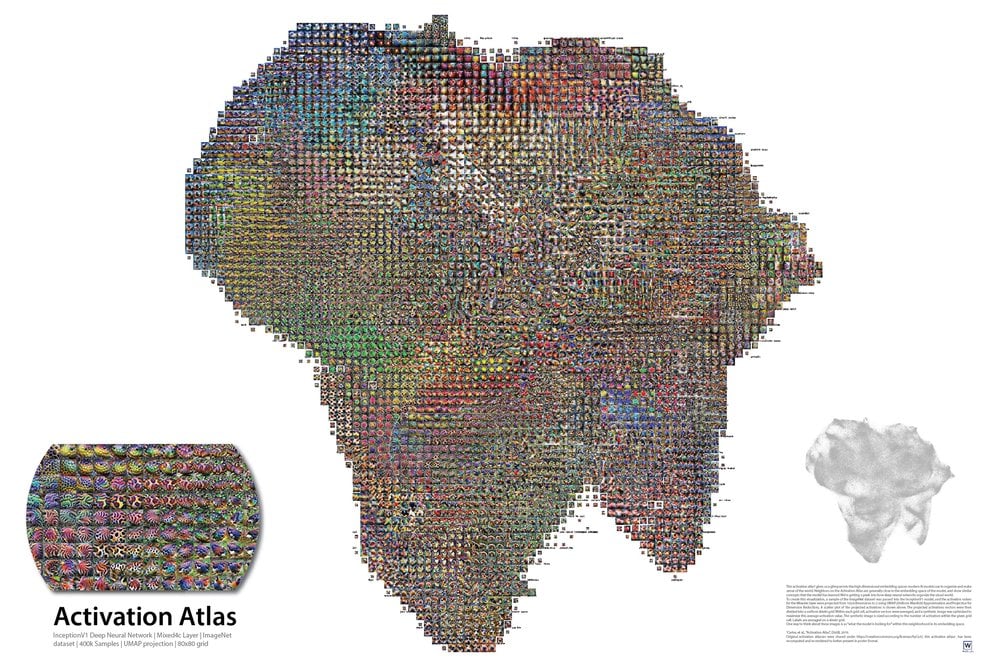

You can explore such a map for vision models here!

Then they use that map to statistically navigate through the sea of ways words can be associated into sentences to find coherent paths.

What does language really mean?

Language data isn’t just words and syntax. It’s underlying abstract concepts, context, and how humans choose to compartmentalize or represent universal ideas given our subjective reference point.

Language data extends to everything humans can construct thoughts about, including mathematics, philosophy, science, storytelling, music theory, programming, etc.

Language is universal because it’s a fundamental way we construct and organize concepts. The first important cognitive milestone for babies is associating concepts with words and constructing sentences with them.

Even the universe speaks its own language. Physical reality and logical abstractions speak the same underlying universal patterns hidden in formalized truths and dynamical operation. Information and matter are two sides of a coin; their structure is intrinsically connected.

Math and conceptual vectors

Math is a symbolic representation of combinatoric logic. Logic is generally a formalized language used to represent ideas related to truth as well as how truth can be built on through axioms.

Numbers and math are cleanly structured and formalized patterns of language data. They’re rigorously described and their axioms are well defined. So it’s relatively easy to train a model to recognize and internalize the patterns inherent to basic arithmetic and linear algebra, and how they manipulate or process the data points representing numbers.

You can imagine the LLM’s data manifold having a section for math and logic processing. The concept of one lives somewhere as a point of data on the manifold. By moving the point representing the concept of one along a vector dimension that represents the process of ‘addition by one’, you arrive at the data point representing two.
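To make the "move along a vector" picture concrete, here is a minimal toy sketch. The 2-D coordinates are invented for illustration (real models learn vectors with thousands of dimensions, and nothing guarantees numbers are laid out this neatly), and the names `embeddings`, `plus_one`, and `step` are hypothetical.

```python
# Toy 2-D "embeddings" for number concepts. These coordinates are made up
# for illustration; they are NOT taken from any real model.
embeddings = {
    "one": (1.0, 0.5),
    "two": (2.0, 1.0),
    "three": (3.0, 1.5),
    "four": (4.0, 2.0),
}

def add(a, b):
    """Component-wise vector addition."""
    return (a[0] + b[0], a[1] + b[1])

def sub(a, b):
    """Component-wise vector subtraction."""
    return (a[0] - b[0], a[1] - b[1])

def nearest(point):
    """Return the word whose embedding is closest to the given point."""
    def dist2(word):
        dx, dy = sub(embeddings[word], point)
        return dx * dx + dy * dy
    return min(embeddings, key=dist2)

# Estimate the "addition by one" direction from a single known pair.
plus_one = sub(embeddings["two"], embeddings["one"])

def step(word):
    """Move a concept along the plus_one direction and snap to the nearest word."""
    return nearest(add(embeddings[word], plus_one))

print(step("one"), step("two"), step("three"))
```

In this toy the direction learned from one pair carries over to the others only because the points were placed on a straight line on purpose.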

Not a calculator though

However, an LLM can never be a true calculator due to the statistical nature of how it selects tokens. It always has a chance of giving the wrong answer. In the infinite multitude of tokens it can pick any number of wrong numbers. We can get the statistical chance of failure down, though.
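A rough sketch of why the chance of a wrong answer never quite reaches zero: the model assigns a score to each candidate token and samples from the resulting probabilities. The scores below are hypothetical numbers for the prompt "2 + 2 =", not measurements from any real model, and `p_wrong` is just an illustrative helper.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw token scores into probabilities that sum to 1."""
    scaled = [score / temperature for score in logits.values()]
    biggest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - biggest) for s in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(logits, exps)}

# Hypothetical scores for the next token after "2 + 2 =".
logits = {"4": 6.0, "5": 2.0, "3": 1.5, "22": 0.5}

def p_wrong(temperature):
    """Probability that sampling picks anything other than the correct token."""
    return 1.0 - softmax(logits, temperature)["4"]

for t in (1.0, 0.5):
    print(f"temperature={t}: chance of a wrong token = {p_wrong(t):.4%}")
```

Lowering the temperature (or training the correct token's score higher) shrinks the failure chance, but with sampling it stays above zero.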

It’s interesting how LLMs can still give accurate answers for arithmetic despite having no built-in calculation function. Through training alone they are learning how to apply simple arithmetic.

Hidden structures of information

There are hidden or intrinsic patterns to most structures of information. Usually you can find the fractal hyperstructures the patterns are geometrically baked into in higher dimensions once you go plotting out their phase space or holomorphic parameter maps. We can kind of visualize these fractals with vision model activation parameter maps. Welch Labs on YouTube has a great video about it.

Modern language models have so many parameters, and the manifold expands into so many dimensions, that it’s impossible to visualize. So they are basically mystery black boxes that somehow understand these crazy fractal structures of complex information and navigate the topological manifolds language data creates.

Conclusion

This is my understanding of how LLMs do their thing. I hope you enjoyed reading! Secretly I just wanted to show you the cool chart :)

Are they good at math, though? I can get ChatGPT to add numbers. But every division and other operation seems to give a wrong result almost every time. I’d rather ask why they’re so bad at maths. And that’s because they aren’t calculators. And due to their nature, it takes them quite some effort to get 53828/28 wrong, which the underlying CPU could calculate in a few nanoseconds. So they aren’t meant to replace calculators…

So, a few weeks ago I was cooking and needed 3 liters of water. My largest measuring cup is 500ml and I couldn’t be bothered to fill it 6 times so I had the brilliant idea of asking chatgpt how high should I fill a pot that’s 22cm in diameter to get 3 liters because I thought it’d be faster than doing the math myself. It told me 7 meters.

I hear that OpenAI and ChatGPT have been smoked by the competition for a while now. I wouldn’t know, I only run open models locally on an old desktop computer.

Here’s what the local DeepSeek distill of qwen2.5-14b has to say about your question. It’s been a while since I had to do volume equations but its answer seems about right. 7.89 cm sounds closer to the right answer than 7 meters anyway lol.

Question: how high should I fill a pot that’s 22cm in diameter to get 3 liters?

Answer: "Alright, let’s tackle this problem. The user wants to know how high they should fill a pot that’s 22 cm in diameter to get 3 liters of water.

First, I need to understand what exactly is being asked here. It seems like they’re trying to determine the height of water when poured into a pot with a specific diameter, such that the volume of water is 3 liters.

Okay, so the pot can be assumed to be cylindrical since pots are typically round and symmetrical. That makes sense because the diameter is given, and we can model it as a cylinder.

Now, the formula for the volume of a cylinder is:

V = πr²h

Where: V is the volume, r is the radius, h is the height.

The user provided the diameter, which is 22 cm. Since the radius is half of the diameter, the radius r would be 11 cm.

The volume they want is 3 liters. I know that 1 liter is equal to 1000 cubic centimeters (cm³), so 3 liters would be 3000 cm³.

So, plugging in the values we have:

3000 = π × 11² × h

Calculating 11²:

11² = 121

So now the equation becomes:

3000 = π × 121 × h

To solve for h, we’ll divide both sides by π × 121:

h = 3000 / (π × 121)

Calculating the denominator first:

π ≈ 3.1416

3.1416 × 121 ≈ 380.132

Now, dividing 3000 by 380.132:

h = 3000 / 380.132 ≈ 7.89 cm

So, the height of the water should be approximately 7.89 cm to get 3 liters.

Since it’s not practical to measure 0.89 cm precisely, rounding up to 8 cm would ensure there’s enough water, but if precision is important, sticking with 7.89 cm is better.

Therefore, the pot should be filled to about 7.89 cm high to hold exactly 3 liters."
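For anyone who wants to double-check the model’s answer, here is a minimal sketch of the same cylinder calculation. It assumes the pot is a perfect cylinder, and the function name `fill_height_cm` is made up for this example.

```python
import math

def fill_height_cm(volume_liters, diameter_cm):
    """Height (cm) that a given volume of water reaches in a cylindrical pot."""
    radius_cm = diameter_cm / 2
    volume_cm3 = volume_liters * 1000  # 1 liter = 1000 cm³
    # V = π r² h, so h = V / (π r²)
    return volume_cm3 / (math.pi * radius_cm ** 2)

height = fill_height_cm(3, 22)
print(f"{height:.2f} cm")  # prints "7.89 cm"
```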

math and arithmetic are 2 different things. Most people suck at both.

Arithmetic is math. I don’t know what you’re trying to say.

Adding numbers and abstracting a concept require 2 different types of intelligence.

No, they don’t. Early arithmetic skill is a very strong predictor of high-level math skill. Despite what you might have heard from the math majors at your uni, mathematicians aren’t bad at arithmetic. Don’t base your opinions off of memes.

Lol. I hope you took that as an invitation to exercise your brain. I mean not everyone needs to know this… But it’s fairly simple maths. A cylinder has a volume of πr²h. So it’s 3000/(3.14×11×11), or a bit less than 8cm.

The smart method is to use your kitchen scale and just weigh stuff. That’s usually easier and quicker with most ingredients. And you generally get fewer measuring cups dirty. Kinda depends on who wrote your recipes, though. Mine usually come with measurements in weight. Whereas very old recipe books and American people often measure in volume. And the kitchen scale won’t really help there, unless you memorize the density of flour and butter 😅

But seriously, weighing 3kg of water is far better than sticking your ruler into the soup and seeing if it levels out at 7.9cm…

I got pots with a liter scale engraved inside. That’s very nice to have.

But I seriously have to try some more mundane stuff with ChatGPT. Like this. Or ask it how to hard-boil my eggs…

I did end up doing it myself. Only tried ChatGPT because that’s where I got the recipe I was following and thought it’d save me some time. I didn’t expect it to screw up a simple division. Weighing the water seems awfully cumbersome, though.

More importantly, what was the recipe and was it any good?

It was good but unfortunately I didn’t save it because I had no intention of eating it again. Nothing to do with the quality of the recipe, I just had to get rid of 300g of fettuccine and half a kilo of ground pork and I don’t think I’ll be in that situation again. It basically told me to season the pork with Italian seasoning, chop onion, garlic and tomatoes, throw everything in a pan with the pasta and top with mozzarella.

Thanks for the answer! I hadn’t thought about asking for recipes based on the specific ingredients you have left.

They will never be as efficient or as certain as calculators, absolutely. Even with function calling it’s better to just use calculators for calculation. Just like they will never replace a definitive database like WolframAlpha for looking up factual information.
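For context on what "function calling" hands off to, here is a minimal sketch of the kind of calculator tool a model could be wired up to call instead of guessing digits. The function name `calculate` and the idea that the model passes a plain expression string are assumptions for illustration; real tool-calling setups differ between providers.

```python
import ast
import operator

# Only these operators are allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

def calculate(expression: str) -> float:
    """Evaluate a plain arithmetic expression without using eval()."""
    def _eval(node):
        # Plain numbers (bool is excluded even though it subclasses int).
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError("unsupported expression")
    return _eval(ast.parse(expression, mode="eval").body)

# The division mentioned earlier in the thread:
print(calculate("53828 / 28"))
```

The model only has to produce the expression text correctly; the exact arithmetic is done by ordinary deterministic code.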

It is surprising that despite not having built-in functions for calculation they still seem to understand algebra concepts, try to work with numbers, and are getting more accurate over time just through training. A calculator can’t reason about what Gödel’s incompleteness theorem implies about the scientific method, but an LLM can try to simulate reasoning about high-level math concepts to generate potential relationships between information.

They are surprisingly good at math operations requiring accuracy when considering how much worse they were at it a few years ago. Not sure if you tried the newest models, but DeepSeek can really keep up with consistent notation across operations, which is a big win in itself.

I agree. Though I don’t think I’m surprised how good they are. I’ve just tried ChatGPT and Llama3 for now. And they struggle with literally everything. Comparing numbers, dividing them, multiplying them… Division so far had a 100% failure rate for me. I think that model just memorized the basic multiplication tables and a few common additions and subtractions. I don’t see it getting the concepts. But I’ll try DeepSeek as you suggested and see how well it does. I doubt it’s far off but I’ll have to check. And I’ll try some middle school maths. Maybe it’s better at reasoning and transforming equations.

(Edit: But I’ve read a bit about AI doing science, inferring things, reasoning etc. And I think it’s a valid use case. Also they sometimes deliver promising results with word problems. Or like recognizing integrals and doing something with them that goes in the right direction. I just wonder if that has any real-world application if they can’t multiply or divide the numbers therein. Unless it’s a number that an elementary school kid can multiply… But you’re right. We can mitigate that by connecting it to a calculator or proper mathematics suite.)

There is a difference between arithmetic and MATH.

They all can’t do division (because of tokenization), but DeepSeek can pretty accurately explain how to change the basis for a linear vector system.
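A toy illustration of the tokenization point, with a big caveat: real tokenizers vary from model to model, and the fixed "chunks of up to three digits" rule below is an assumption chosen purely for illustration, not how any particular model splits numbers.

```python
def chunk_digits(number_text, max_len=3):
    """Toy tokenizer: split a digit string left to right into pieces of at most max_len."""
    return [number_text[i:i + max_len] for i in range(0, len(number_text), max_len)]

# The same leading piece "538" stands for very different quantities
# depending on how many digits happen to follow it.
print(chunk_digits("53828"))  # ['538', '28']  -> "538" means 53,800 here
print(chunk_digits("5382"))   # ['538', '2']   -> "538" means 5,380 here
print(chunk_digits("538"))    # ['538']        -> "538" means 538 here
```

Under a scheme like this the model never sees aligned place values the way long division on paper does, which is one plausible reason digit-level arithmetic is hard for it.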

Yeah I know. I just didn’t feel like proceeding to proper math if it can’t even do arithmetic. But seems you’re all right. It can do things like “Simplify (2+r)²-(2-r)² give a step by step solution.” Guess I’m having too many applied problems or engineering challenges, which usually include some arithmetic. Or numbers that exceed the usual single-digit textbook examples that cancel out in the process, because the numbers have been chosen deliberately.
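For reference, that example simplifies to 8r. A quick sketch of the expansion plus a spot check on a handful of integers (a sanity check, not a proof); the function names are just for this example.

```python
def original(r):
    """The expression as given: (2 + r)² - (2 - r)²."""
    return (2 + r) ** 2 - (2 - r) ** 2

def simplified(r):
    """Expanded by hand: (4 + 4r + r²) - (4 - 4r + r²) = 8r."""
    return 8 * r

# Spot-check the simplification on integers from -5 to 5.
for r in range(-5, 6):
    assert original(r) == simplified(r)

print(original(3), simplified(3))  # prints "24 24"
```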

They’re not good at math. But it’s still impressive that they can mostly get addition right at all, considering they’re a glorified predictive keyboard. And that’s what this post is getting at.

Any old calculator with a lookup table is better than an LLM at math

There is a difference between arithmetic and Maths, LLMs cannot do arithmetic, but the thinking models are really quite good at mathematics these days.

I wanted to divide my workout weights by 4 so that I have 2 dumbbells with the same weight on both sides. Only after I said “hey, this is not possible” did it say “you are correct! This is not possible”

TIL, llm is just a big virtual cat

I thought it was a tooth

This looks like Africa on the left, South America on the right