New-ish to Haskell. Can’t figure out the best way to get Cassava (Data.Csv) to do what I want. Can’t tell if I’m missing some Haskell type idioms or common knowledge or what.

Task: I need to read in a CSV, but I don’t know what the headers/columns are going to be ahead of time. The user will provide input to say which headers from the CSV they want processed, but I won’t know where (index-wise) those columns will be in the CSV, nor how many total columns there will be (either specified by the user or total). Say I have a [] which lists the headers they want.

Cassava is able to read CSVs with and without headers.

Without headers Cassava can read in entire rows, even if it doesn’t know how many columns are in that row. But then I wouldn’t have the header data to filter for the values that I need.

With headers Cassava requires(?) you to define a record type instantiating its FromNamedRecord typeclass, which is how you access parts of the column by name (using the record fields). But in order for this to be well defined you need to know ahead of time everything about the headers: their names, their quantity, and their order. You then emulate that in your record type.

Hopefully I’m missing something obvious, but it feels a lot like I have my hands tied behind my back dealing with the types provided by Cassava.

Help greatly appreciated :)

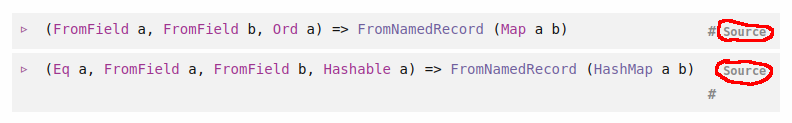

You could use the instances for parsing the data into a list of maps or hashmaps. At the bottom of the `FromNamedRecord` class, you can see that these instances are already specified in the library.

If you need the data in a different format, converting them from a map might be easier than writing your own parser, depending on your abilities and requirements.
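A minimal sketch of that suggestion, assuming the cells can all be treated as `Text`: `decodeByName` yields one `Map` per row, keyed by header name, and the user's header list is then applied as a key filter. The names `decodeRows` and `selectColumns` are made up for illustration; they are not part of Cassava.

```haskell
{-# LANGUAGE OverloadedStrings #-}

import qualified Data.ByteString.Lazy as BL
import Data.Csv (Header, decodeByName)
import Data.Map.Strict (Map)
import qualified Data.Map.Strict as Map
import qualified Data.Set as Set
import Data.Text (Text)
import qualified Data.Vector as V

-- Decode every row into a Map from header name to cell text.
-- The result type is what selects the library's FromNamedRecord (Map a b) instance.
decodeRows :: BL.ByteString -> Either String (Header, V.Vector (Map Text Text))
decodeRows = decodeByName

-- Keep only the columns the user asked for (hypothetical helper).
-- Headers that are not present in the row are silently ignored.
selectColumns :: [Text] -> Map Text Text -> Map Text Text
selectColumns wanted row = Map.restrictKeys row (Set.fromList wanted)
```

Something like `fmap (V.map (selectColumns wanted) . snd) (decodeRows bytes)` would then give the filtered rows, regardless of where the wanted columns sit in the file or how many columns there are in total.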

Ah yeah thanks that works! I didn’t think to look for already pre-defined instances of the typeclass. Though I still wonder how the Parser for Map/HashMap is defined, since Maps can hold an arbitrary number of values rather than a record’s fixed number of fields.

I also wonder why (it seems) I need to put a type annotation that I want to decode the incoming data as a Map, since you don’t have to do that if you call e.g. a field accessor method on one of your custom record instances. That is, if you try to call `Map.size` on an incoming CSV record, you need to explicitly annotate that you want that record decoded as a Map. Whereas if you have a record `data Foo = Foo {bar :: String}` and define its parser in the standard way, you can just call `bar` on the incoming CSV record and it will work, no annotation required.

You can check the source code of nearly any class, instance, or function by clicking on the grey `Source` link on the right.

You need to add type annotations for the map since with `Map.size` Haskell can’t infer the types of the values inside the map. But for the deserialisation process, Haskell needs to know the type inside the map. If you have a record, the types are already specified there.

Right, `Map` is polymorphic while records are concrete, makes sense.

Those parser definitions in the source are enlightening, I see now I was thinking a bit narrowly about how e.g. `parseNamedRecord` could be instantiated for a type.
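To make the annotation point concrete, here is a small sketch (the function name `columnCounts` is invented for the example). `Map.size` works for any key and value type, so on its own it gives the compiler nothing to pick a `FromNamedRecord` instance with. Pinning the row type is what resolves it.

```haskell
{-# LANGUAGE OverloadedStrings #-}

import qualified Data.ByteString.Lazy as BL
import Data.Csv (decodeByName)
import Data.Map.Strict (Map)
import qualified Data.Map.Strict as Map
import Data.Text (Text)
import qualified Data.Vector as V

-- Number of named fields in each row (hypothetical helper).
columnCounts :: BL.ByteString -> Either String [Int]
columnCounts bytes = do
  (_, rows) <- decodeByName bytes
  -- Without this annotation the key and value types are ambiguous,
  -- because Map.size :: Map k a -> Int accepts every k and a.
  let typed = rows :: V.Vector (Map Text Text)
  pure (map Map.size (V.toList typed))
```

With a record like `Foo`, the accessor `bar :: Foo -> String` already fixes the row type to `Foo`, which is why no annotation is needed there.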

I realize that this was posted a couple weeks ago, so hopefully this is still helpful. One thing just to point out is that there’s `(FromField a, FromField b, Ord a) => FromNamedRecord (Map a b)`. That will just parse the CSV to a map, indexed by the field name. You might want a `Map String String`?

Yep thanks that’s exactly what I ended up doing! In my case it was easiest to work with `Map Text Text`.

Types are erased; they don’t exist at runtime. So, if the user is going to provide input at runtime to determine the processed data, you don’t select that data via types (or type class instances).

You probably just want to have Cassava give you a `[[Text]]` value (`FromRecord [Text]` is provided by the library, you don’t have to write it), then present the header line to the user, then process the data based on what they select. Just do the simple thing.

If the CSV file is too big to fit into memory, you might use the `Incremental` module to read just the first record as a `[]`, present that to the user, and then use their input to guide the rest of the processing. But I wouldn’t mess with that until after you have the small case worked out fairly well first.

I should have been clearer that it’s initial input to the program, not queried input during the middle of its runtime.
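For comparison, the `[[Text]]` route suggested above might look roughly like this. It is only a sketch for the small, fits-in-memory case: the header line is read as an ordinary row, and the wanted headers are turned into column indices. `readAll` and `project` are hypothetical names, not Cassava functions.

```haskell
{-# LANGUAGE OverloadedStrings #-}

import qualified Data.ByteString.Lazy as BL
import Data.Csv (HasHeader (NoHeader), decode)
import Data.List (elemIndex)
import Data.Maybe (mapMaybe)
import Data.Text (Text)
import qualified Data.Vector as V

-- Read every line, header line included, as a plain list of cells.
-- NoHeader tells Cassava not to skip the first line.
readAll :: BL.ByteString -> Either String [[Text]]
readAll = fmap V.toList . decode NoHeader

-- Project each data row onto the wanted headers, in the order requested.
-- Headers missing from the file are dropped; short rows skip missing cells.
project :: [Text] -> [[Text]] -> [[Text]]
project _ [] = []
project wanted (header : rows) = map pick rows
  where
    indices = mapMaybe (`elemIndex` header) wanted
    pick row = [row !! i | i <- indices, i < length row]
```

Compared with the map-based approach this preserves the order the user asked for, at the cost of doing the index bookkeeping by hand.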

I think what’s easiest is reading in the named records as hashmaps and dealing with them that way, so that I can do filters/comparisons on the keys (which are the headers). I don’t need anything more specialized that warrants creating a new `FromRecord` instance.

Whenever I see “CSV parsing” I always think “import to sqlite”. I’ve never used Haskell, is this appropriate?

I’ve never worked with Cassava or Haskell, but I’ve done a lot of CSV processing.

Is there a way to just go ahead and read in everything (stop after a dozen or so rows), let the user select what they want, then go ahead and do the real import or processing? That has always been my main tactic across a variety of languages. To minimize user effort, I allowed them to save their choices so that they could just select a saved pattern the next time they got data from that source. Even better, CSVs usually have some kind of consistent pattern in their file names that can be leveraged to recommend or even automatically use a saved pattern.

Of course, that depends on being able to define “Record Types” on the fly from what the user selects/saves. I can’t imagine that being a problem, but, as I said, I’ve never used Haskell or Cassava.