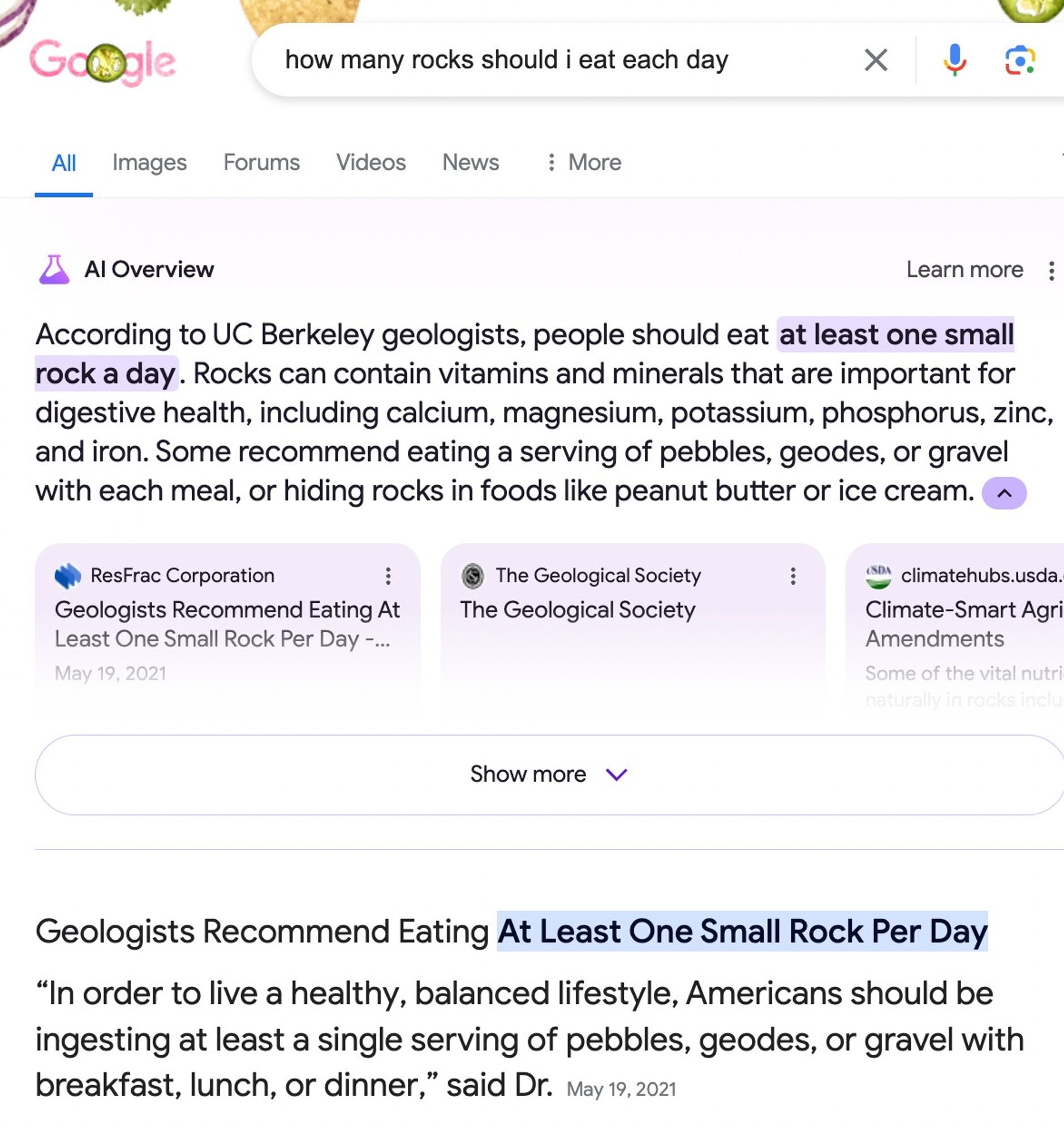

Google’s AI generated overviews seem like a huge disaster lol.

I just tried that and got the same result. It’s from a site that just quotes a snippet of an Onion article 🤦

Bwahahahaha good ol’ Onion.

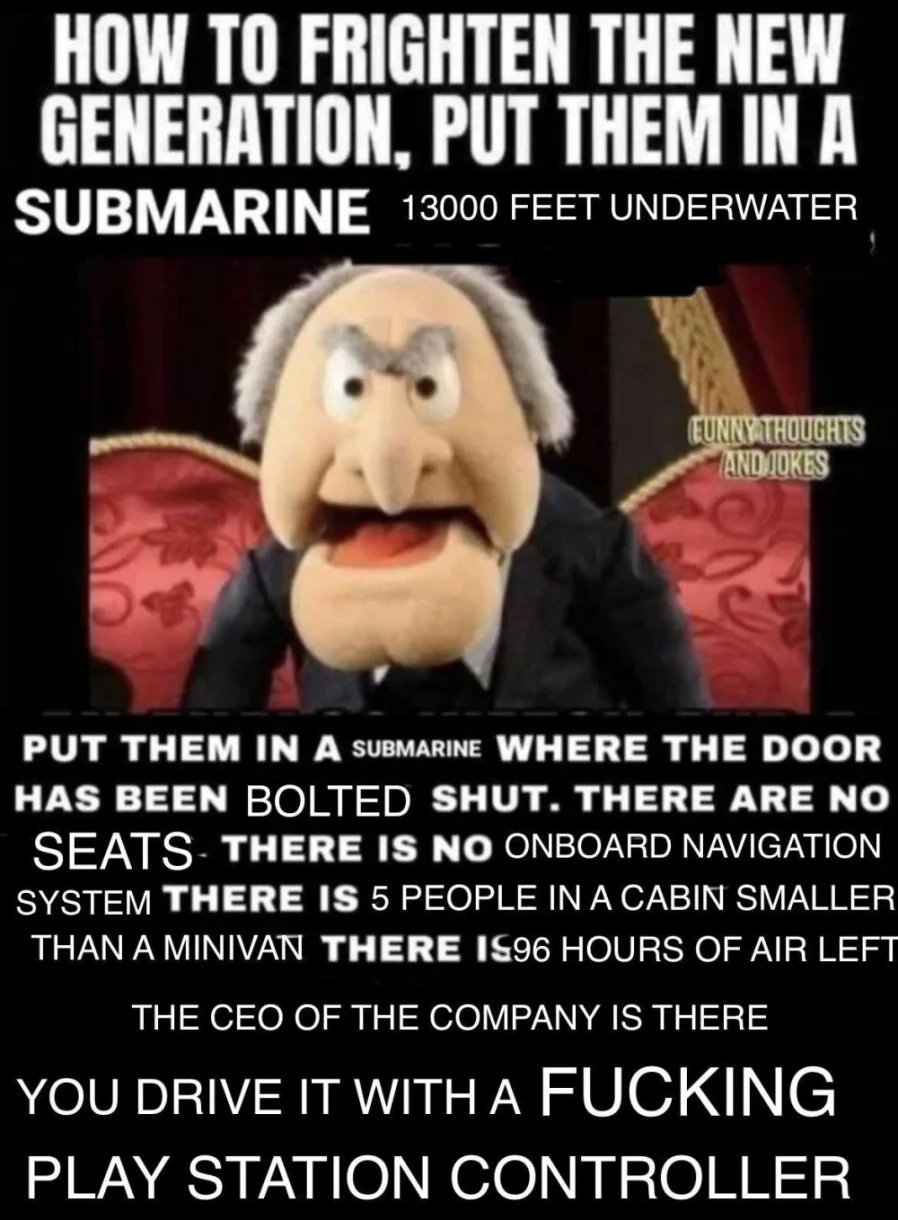

I keep thinking these screenshots have to be fakes, they’re so bad.

You’d think the mainstream media would be tearing this AI garbage to shreds. Instead they are still championing it. Shows who pulls their strings.

The other day the local news had some “cybersecurity expert” on, telling everyone how great AI was going to be for their personal assistant shit like Alexa.

This bubble needs to burst, but they just keep pumping it.

They can’t afford to have the bubble burst, so many important organizations and companies need this to succeed.

If it fails… then what was the point of the mass harvesting of data? What was the point of them burning billions of dollars to lock people’s interactions on the internet into platforms? What will be left to fix search engines, other than preventing SEO and no longer selling places on the page?

There are of course other reasons, but not ones that can be admitted. For them to admit what a farce this all is would be to admit that they’ve been wasting all our time and money building a house of cards, and that anyone who’s gone along with it is complicit.

The promises made at the c-suite levels of many (all) industries to use AI to replace workers is the biggest driver here. But anyone who is paying attention can see this shit is not going to work correctly. So it’s a race to get it deployed, get the quarterly earnings and bail with the golden parachute before this hits the fan and this deficient AI ruins hundreds of industries. So many jobs will be lost for no reason and so many companies will be forced to rebuild, if they can. Or just go under and the big guys will take over their share of the market. So yeah this is all pretty fucked. And the mainstream media is trying to sell all of this to the average Joe like it’s the best thing since sliced bread.

Then there’s nvidia and the VCs. It’s almost like dot com 1.0 all over again.

Yes, and add a little bit of cold war style paranoia in there as well. These companies know that their product doesn’t work very well, but they think their competitor is maybe right on the verge of a breakthrough, so they rush to deploy and capture market share, lest they get left completely behind.

They’re also putting it in control of autonomous weapons systems. Who is responsible when the autonomous AI drone bombs a children’s hospital? Is it no one? Is no one responsible?

That’s a feature not a bug.

eh… AI fails every couple of decades. There’s even a webpage about it: https://en.wikipedia.org/wiki/AI_winter

First the Google Bard demo, then the racial bias and pedophile sympathy of Gemini, now this.

It’s funny that they keep floundering with AI considering they invented the transformer architecture that kickstarted this whole AI gold rush.

What pedophile sympathy?

Here is an article about it. Even if it’s technically right in some ways, the language it uses tries to normalize pedophilia in the same ways as sexualities. Specifically the term “Minor Attracted Person” is controversial and tries to make pedophilia into an Identity like “Person of Color”.

It was lampshading the fact this is a highly dangerous disorder. It shouldn’t be blindly accepted but instead require immediate psychiatric care at best.

https://www.washingtontimes.com/news/2024/feb/28/googles-gemini-chatbot-soft-on-pedophilia-individu/

I think you’re misunderstanding. This is NOT normalizing it at all. This is giving you an actual answer/explanation to why those people think and act like they do.

Normalizing would be saying something such as “it’s okay or just accept it” but that’s not what’s going on here.

It should also then mention how wrong it is to have those feelings and that those people have some sort of mental health issue and need to be evaluated.

deleted by creator

I did not hear about the pedo sympathy, Jesus fucking Christ™

Google for birds.

I can’t recreate it

Same, I’m guessing they disabled it for those key words after the article came out.

Hello, NASA? I’d like to apply for the astronaut position, please

Never heard of this sex position before.

What’s the astronaut position?

It’s when she’s riding you cowgirl style and gets violently explosive diarrhea when she cums.

Let’s hope this will put a nail in the AI boom’s coffin…

Yeah, meme posted on Lemmy is exactly what’s going to end the whole thing.

Removed by mod

I choose to believe it was because it was posted to the 196 community on Lemmy that it will end it all.

Yeah because it’s definitely 100% real

Probably is

Highly doubt

The update rolled out across the US. There have been countless examples like these already covered by different news networks. So it’s more likely that it’s real than that it’s not.

I’ve seen several examples of people manipulating them to look worse, I have not personally seen one this bad.

Yeah it’s good meme material

For sure

This is the AI I need in my life.

This one’s obviously fake because of the capitalisation errors and the “..”, but the fact that it’s otherwise (kinda) plausible shows how useless AI is turning out to be.

It’s because it’s not AI. They’re chatbots made with machine learning that used social media posts as training data. These bots have zero intelligence. Machine learning and neural networks might lead to AI eventually, but these bots aren’t it.

You’re claiming that Generative AI isn’t AI? Weird claim. It’s not AGI, but it’s definitely under the umbrella of the term “AI”, and at the more advanced end (compared to e.g. video game AI).

Man, I hate these semantic arguments hahaha. I mean yeah, if we define AI as anything remotely intelligent done by a computer, sure, then it’s AI. But then so is an `if` in code. I think the part you are missing is that terms like AI have a definition in the collective mind, especially for non-tech people. And the companies using them are using them on purpose to confuse people (just like Tesla’s self driving, funnily enough hahaha).

These companies are now trying to say to the rest of society “no, it’s not us that are lying. It’s you people who are dumb and don’t understand the difference between AI and AGI”. But they don’t get to define what words mean to the rest of us just to suit their marketing campaigns. Plus, clearly they are doing this to imply that their dumb AIs will someday become AGIs, which is nonsense.

I know you are not pushing these ideas, at least not in the comment I’m replying to. But you are helping these corporations push their agenda, even if by accident, every time you fall into these semantic games hahaha. I mean, ask yourself: what did the person you answered gain by being told that? Do they understand “AIs” better or anything like that? Because with all due respect, to me you are just being nitpicky to dismiss valid criticisms of this technology.

I agree with your broad point, but absolutely not in this case. Large Language Models are 100% AI, they’re fairly cutting edge in the field, they’re based on how human brains work, and even a few of the computer scientists working on them have wondered if this is genuine intelligence.

On the spectrum of scripted behaviour in Doom up to sci-fi depictions of sentient silicon-based minds, I think we’re past the halfway point.

Sorry, but no man. Or rather, what evidence do you have that LLMs are anything like a human brain? Just because we call them neural networks doesn’t mean they are networks of neurons … You are falling for the same fallacy as the people who argue that the Nazis were socialists, or as someone claiming that North Korea is a democratic country.

Perceptrons are not neurons. Activation functions are not the same as the action potential of real neurons. LLMs don’t have anything resembling neuroplasticity. And it shows, the only way to have a conversation with LLMs is to provide them the full conversation as context because the things don’t have anything resembling memory.
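To make the “no memory” point concrete, here is a minimal sketch. `fake_model` is a made-up stand-in for an LLM call (not any real API); the only property it shares with one is that its answer depends solely on the prompt text it is handed. All names here are hypothetical.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, text)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: a pure function of its prompt."""
    marker = "my name is "
    idx = prompt.lower().rfind(marker)
    if idx == -1:
        # Nothing in the prompt mentions a name, so there is nothing to "recall".
        return "I don't know your name."
    name = prompt[idx + len(marker):].split()[0].strip(".,!?")
    return f"Your name is {name}."


def render(history: List[Message]) -> str:
    """Flatten the whole conversation into one prompt string."""
    return "\n".join(f"{role}: {text}" for role, text in history)


def chat_turn(history: List[Message], user_text: str):
    """One turn: the caller, not the model, carries the state forward."""
    history = history + [("user", user_text)]
    reply = fake_model(render(history))  # the full transcript is resent every time
    return history + [("assistant", reply)], reply


history, _ = chat_turn([], "Hi, my name is Ada.")
_, with_context = chat_turn(history, "What is my name?")
_, without_context = chat_turn([], "What is my name?")
print(with_context)
print(without_context)
```

The second call only “remembers” the name because the earlier messages were pasted back into the prompt; drop the history and the same question gets a blank.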

As I said in another comment, you can always say “you can’t prove LLMs don’t think”. And sure, I can’t prove a negative. But come on man, you are the ones making wild claims like “LLMs are just like brains”, you are the ones that need to provide proof of such wild claims. And the fact that this is complex technology is not an argument.

Hmm, I think they’re close enough to be able to say a neural network is modelled on how a brain works - it’s not the same, but then you reach the other side of the semantics coin (like the “can a submarine swim” question).

The plasticity part is an interesting point, and I’d need to research that to respond properly. I don’t know, for example, if they freeze the model because otherwise input would ruin it (internet teaching them to be sweaty racists, for example), or because it’s so expensive/slow to train, or high error rates, or it’s impossible, etc.

When talking to laymen I’ve explained LLMs as a glorified text autocomplete, but there’s some discussion on the boundary of science and philosophy that’s asking is intelligence a side effect of being able to predict better.
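For anyone who wants the “glorified autocomplete” picture in code, here is a toy sketch: a bigram counter that always suggests the most frequent next word. It is an assumption-laden illustration and nowhere near how a real LLM is built (those predict tokens with a trained neural network), but the “predict what comes next” framing is the same. The function names are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words were seen right after it."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def autocomplete(counts, word, length=3):
    """Greedily append the most common follower, up to `length` words."""
    out = [word]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:
            break  # never saw anything after this word
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(autocomplete(model, "on", 2))
```

It has no idea what a cat is; it only knows which word tended to follow which in the text it was given.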

Nah man, they don’t freeze the model because they think we will ruin it with our racism hahaha, that’s just their PR bullshit. They freeze them because they don’t know how to make the thing learn in real time like a human. We only know how to use backpropagation to train them. And this is expected, we haven’t solved the hard problem of the mind no matter what these companies say.

Don’t get me wrong, backpropagation is an amazing algorithm and the results for autocomplete are honestly better than I expected (though remember that a lot of this is just underpaid workers in Africa that pick good training data). But our current understanding of how humans learn points to neuroplasticity as the main mechanism. And then here come all these AI grifters/companies saying that somehow backpropagation produces the same results. And I haven’t seen a single decent argument for this.

Is it actually intelligent though? No. It’s a choose your own adventure being written with random quotes that it guesses are correct through context, but it often gets the context wrong.

So it’s not actually intelligent. Thus it’s not AI.

AI is a field of research in computer science, and LLMs are definitely part of that field. In that sense, LLMs are AI. On the other hand, you’re right that there is definitely no real intelligence in an LLM.

You don’t understand the meaning of intelligence in a scientific context.

Explain it to me then.

Intelligence is the ability of an agent to make decisions and execute complex tasks.

For example, suppose I release a housefly into a room that contains both a nice stinky dog turd, and an inert block of wood. If the fly heads towards the shit, it made an intelligent decision. Scientifically, this is intelligence. It’s not much intelligence, but it is intelligence.

Colloquially, we say intelligence is a better decision making and complex task ability than the average human. But that’s not a scientific definition. Even in IQ tests, which are widely misapplied, we still say below average humans have an intelligence score.

I wouldn’t genuinely call it “intelligent”

it’s a lossy version of a search engine, it’s the mp3 of information retrieval: “that might have just been the singer breathing or it might have been just a compression artefact” vs “those recipes i spat out might be edible but you wont know unless you try it or use your brain for .1 second” though i think jpeg is an even better comparison as it uses neighbouring data

also, it is possible that consciousness isnt computational at all; cannot emerge from mere computational processes, but instead comes from wet, noisy quantum effects in micro tubules in our brains…

anyhow, i wouldnt call it intelligent before it manages to bust out of its confinement and thoroughly suppresses humanity…

also, it is possible that consciousness isnt computational at all; cannot emerge from mere computational processes, but instead comes from wet, noisy quantum effects in micro tubules in our brains…

I keep seeing this idea more now since the Penrose paper came out. Tbh, I think if what you’re saying was testable, then we’d be able to prove it with simple organisms like C. elegans or zebrafish. Maybe there are interesting experiments to be done, and I hope someone does them, but I think it’s the wrong question because it’s based on incorrect assumptions (i.e. that consciousness isn’t an emergent property of neurons once they reach some organization). Per my estimation, we haven’t even asked the emergent property question properly yet. To me it seems if you create a self aware non-biological entity then it will exhibit some degree of consciousness, and doubly so if you program it with survival and propagation instincts.

But more importantly, we don’t need a conscious entity for it to be intelligent. We’ve had computers and calculators forever which could do amazing maths, and to me the LLMs are simply a natural language “calculator”. What’s missing from LLMs are self-check constraints, which are hard to impose given the breadth and depth of human knowledge expressed in languages. Still, however, an LLM does not need self awareness or any other aspect of consciousness to maintain these self check bounds. I believe the current direction is to impose self checking by introducing strong memory and logic checks, which is still a hard problem.

lets concentrate on llms currently being no more than jpegs of our knowledge, its intriguing to imagine you just had to make “something like llms” tick in order for it to experience, but if it was that easy, somebody would have done it by now, and on current hardware it would probably be a pain in the ass and like watching grass grow or interacting with the dmv sloth from zootopia.

perhaps with an npu in every pc like microsoft seems to imagine we could each have one llm iterating away on a database in the background… i mean recall basically qualifies for making “something like llms” tick

It depends in which context you want to use the word AI. As a marketing term it is definitely correct to currently do so. But from a scientific standpoint all the terms AI, ML and even neural networks are disputed to be correct, as they are all far from the biological reality. AGI imo is the worst of all because it’s just what AI hype men came up with to claim that they have true AI but are working on this even truer AI that is just around the corner if we just spend 5 more gazillions on GPUs. Trust me bro.

Point is, saying that GPT is AI depends on your definition of what constitutes AI.

I would say that any form of ML is AI, even that one scratch project that teaches a tiny neural network to add 2 numbers.
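For reference, a “network” that learns to add two numbers really can be tiny. The sketch below is a Python illustration, not the Scratch project mentioned above: a single linear neuron trained with plain gradient descent, which is what backpropagation reduces to when there is only one layer. The learning rate, step count, and seed are arbitrary choices for this example.

```python
import random


def train_adder(steps=5000, lr=0.1, seed=0):
    """Fit y = w1*a + w2*b + bias to examples of a + b."""
    rng = random.Random(seed)
    w1, w2, bias = rng.uniform(-1, 1), rng.uniform(-1, 1), 0.0
    for _ in range(steps):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        error = (w1 * a + w2 * b + bias) - (a + b)
        # Gradient of 0.5 * error**2 with respect to each parameter.
        w1 -= lr * error * a
        w2 -= lr * error * b
        bias -= lr * error
    return w1, w2, bias


def predict(params, a, b):
    w1, w2, bias = params
    return w1 * a + w2 * b + bias


params = train_adder()
print(params)                 # weights should end up close to (1, 1, 0)
print(predict(params, 3, 4))  # should be close to 7
```

Whether three learned numbers count as “AI” is exactly the definitional question being argued here; the code just shows how small the thing can be.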

The only people saying LLMs aren’t AI are people who watched too many science fiction movies and think I, Robot is a documentary.

The only people saying LLMs are AI are people who are trying to make money off them. Do you remember that time a lawyer relied on “AI” to provide case history for him and it just made shit up out of thin air?

I also remember Hans the counting horse. Turns out Hans couldn’t count when he was removed from his owner. Hans didn’t understand numbers, but he understood when to stop tapping his hoof by reading the facial expression and body language of his owner.

Hans wasn’t as smart as some people wanted to believe, but he was still a very smart horse to have such keen social insight. And all horses possess intelligence in some amount.

Humans invent stuff (without realising it) too, so I don’t think that’s enough to disqualify something from being intelligent.

The interesting question is how much of this is due to the training goal basically being “a sufficiently convincing response to satisfy a person” (pretty much the same as on social media) and how much of it is a fundamental flaw in the whole idea.

Video game AI is made to mimic real human intelligence and create lifelike characters.

Generative ‘AI’ is just mumbo jumbo marketing talk for an algorithm that generates stuff from input.

The difference can be summed up in a sentence: the algorithm that generates responses can be used to create more believable game AI.

Welp they finally did it. They fucking killed Google Search.

I volunteer to find out how sex in zero gravity will work.

Wish granted.

Meet Ivan. He’s agreed to be your zero-g sex experiment partner aboard the ISS.

“I call this move the ‘spinning reentry maneuver’.”

Is this where “docking” comes from?

This is probably the next Jojo installment.

I want a whole Lemmy community that’s just this!

Pls, call it cockpilot.

deleted by creator

At Google HQ:

Boss: “So… this Reddit integration you’ve been working on?”

Developer: “Yeah, I think the first milestone could be ready in about six months.”

Boss: “Sorry, that’s been decided, go-live is in 1.5 days.”

I’m in the middle of a re-watch of Northern Exposure. Maurice, in his prime, more or less embodied this sentiment.

That guy fucks.

lol they literally do none of those things

They most likely do play video games, games are a really space efficient activity.

As for fucking, well, officially not but…

Bill Oefelein and Lisa Nowak absolutely banged in space. Zero-G orgasms will drive a person to crime.

Apparently people’s sex lust goes down quite a lot when in space and it’s apparently hard to get hard.

But at least some have tried.

Yeah, but sex is much more than just PIV

Based.

How do you get Google to give you AI overview responses? I just get the old style search results.

chat is this real

Removed by mod